Face Recognition

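Face detection (including faces leaving the frame, multiple faces, and facial occlusion), plus detection and output of 256 facial keypoints.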

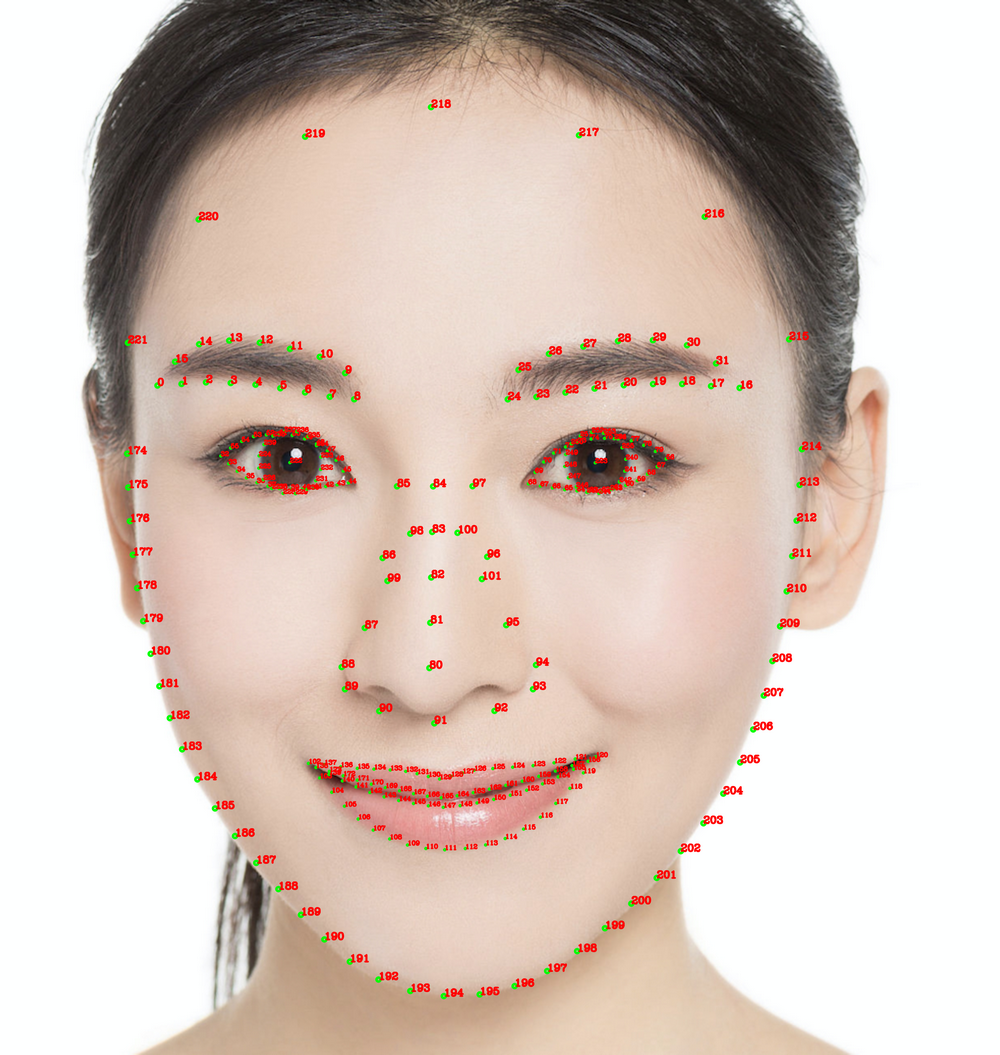

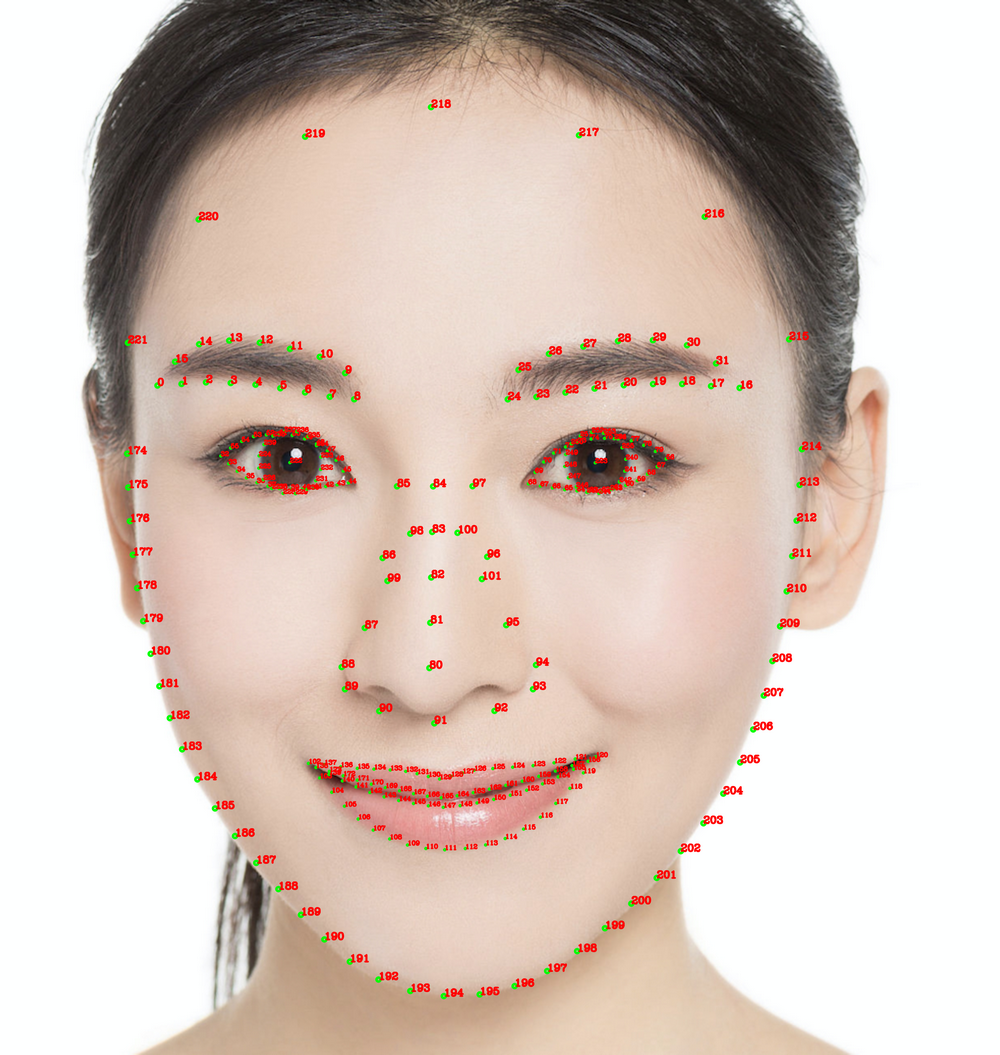

Index map of the 256 face keypoints

iOS API reference

iOS integration guide

Registering Xmagic API callbacks

```objc
/// @brief Registers the SDK event listener
/// @param listener Event listener callback. Events fall into AI events, tips events, and asset events
- (void)registerSDKEventListener:(id<YTSDKEventListener> _Nullable)listener;
```

YTSDKEventListener callbacks

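```objc
#pragma mark - Event callback interface
/// @brief Callback interface for internal SDK events
@protocol YTSDKEventListener <NSObject>
/// @brief YTDataUpdate event callback
/// @param event Callback payload as an NSString*
- (void)onYTDataEvent:(id _Nonnull)event;
/// @brief AI event callback
/// @param event Callback payload as a dictionary
- (void)onAIEvent:(id _Nonnull)event;
/// @brief Tips event callback
/// @param event Callback payload as a dictionary
- (void)onTipsEvent:(id _Nonnull)event;
/// @brief Asset (resource package) event callback
/// @param event Callback payload as a string
- (void)onAssetEvent:(id _Nonnull)event;
@end
```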
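A minimal listener conforming to `YTSDKEventListener` might look like the sketch below. The protocol is redeclared here only so the snippet compiles on its own; in a real project, import the Xmagic SDK header instead. `FaceEventListener`, `lastFacePayload`, and `faceEventCount` are hypothetical names used for illustration.

```objc
#import <Foundation/Foundation.h>

// Stand-in copy of the SDK protocol so this sketch compiles standalone.
// In an app, import the Xmagic SDK header and do not redeclare it.
@protocol YTSDKEventListener <NSObject>
- (void)onYTDataEvent:(id _Nonnull)event;
- (void)onAIEvent:(id _Nonnull)event;
- (void)onTipsEvent:(id _Nonnull)event;
- (void)onAssetEvent:(id _Nonnull)event;
@end

// Hypothetical listener that keeps the most recent per-frame face payload.
@interface FaceEventListener : NSObject <YTSDKEventListener>
@property (nonatomic, strong) id lastFacePayload;
@property (nonatomic, assign) NSUInteger faceEventCount;
@end

@implementation FaceEventListener

// 2.6.0 and earlier: per-frame face data arrives here as an NSString.
- (void)onYTDataEvent:(id _Nonnull)event {
    if ([event isKindOfClass:[NSString class]]) {
        self.lastFacePayload = event;
        self.faceEventCount += 1;
    }
}

// 3.0.0: per-frame face data arrives here in a dictionary under "ai_info".
- (void)onAIEvent:(id _Nonnull)event {
    if (![event isKindOfClass:[NSDictionary class]]) {
        return; // ignore payloads of an unexpected type
    }
    id aiInfo = ((NSDictionary *)event)[@"ai_info"];
    if (aiInfo != nil) {
        self.lastFacePayload = aiInfo;
        self.faceEventCount += 1;
    }
}

// Tips and asset events are only logged in this sketch.
- (void)onTipsEvent:(id _Nonnull)event {
    NSLog(@"tips event: %@", event);
}

- (void)onAssetEvent:(id _Nonnull)event {
    NSLog(@"asset event: %@", event);
}

@end
```

An instance would then be passed to `registerSDKEventListener:` on the app's Xmagic object. Handling both `onYTDataEvent:` and `onAIEvent:` keeps the listener usable across the 2.6.0 and 3.0.0 callback styles described below.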

In version 2.6.0 and earlier, once the listener is registered, face events are delivered for every frame through:

```objc
- (void)onYTDataEvent:(id _Nonnull)event;
```

In version 3.0.0, once the listener is registered, face events are delivered for every frame through:

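```objc
- (void)onAIEvent:(id _Nonnull)event {
    // The per-frame face data can be read from the event dictionary as follows
    if (![event isKindOfClass:[NSDictionary class]]) {
        return;
    }
    NSDictionary *eventDict = (NSDictionary *)event;
    if (eventDict[@"ai_info"] != nil) {
        NSLog(@"ai_info %@", eventDict[@"ai_info"]);
    }
}
```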
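The sample only logs `ai_info`. Its value type is not stated here, so the helper below is a sketch that accepts either an `NSDictionary` or a JSON-encoded `NSString` (an assumption) and normalizes both to a dictionary, returning nil otherwise. `AIInfoFromEvent` is a hypothetical name.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns the "ai_info" payload of an onAIEvent: callback
// as a dictionary. Accepts an NSDictionary value, or an NSString holding a
// JSON object (assumption: the value type is not documented). Returns nil otherwise.
static NSDictionary * _Nullable AIInfoFromEvent(id _Nonnull event) {
    if (![event isKindOfClass:[NSDictionary class]]) {
        return nil;
    }
    id aiInfo = ((NSDictionary *)event)[@"ai_info"];
    if ([aiInfo isKindOfClass:[NSDictionary class]]) {
        return aiInfo;
    }
    if ([aiInfo isKindOfClass:[NSString class]]) {
        NSData *data = [(NSString *)aiInfo dataUsingEncoding:NSUTF8StringEncoding];
        id json = data ? [NSJSONSerialization JSONObjectWithData:data options:0 error:NULL] : nil;
        // Only a top-level JSON object is accepted
        return [json isKindOfClass:[NSDictionary class]] ? json : nil;
    }
    return nil;
}
```

Checking the type before subscripting avoids a crash if the SDK ever delivers a payload that is not a dictionary.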

The callback data is in JSON format. Its fields are described below (the 256 points correspond to the positions in the index map above):

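| Field | Type | Range | Description |
| :---- | :---- | :---- | :---- |
| trace_id | int | [1, INF) | Face ID. During continuous streaming, faces with the same ID can be treated as the same face |
| face_256_point | float | [0, screenWidth] for x, [0, screenHeight] for y | 512 numbers in total for the 256 face keypoints; the top-left corner of the screen is (0, 0) |
| face_256_visible | float | [0, 1] | Visibility of the 256 face keypoints |
| out_of_screen | bool | true/false | Whether the face is out of frame |
| left_eye_high_vis_ratio | float | [0, 1] | Proportion of left-eye keypoints with high visibility |
| right_eye_high_vis_ratio | float | [0, 1] | Proportion of right-eye keypoints with high visibility |
| left_eyebrow_high_vis_ratio | float | [0, 1] | Proportion of left-eyebrow keypoints with high visibility |
| right_eyebrow_high_vis_ratio | float | [0, 1] | Proportion of right-eyebrow keypoints with high visibility |
| mouth_high_vis_ratio | float | [0, 1] | Proportion of mouth keypoints with high visibility |

```objc
/// @note Field reference: see the table above
- (void)onYTDataEvent:(id _Nonnull)event;
```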
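The field list does not say how the 512 numbers in `face_256_point` are ordered, nor how face entries are nested in the payload. The sketch below therefore takes a single face dictionary and assumes the values are interleaved as x0, y0, x1, y1, and so on. `FaceInfo`, `FaceKeypoint`, and the occlusion threshold are hypothetical and not part of the SDK.

```objc
#import <Foundation/Foundation.h>

// One keypoint in screen coordinates; (0, 0) is the top-left corner of the screen.
typedef struct {
    float x;
    float y;
} FaceKeypoint;

enum { kFaceKeypointCount = 256 };

// Hypothetical model for one face entry, built from the documented fields.
@interface FaceInfo : NSObject
@property (nonatomic, assign) NSInteger traceId;
@property (nonatomic, assign) BOOL outOfScreen;
@property (nonatomic, assign) float mouthHighVisRatio;
@property (nonatomic, assign) BOOL hasKeypoints;
- (instancetype)initWithDictionary:(NSDictionary *)dict;
- (FaceKeypoint)keypointAtIndex:(NSUInteger)index;
- (BOOL)isMouthLikelyOccludedWithThreshold:(float)threshold;
@end

@implementation FaceInfo {
    FaceKeypoint _points[kFaceKeypointCount]; // zero-filled by alloc
}

- (instancetype)initWithDictionary:(NSDictionary *)dict {
    self = [super init];
    if (self == nil) {
        return nil;
    }
    // Missing keys yield 0 / NO because messaging nil returns zero.
    _traceId = [dict[@"trace_id"] integerValue];
    _outOfScreen = [dict[@"out_of_screen"] boolValue];
    _mouthHighVisRatio = [dict[@"mouth_high_vis_ratio"] floatValue];

    // Assumption: face_256_point is interleaved, so point i is
    // (values[2 * i], values[2 * i + 1]).
    NSArray *values = dict[@"face_256_point"];
    if ([values isKindOfClass:[NSArray class]] && values.count == 2 * kFaceKeypointCount) {
        for (NSUInteger i = 0; i < kFaceKeypointCount; i++) {
            _points[i].x = [values[2 * i] floatValue];
            _points[i].y = [values[2 * i + 1] floatValue];
        }
        _hasKeypoints = YES;
    }
    return self;
}

- (FaceKeypoint)keypointAtIndex:(NSUInteger)index {
    NSParameterAssert(index < kFaceKeypointCount);
    return _points[index];
}

// Hypothetical heuristic: the threshold is chosen by the caller, not the SDK.
- (BOOL)isMouthLikelyOccludedWithThreshold:(float)threshold {
    return self.mouthHighVisRatio < threshold;
}

@end
```

Comparing a `*_high_vis_ratio` field against a threshold is one simple way to flag partial occlusion of that facial region; a suitable threshold depends on the application and is not specified by the field list.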