Quick Access to Conversational AI Features Without Code

Tencent RTC provides a testing and verification tool that connects to third-party STT, LLM, and TTS services for rapid configuration. With it, you can set up and verify conversational AI services without writing code, which speeds up development and deployment. This article describes how to use the tool.

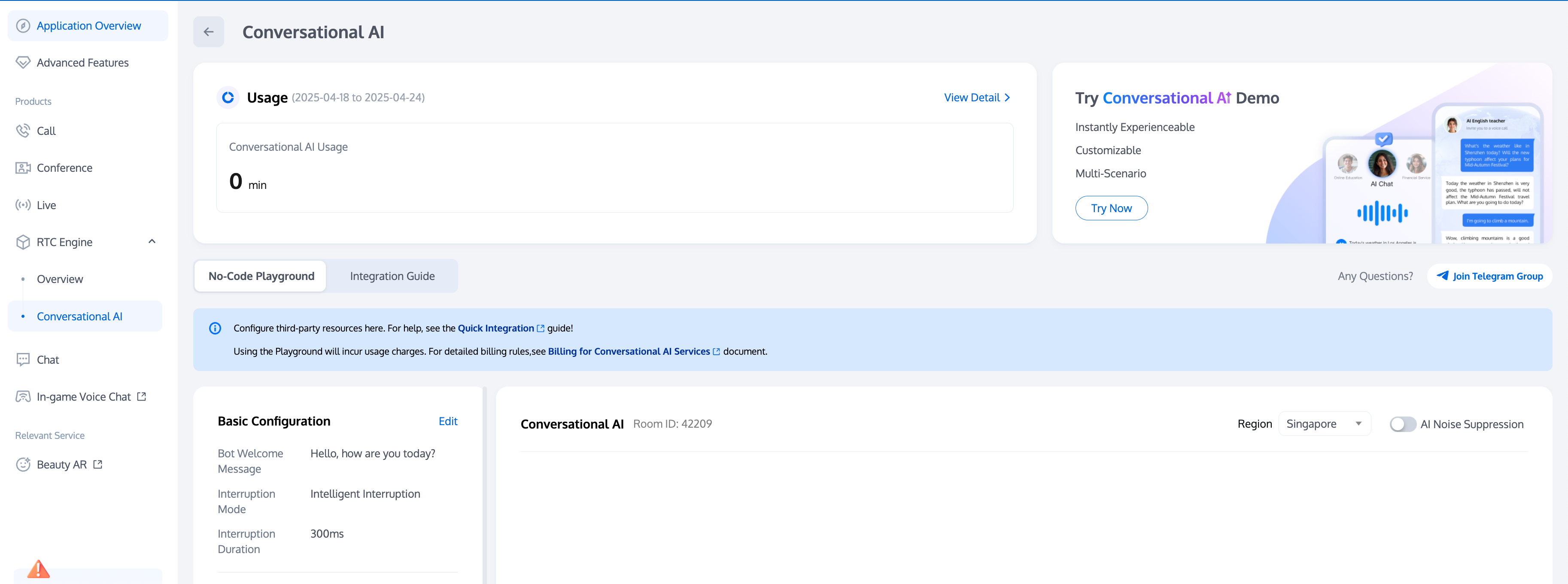

Logging In to the Console

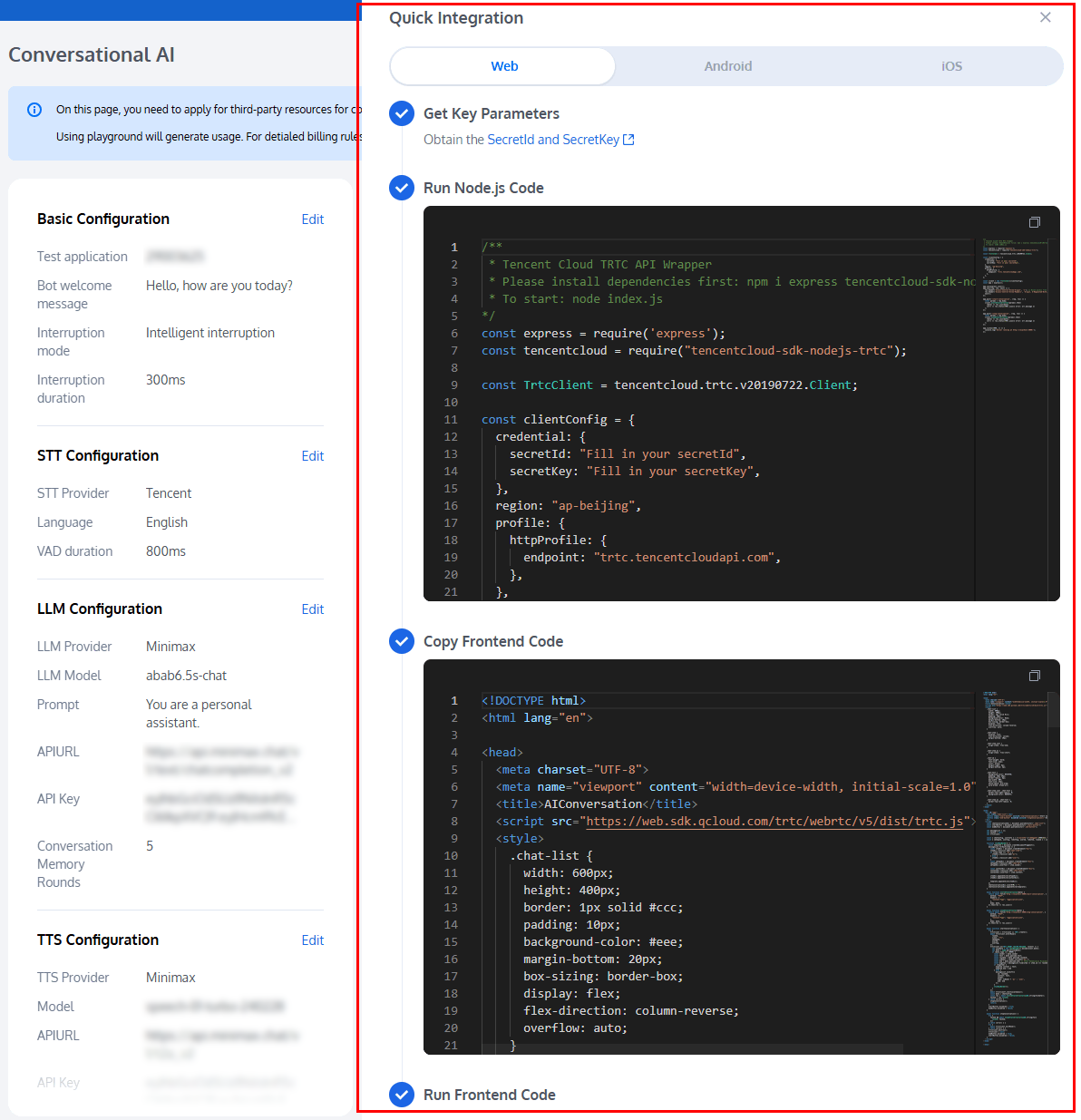

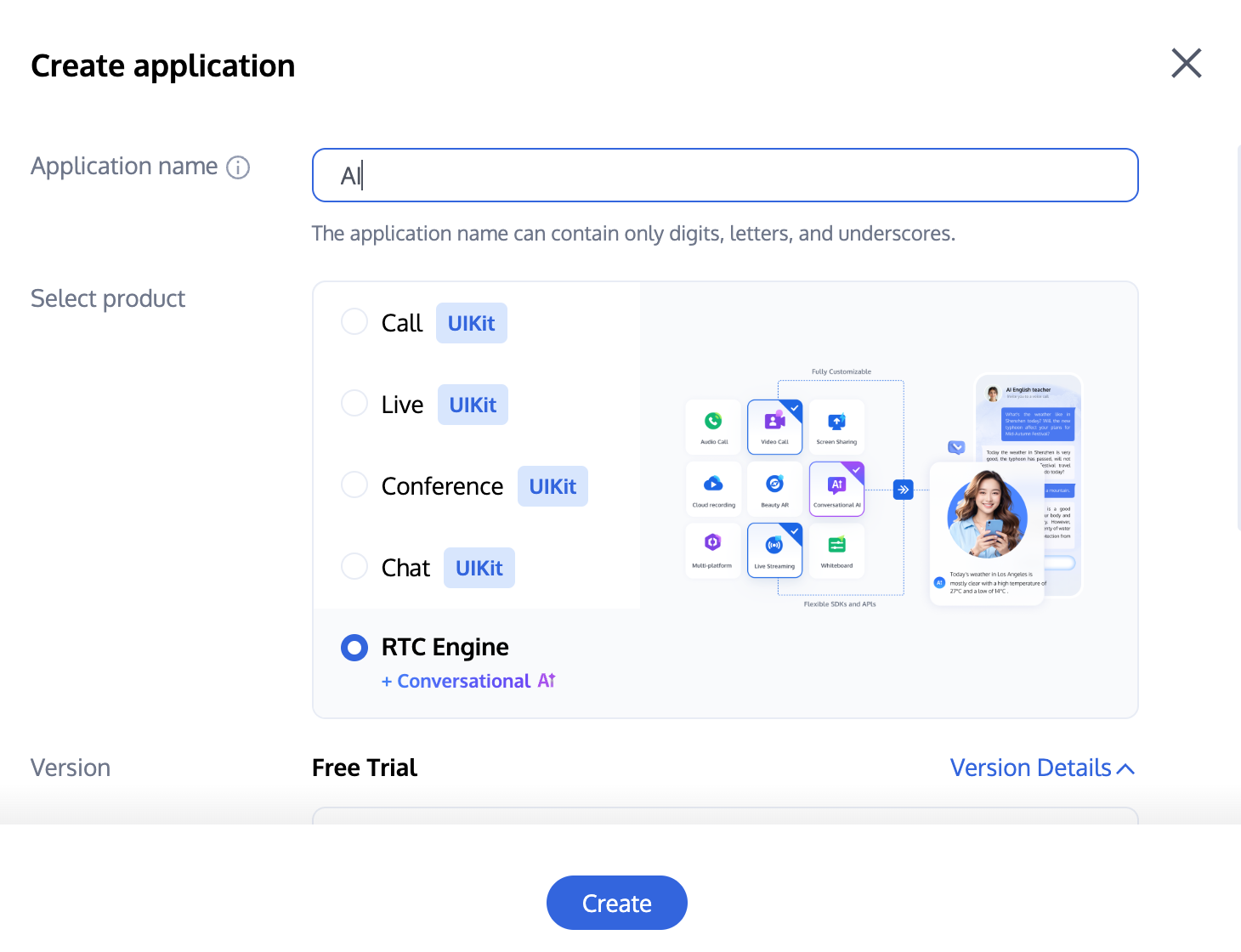

1. Create an RTC Engine application: go to Tencent RTC Console > Create RTC Engine Application.

2. Click Start configuring conversational AI.

3. Select No-Code Playground.

Resource Configuration

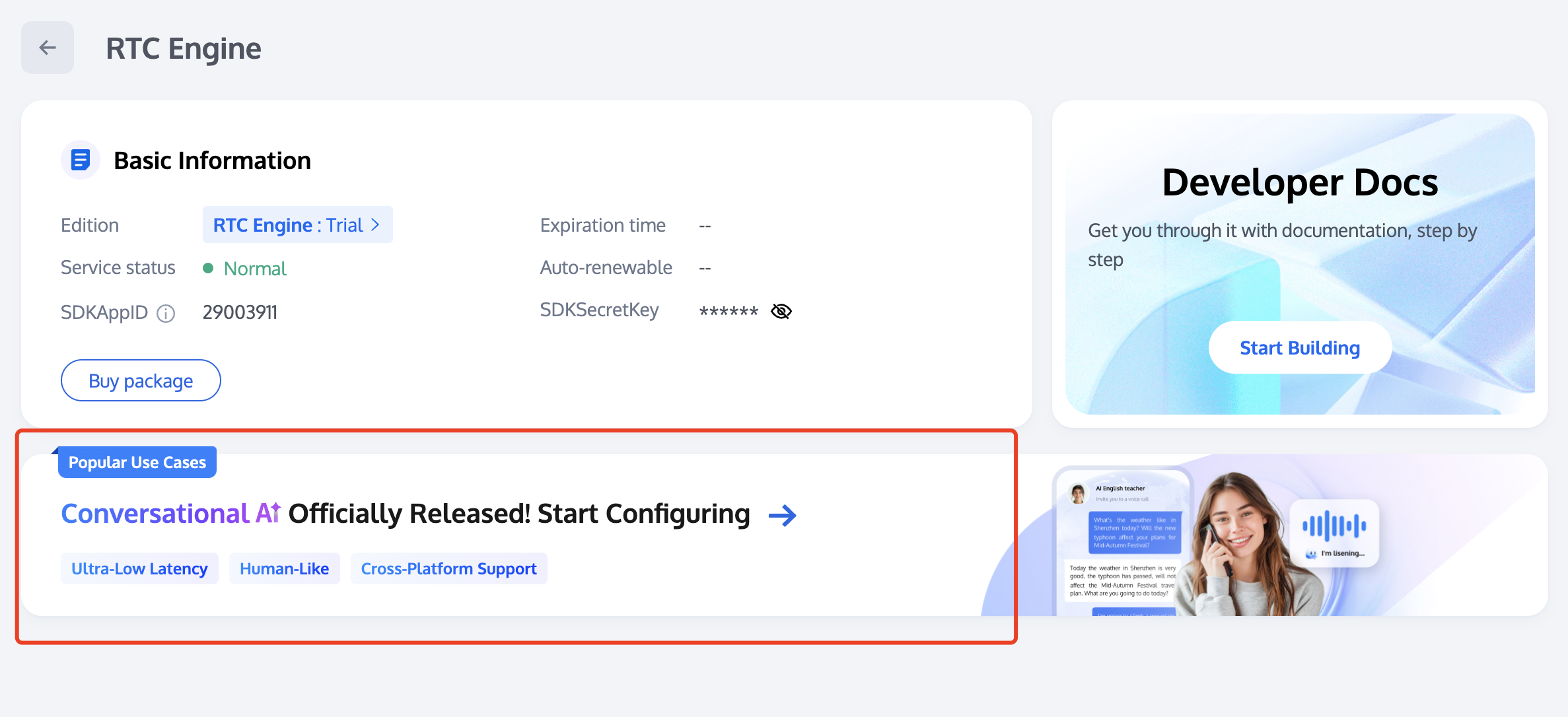

Conversational AI supports integration with multiple STT, LLM, and TTS providers. Click Get Started to quickly configure, test, and integrate conversational AI services here.

Note:

Click Try Demo to experience the capabilities of Conversational AI.

Using the quick conversational AI testing tool will incur usage fees. For billing details, see Billing of Conversational AI Services.

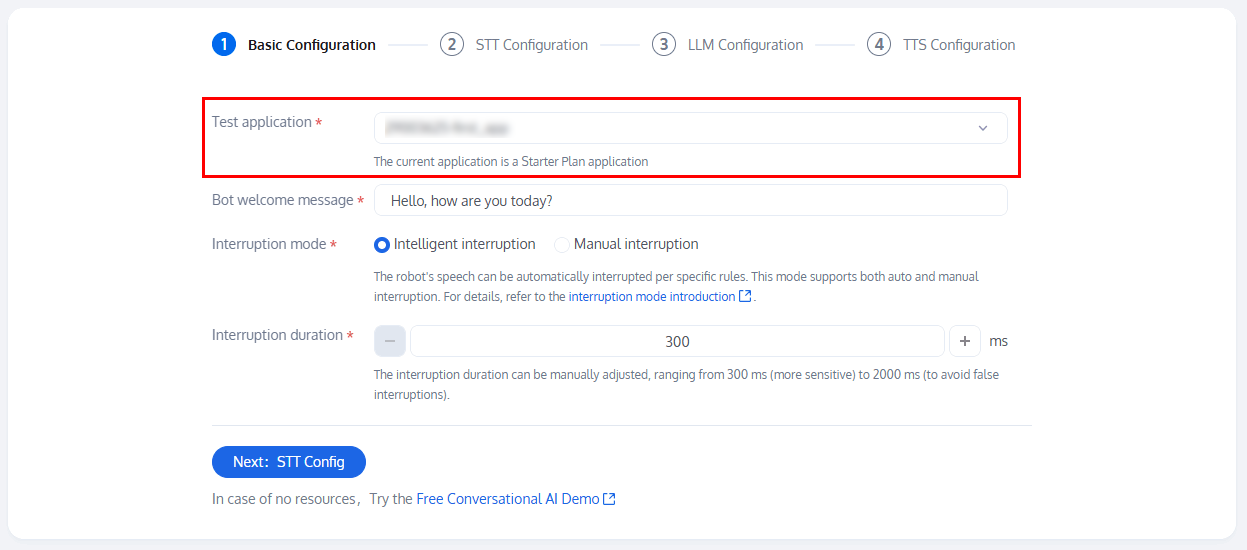

Step 1: Basic Configuration

1. Select an Application

On the Basic Configuration page, first select the application you want to test. To use the AI service and the STT (automatic speech recognition) feature, enable the STT service for the application by purchasing an RTC-Engine Monthly Package (including the Starter Plan).

2. Basic Configuration Items

We provide default parameters, and you can fine-tune the basic configuration items according to your needs. After completing the configuration, click Next.

Fill in the bot welcome message.

Select the interruption mode. Interruption can stop the bot's speech automatically based on specific rules; both automatic and manual interruption are supported. For details, see Intelligent interruption.

Select the interruption duration.

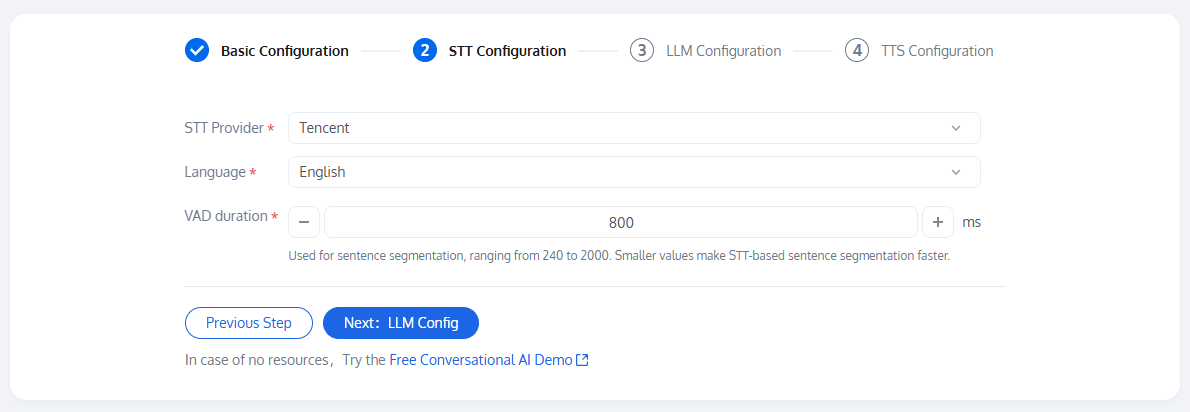

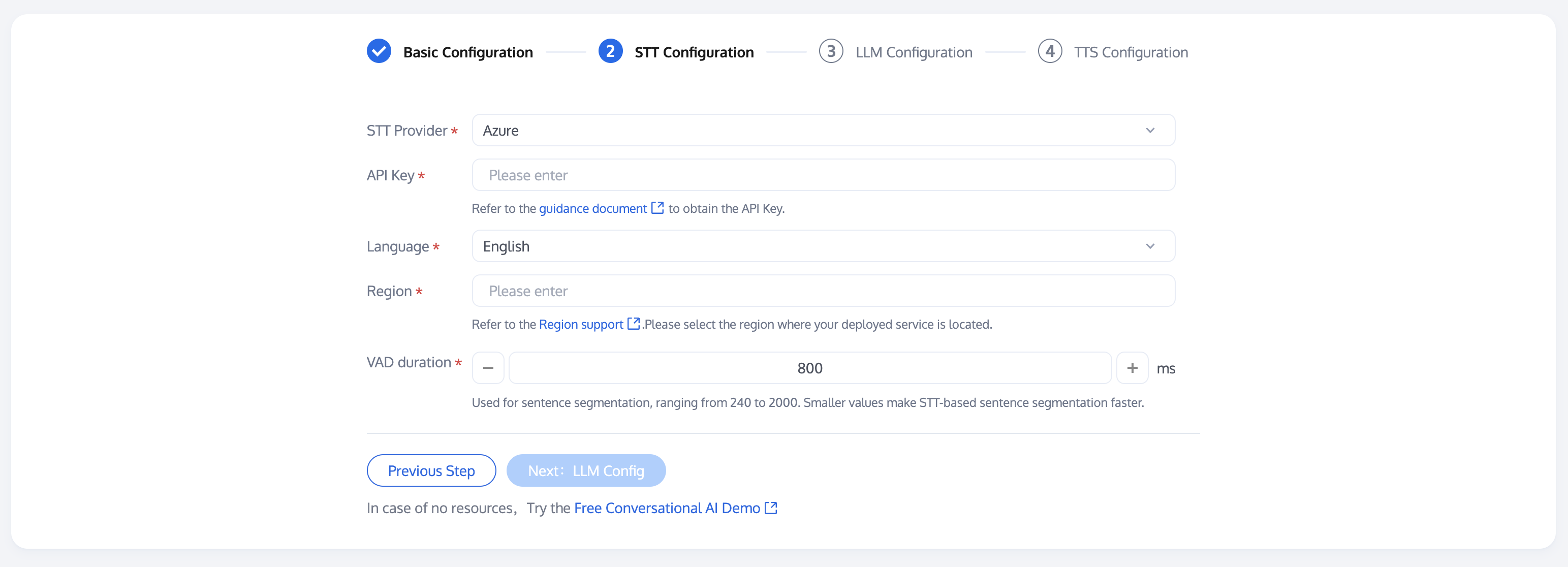

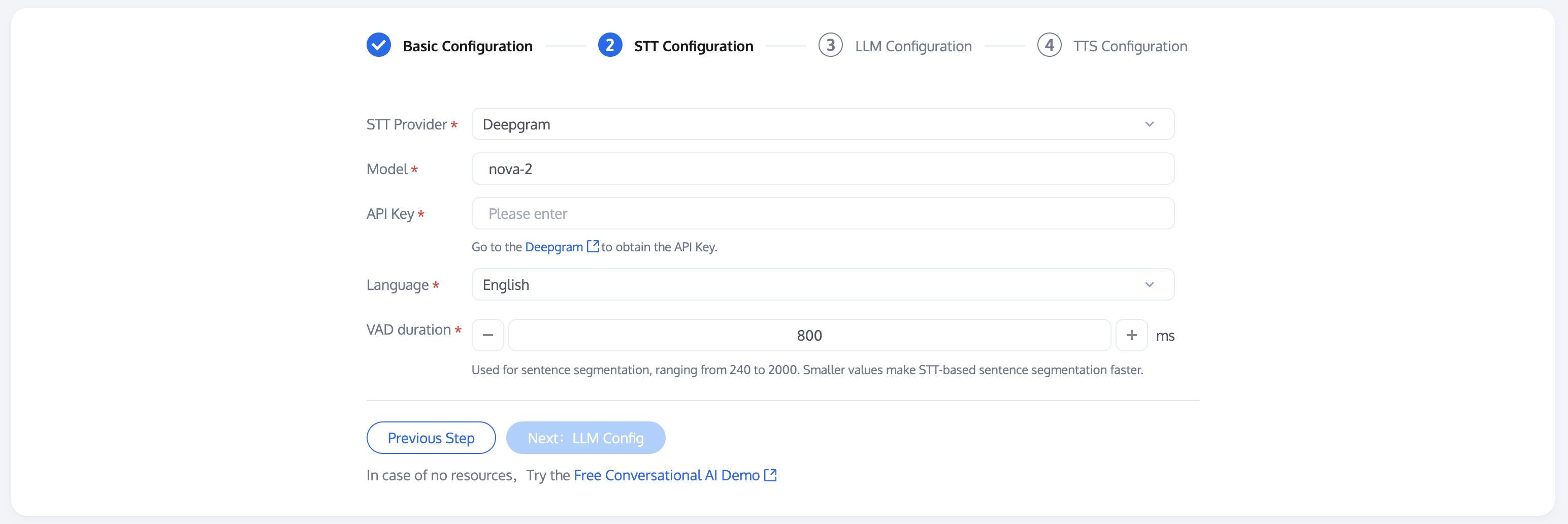

Step 2: STT (Speech to Text) Configuration

For STT (speech recognition), you can choose Tencent, Azure, or Deepgram as the provider. Multiple languages are supported, and the VAD duration is adjustable within a range of 240-2000; a smaller value makes speech recognition segment sentences faster. After completing the configuration, click Next.
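The range rule above can be expressed as a simple check, for example if you keep a copy of your settings in a script for later reuse. The following is a minimal Python sketch; the helper name `check_vad_duration` is hypothetical, and treating the 240-2000 range as milliseconds is an assumption, since this page does not state the unit.

```python
# Hypothetical helper: validates a VAD duration against the 240-2000 range
# documented for the STT step. The unit (milliseconds) is an assumption.
VAD_MIN = 240
VAD_MAX = 2000


def check_vad_duration(value: int) -> int:
    """Return value unchanged if it is within range, else raise ValueError."""
    if not VAD_MIN <= value <= VAD_MAX:
        raise ValueError(
            f"VAD duration {value} is outside the supported range "
            f"{VAD_MIN}-{VAD_MAX}"
        )
    return value


# Smaller values end a sentence segment sooner; larger values wait longer
# for a pause before treating the utterance as finished.
for candidate in (240, 1000, 2500):
    try:
        print(candidate, "accepted:", check_vad_duration(candidate))
    except ValueError as err:
        print(candidate, "rejected:", err)
```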

When selecting Tencent as the STT provider, we provide default parameters for other configurations, and you can fine-tune them according to your needs.

When selecting Azure as the STT provider:

The APIKey can be obtained from the guidance document.

For Region, see Region Support.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

When selecting Deepgram as the STT provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. The APIKey can be obtained from Deepgram.

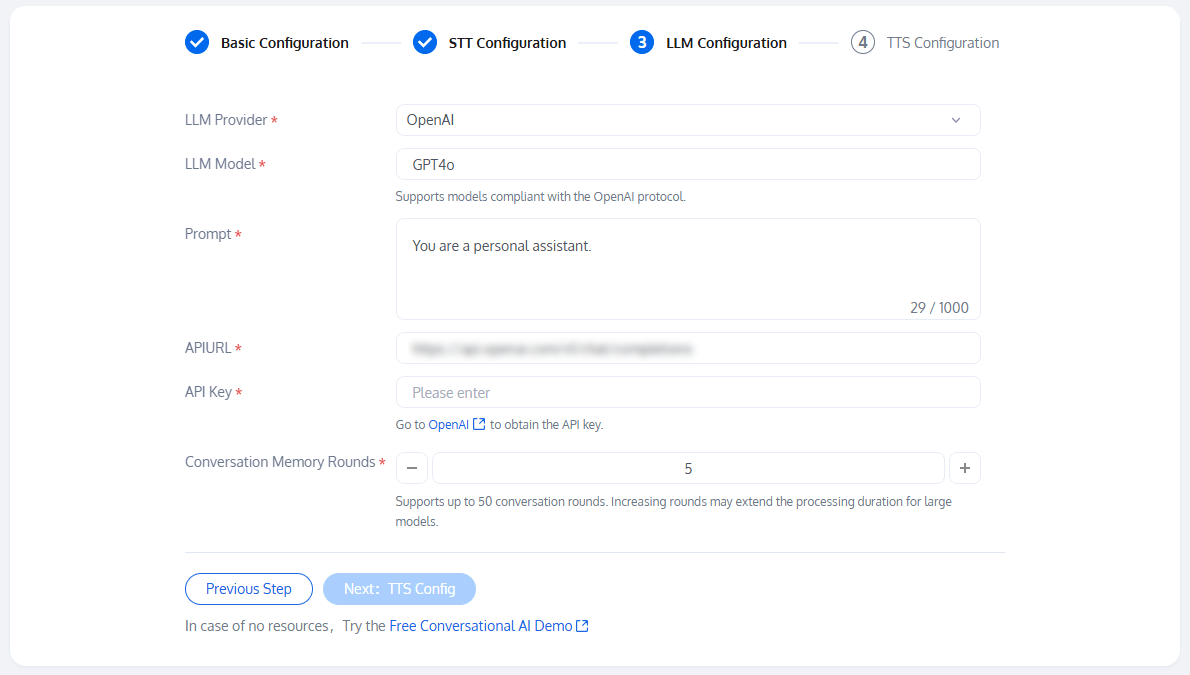

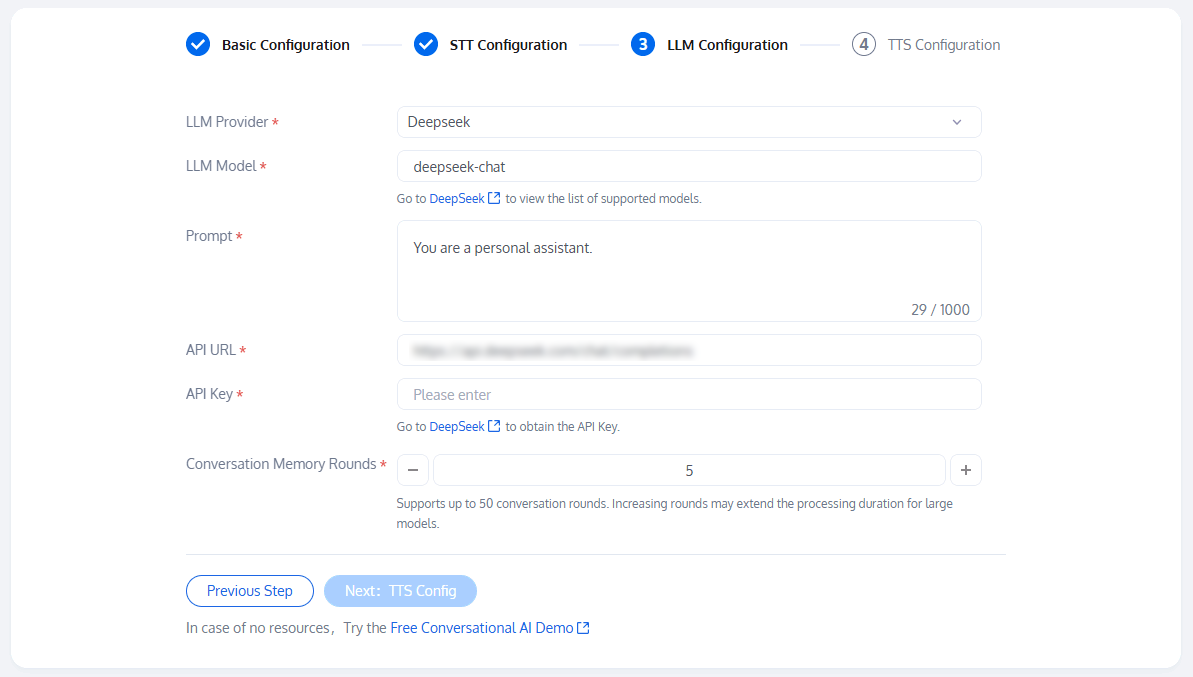

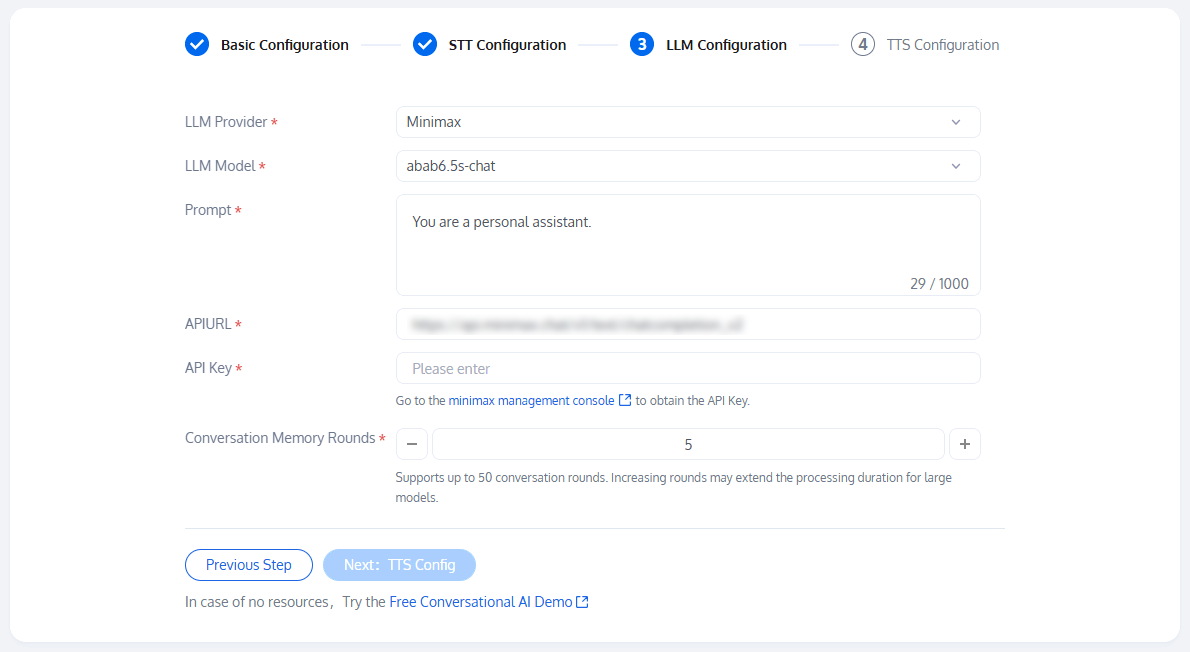

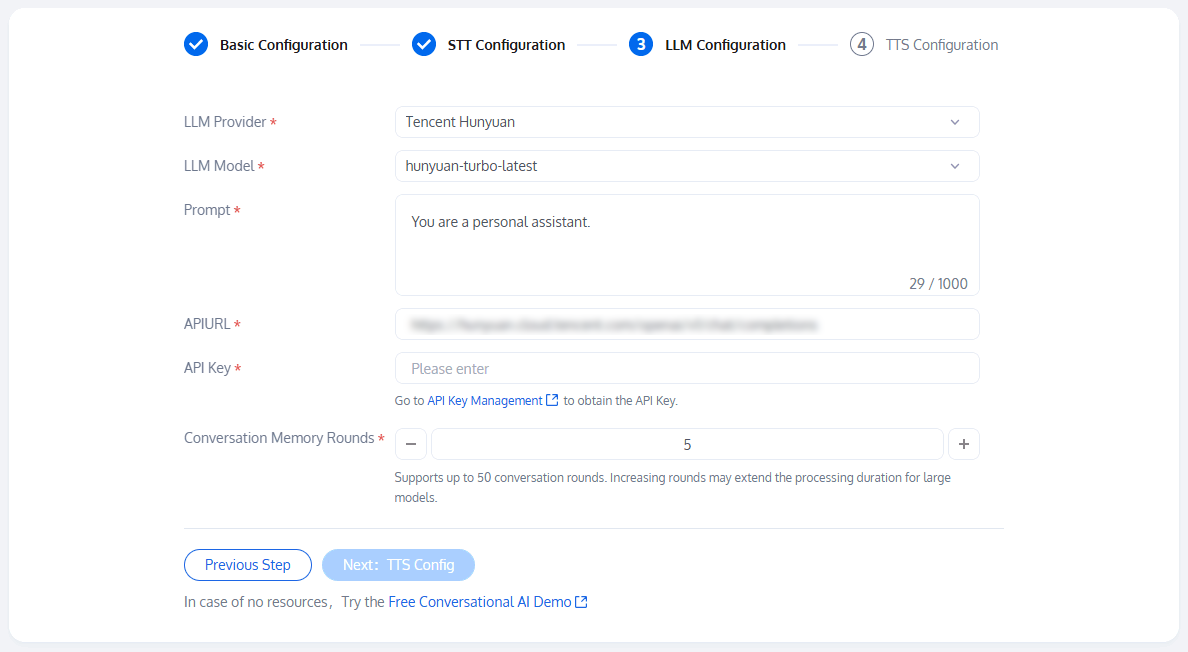

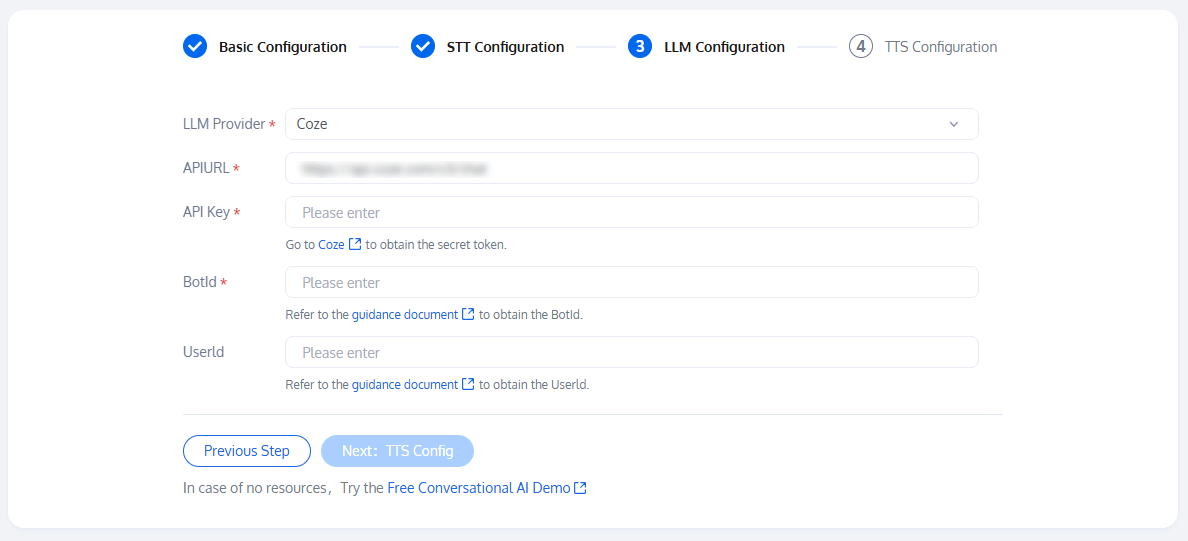

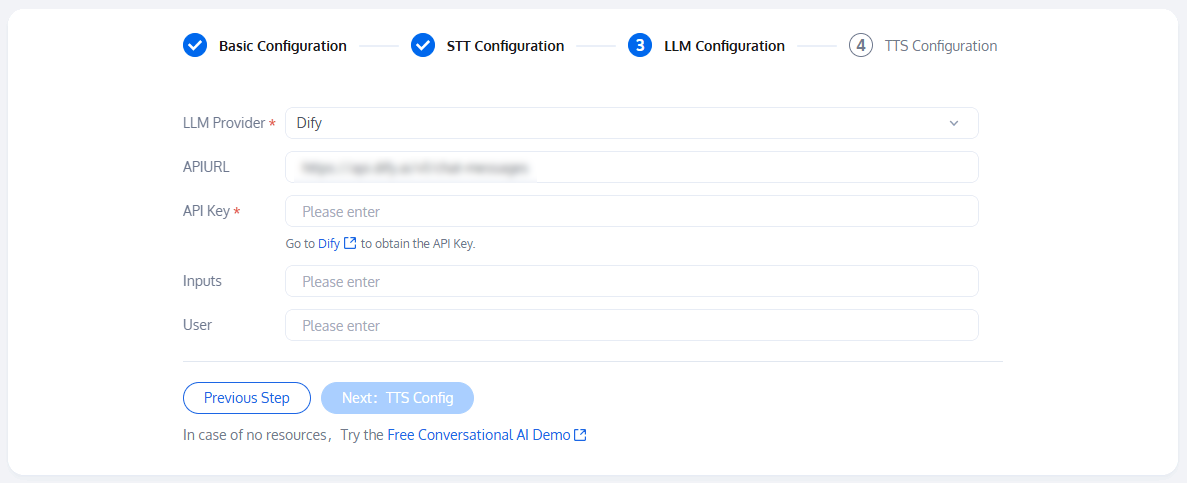

Step 3: LLM (Large Language Model) Configuration

On this page, configure the LLM using resources obtained from a third-party provider. Supported LLM providers are OpenAI, Deepseek, Minimax, Tencent Hunyuan, Coze, and Dify. After completing the configuration, click Next.

When you select OpenAI as the LLM provider, any LLM that is compatible with the OpenAI API protocol is supported, such as models from Google and Anthropic. Adjust the model, Url, and Key parameters below to call the model you want. If you use a model provided by OpenAI, the API Key can be obtained from OpenAI.

When selecting Deepseek as the LLM provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. Check Deepseek's supported model list for the available LLM models; the API Key can be obtained from Deepseek.

Besides the official Deepseek service, you can also call Deepseek deployed on third-party cloud platforms. Because these deployments follow the OpenAI protocol, simply replace the LLM Model, Url, and Key parameters below.
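Because both the OpenAI option and third-party Deepseek deployments rely on the OpenAI protocol, you may want to sanity-check a model, Url, and Key outside the playground with a standard chat completions request. The minimal Python sketch below builds such a request using only the standard library but does not send it. It assumes the Url is an API base such as `https://api.openai.com/v1` to which `/chat/completions` is appended; how the playground itself interprets the Url field is not stated on this page, and `your-model-name` and `sk-your-key` are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       user_text: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completions request."""
    # Assumption: base_url is the API root, e.g. "https://api.openai.com/v1".
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # OpenAI-style bearer auth
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("https://api.openai.com/v1", "sk-your-key",
                         "your-model-name", "Hello")
print(req.full_url)  # https://api.openai.com/v1/chat/completions
```

Building the request separately from sending it keeps the check free of network access; pass the request to `urllib.request.urlopen` when you are ready to call the endpoint.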

When selecting Minimax as the LLM provider, we provide default parameters; you can fine-tune them according to your needs. The LLM model can be selected from the dropdown, and the API Key can be obtained from the Minimax management console.

When selecting Hunyuan as the LLM provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. The LLM model can be freely selected from the dropdown option, and the API Key can be obtained from API Token Management.

When selecting Coze as the LLM provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. For the API Key, get a Secret token from Coze; to obtain the BotId, see the guidance document.

When selecting Dify as the LLM provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. The API Key can be obtained from Dify.

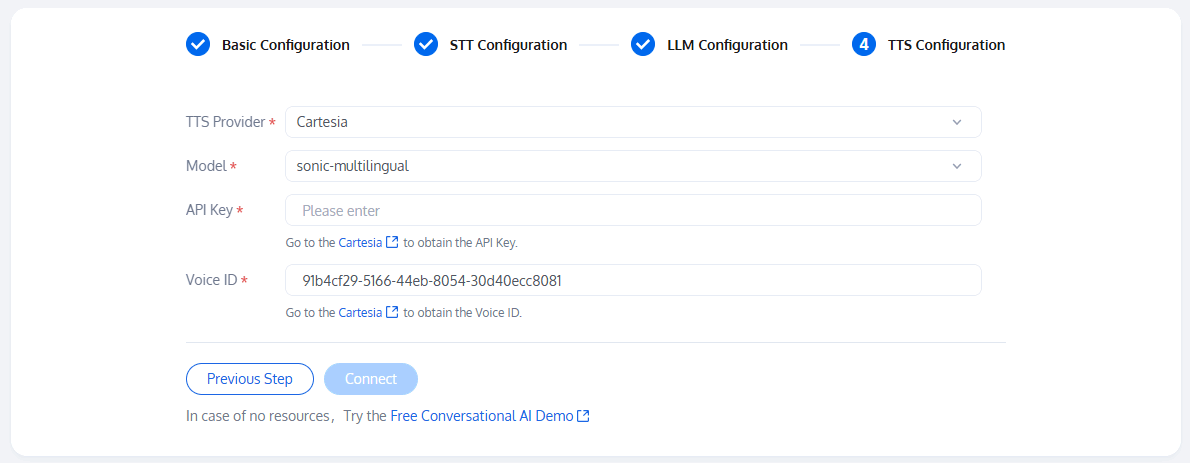

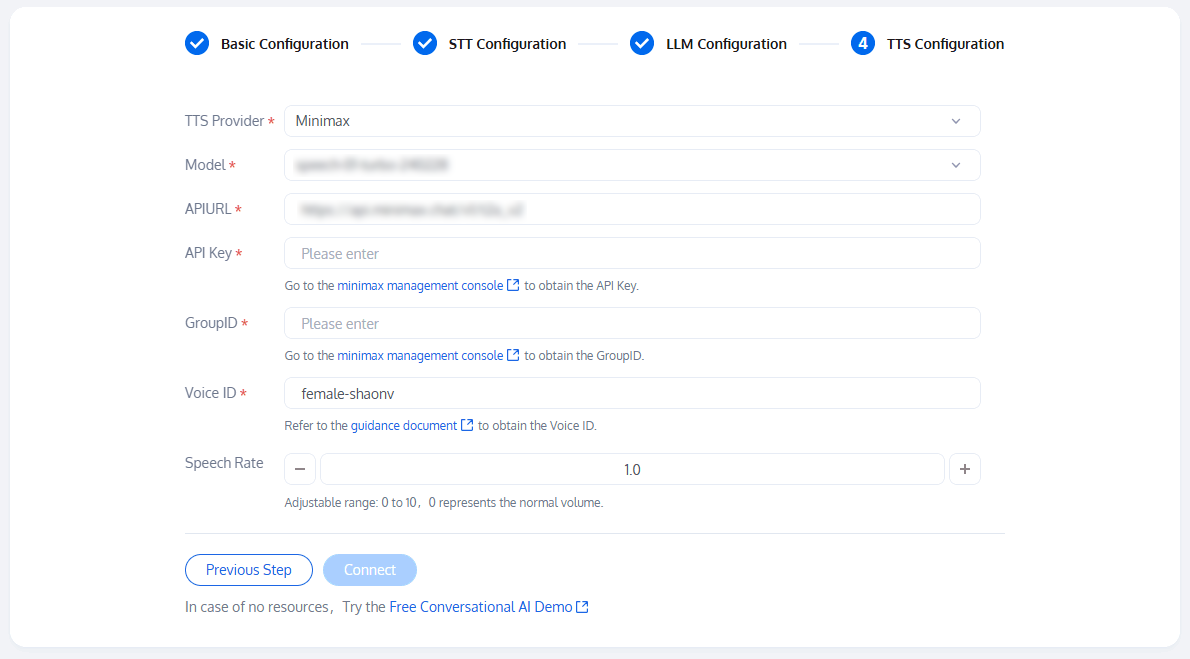

Step 4: TTS (Text to Speech) Configuration

On the TTS (Text to Speech) configuration page, the supported TTS providers are Tencent, Minimax, Azure, Cartesia, ElevenLabs, and custom. After completing the configuration, click Connect. If the configuration is correct, you will enter the Start Conversation page.

When selecting Cartesia as the TTS provider:

The APIKey can be obtained from Cartesia.

The voice ID can be obtained from Cartesia.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

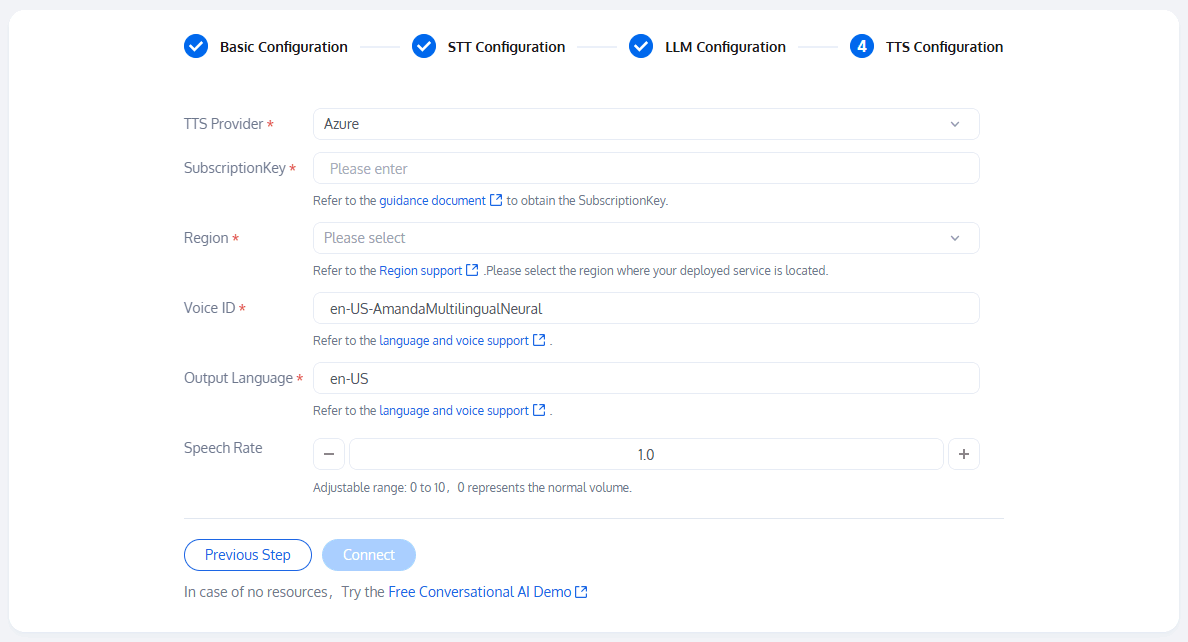

When selecting Azure as the TTS provider:

The SubscriptionKey can be obtained by referring to the guidance document.

For Region, see Region Support.

For language, see Language and Sound Support.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

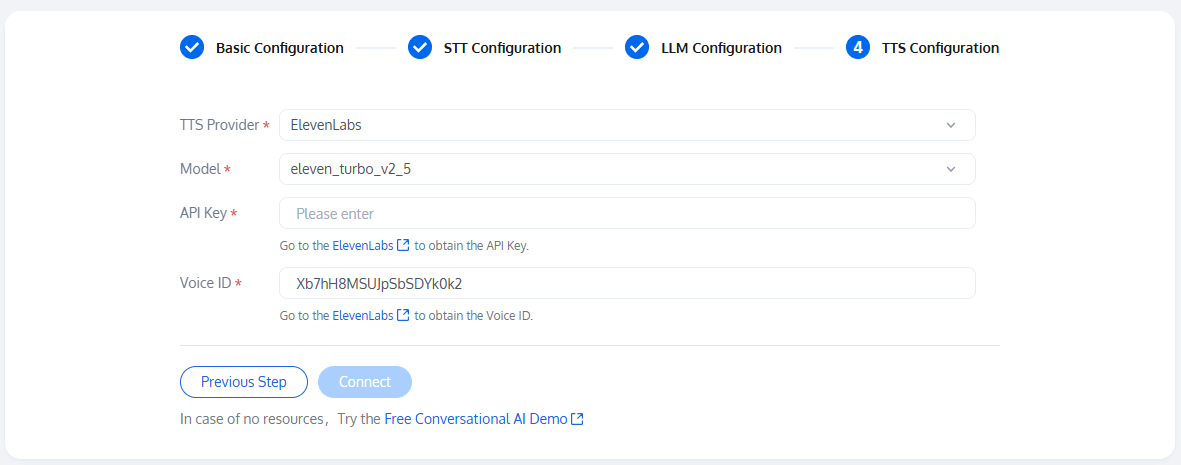

When selecting ElevenLabs as the TTS provider:

The APIKey can be obtained from ElevenLabs.

The voice ID can be obtained from ElevenLabs.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

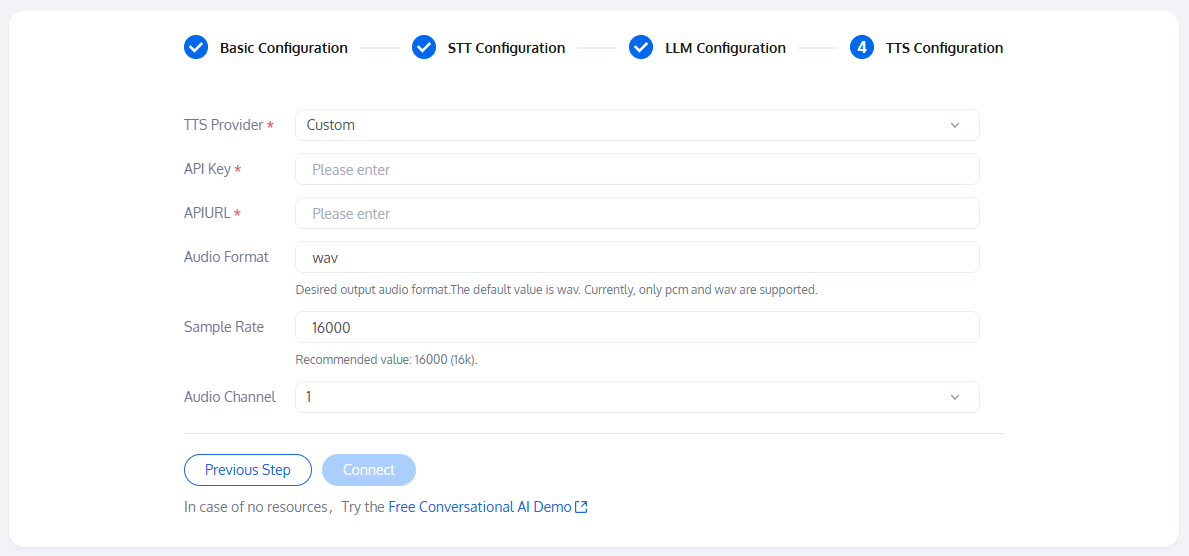

When the TTS provider is set to custom, fill in the API Key and API Url according to your actual setup.

For protocol details, refer to custom TTS protocol configuration.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

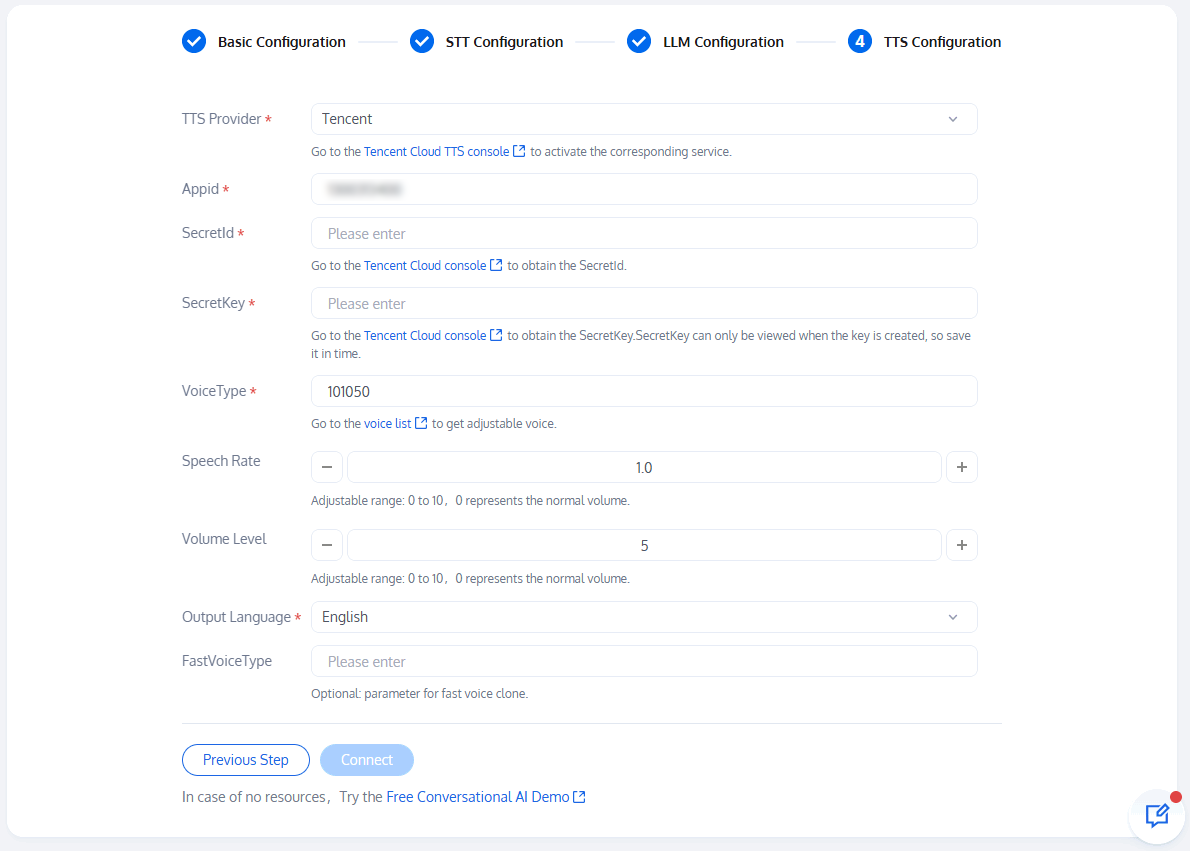

When selecting Tencent as the TTS provider, you must first activate the TTS service for the application to use the speech synthesis feature.

The SecretId can be obtained from the guidance document.

The SecretKey can be obtained from the guidance document. The SecretKey is viewable only when the key is created, so save it promptly.

Voice type can be obtained from the voice list.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

When selecting Minimax as the TTS provider:

The APIKey can be obtained from the Minimax management console.

GroupID can be obtained from the Minimax management console.

The voice ID can be obtained by referring to the guidance document.

We provide default parameters for other configurations; you can fine-tune them according to your needs.

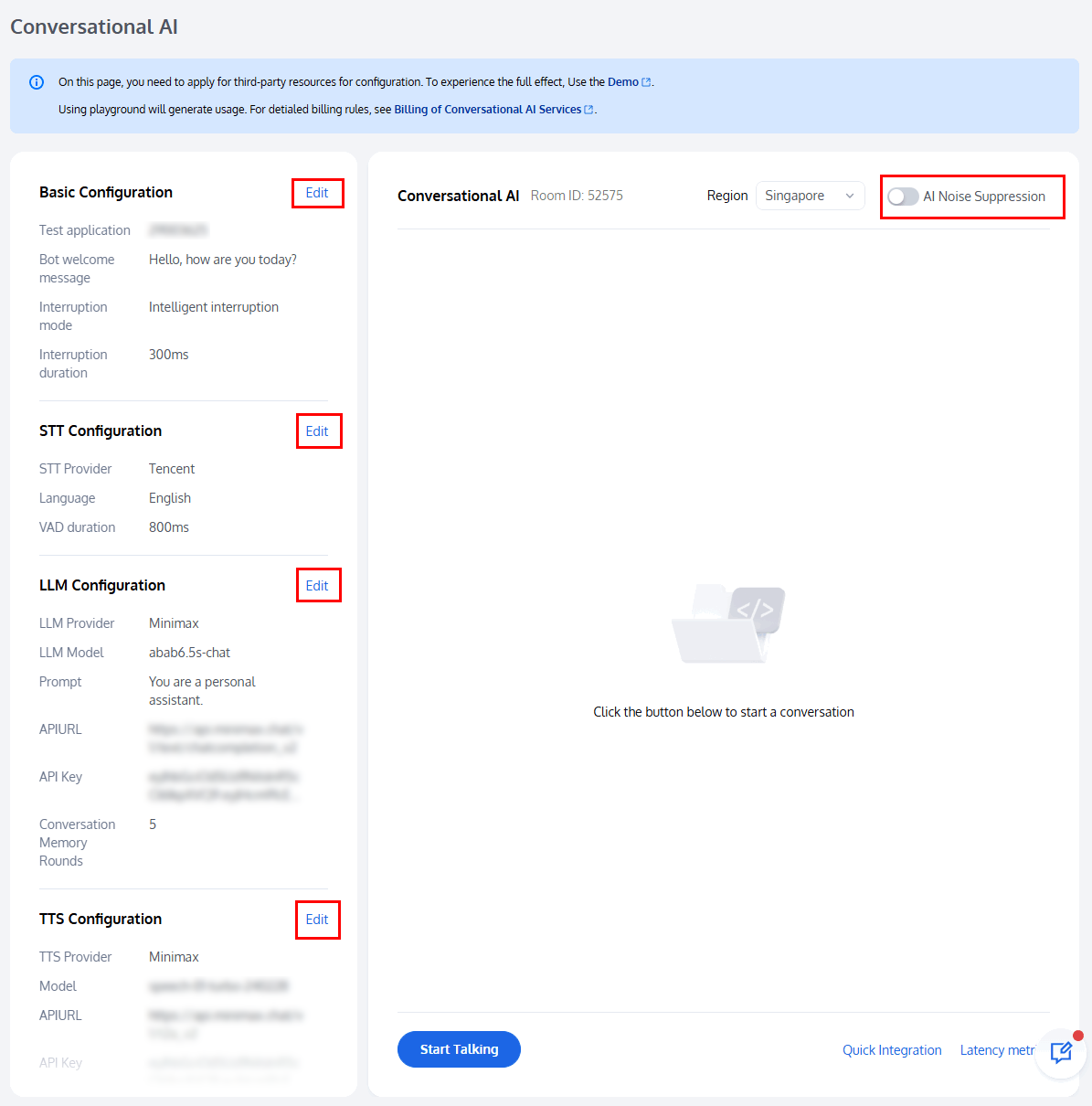
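The credentials listed above for each TTS provider can be summarized as a lookup table, which can serve as a checklist before you click Connect. This is an illustrative Python sketch only: the field names copy the labels used on this page and are not the console's actual parameter names, and `missing_tts_fields` is a hypothetical helper.

```python
# Credentials this page lists for each TTS provider; other fields have
# defaults. Keys mirror this page's labels (an assumption, not the
# console's or any provider API's actual parameter names).
TTS_REQUIRED_FIELDS = {
    "Tencent": ["SecretId", "SecretKey", "Voice type"],
    "Minimax": ["APIKey", "GroupID", "voice ID"],
    "Azure": ["SubscriptionKey", "Region", "language"],
    "Cartesia": ["APIKey", "voice ID"],
    "ElevenLabs": ["APIKey", "voice ID"],
    "custom": ["API Key", "API Url"],
}


def missing_tts_fields(provider: str, config: dict) -> list[str]:
    """Return the required fields that are missing or empty in config."""
    try:
        required = TTS_REQUIRED_FIELDS[provider]
    except KeyError:
        raise ValueError(f"unsupported TTS provider: {provider}") from None
    return [name for name in required if not config.get(name)]


print(missing_tts_fields("Cartesia", {"APIKey": "your-key"}))  # ['voice ID']
```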

Homepage Introduction

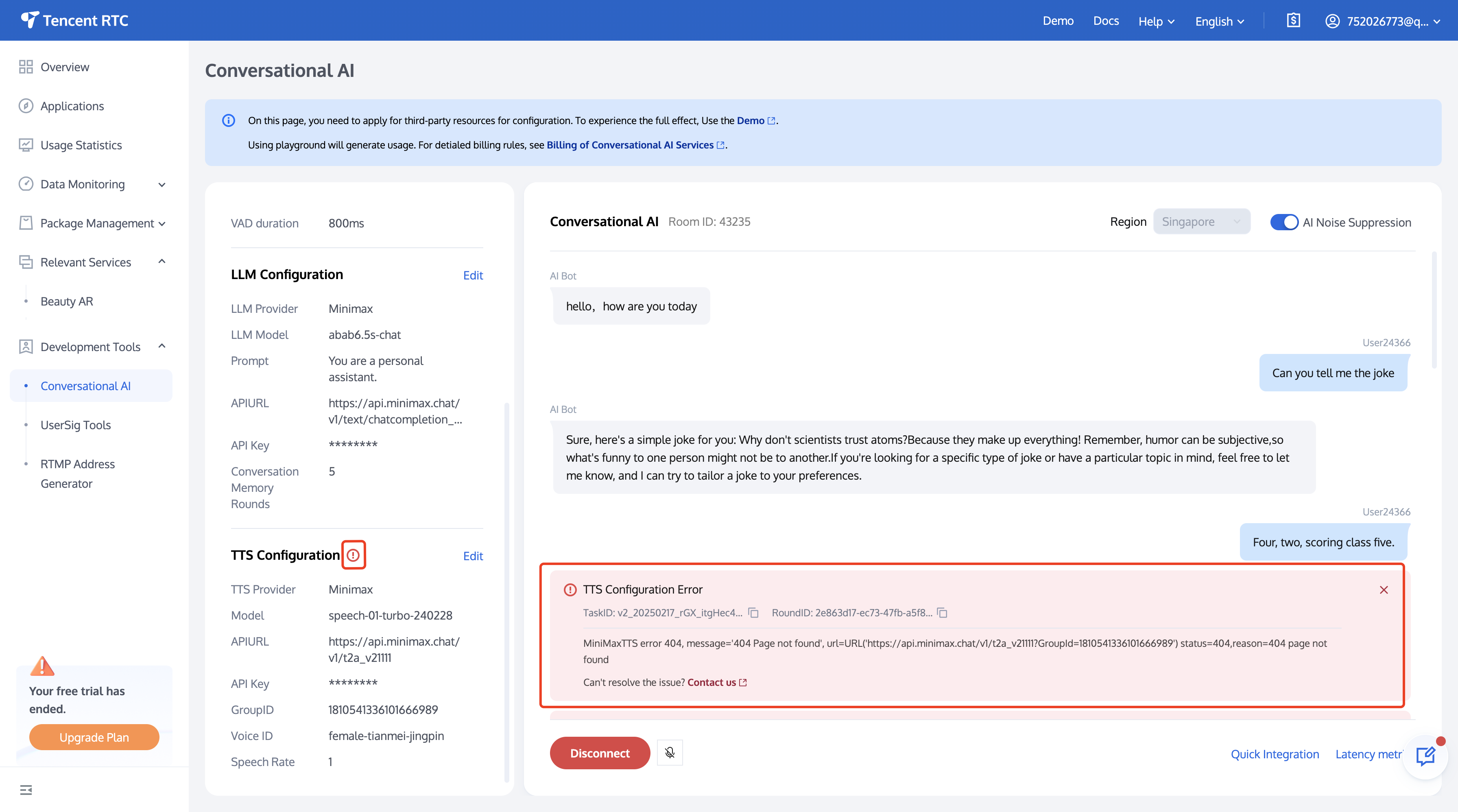

Starting a Conversation

AI noise suppression: To use this feature, activate the Standard or Pro edition of the RTC-Engine Monthly Packages.

During the conversation, you can edit the interruption duration, LLM configuration, and TTS configuration to evaluate their effect.

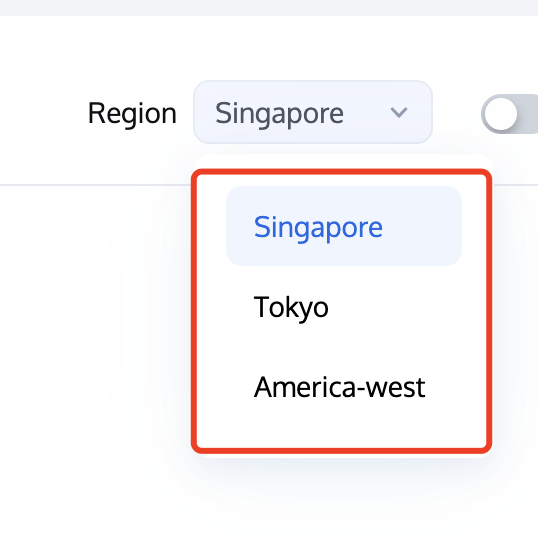

Region: By default, the system uses the nearest access region to reduce latency. You can also select any region manually.

If error codes are displayed during the conversation, note that some of them may block the dialogue; modify the configuration promptly according to the error message. If the problem cannot be resolved, copy the Task ID and Round ID and contact us. Other error codes, such as a response timeout, do not stop the dialogue; handle them as appropriate for your situation.

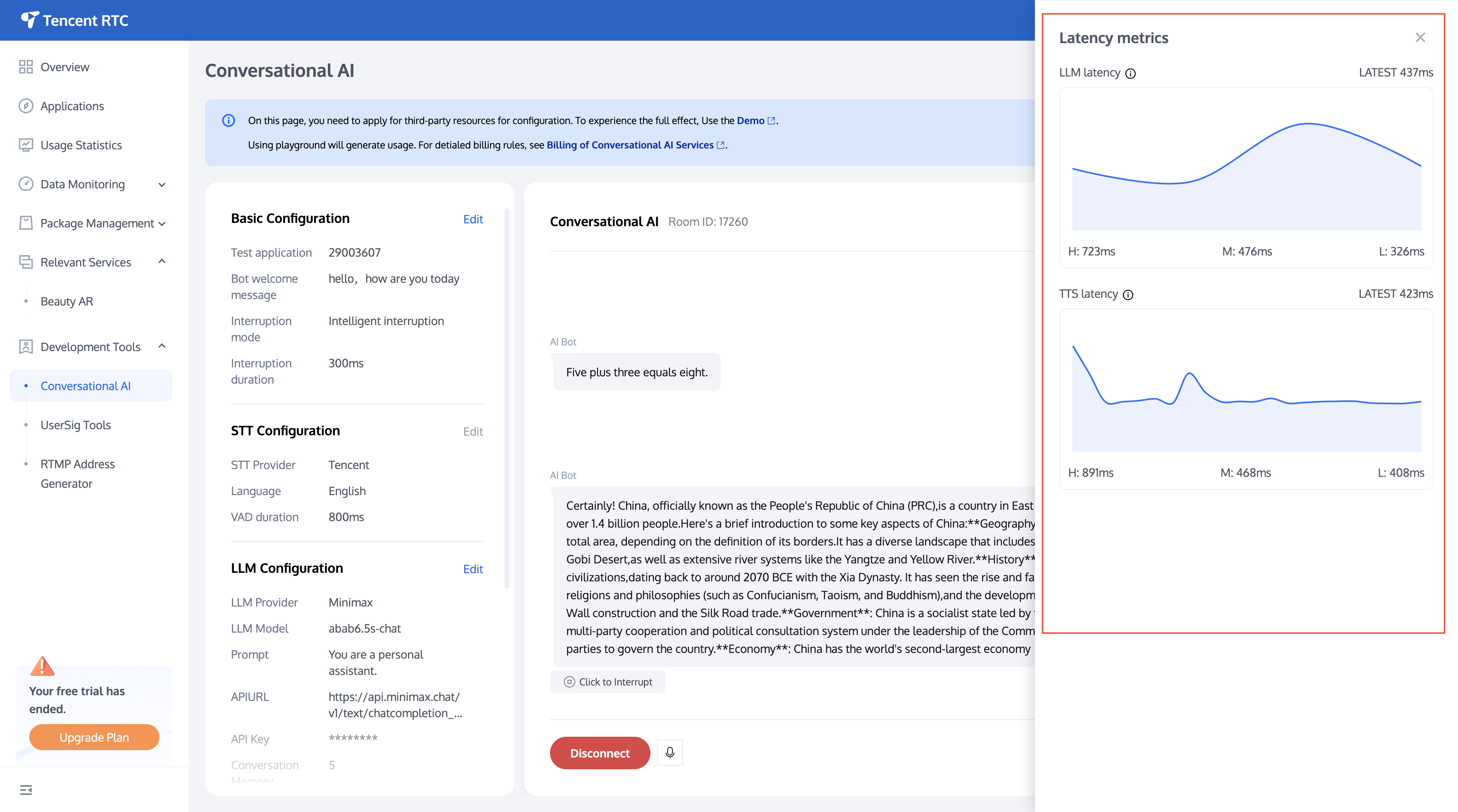
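The error-handling guidance above amounts to a small decision rule: non-blocking errors such as a response timeout need no escalation, blocking errors are fixed through the configuration, and only unresolved blocking errors go to support with the Task ID and Round ID. The Python sketch below encodes that rule. The `DialogueError` record, its fields, and the sample error code are hypothetical, since this page does not describe how error details are exposed; whether an error blocks the dialogue is something you judge from the error prompt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DialogueError:
    """Hypothetical record of an error shown during a conversation."""
    code: str
    message: str
    blocks_dialogue: bool  # assumption: judged from the error prompt
    task_id: str
    round_id: str


def support_report(err: DialogueError, resolved: bool) -> Optional[str]:
    """Return details to send to support, or None if no report is needed."""
    if not err.blocks_dialogue or resolved:
        # Non-blocking errors (e.g. a response timeout) and errors fixed by
        # changing the configuration need no support request.
        return None
    return (f"Task ID: {err.task_id}\n"
            f"Round ID: {err.round_id}\n"
            f"Error: {err.code} {err.message}")


# "TIMEOUT" is a placeholder code, not a documented Tencent RTC error code.
timeout = DialogueError("TIMEOUT", "response timeout", False, "task-1", "round-3")
print(support_report(timeout, resolved=False))  # None
```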

View Latency Metrics

You can check latency metrics at any time during the conversation to help you choose a low-latency model.

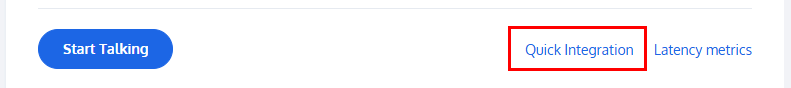
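If you note per-round latency for several models while testing, a short script can pick the fastest one. This Python sketch is an assumption about how you might compare them, not how the console computes its metrics; it uses the median so that a single slow round does not dominate, and the model names and millisecond values are made up for illustration.

```python
from statistics import median


def pick_lowest_latency(samples: dict[str, list[float]]) -> str:
    """Return the model whose median per-round latency is lowest."""
    if not samples:
        raise ValueError("no latency samples")
    return min(samples, key=lambda model: median(samples[model]))


# Illustrative numbers only (ms), not measurements of any real model.
observed = {
    "model-a": [820.0, 760.0, 900.0],
    "model-b": [640.0, 700.0, 1500.0],
}
print(pick_lowest_latency(observed))  # model-b
```

With these sample values, the mean would favor `model-a` (about 827 ms versus about 947 ms), while the median favors `model-b` (700 ms versus 820 ms), which shows how one outlier round can change the choice.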

Quick Integration

You can quickly implement Conversational AI in your local environment. The parameters you entered during configuration are preset, so you do not need to enter them again. Currently, only web is supported.