In Over-the-Top (OTT) content delivery and Real-Time Communication (RTC), the media player is the component that turns received data into the audio and video the end user actually experiences. This blog post explores the inner workings of media players, breaking playback down into four main steps: file acquisition, audio-video separation, decoding and synchronization, and rendering.

1. File Acquisition

The first step in the playback process is acquiring the media file. This can happen in two primary ways:

Network Download: Media is fetched from a remote server over a protocol such as RTMP (Real-Time Messaging Protocol) or HTTP (Hypertext Transfer Protocol).

Local Access: Files are read from the local disk through the operating system's file I/O, typically addressed with a file:// URL.

Once the player obtains the file, it parses the container format to understand how the content is packaged.
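As a rough illustration of that first parsing step, a player can often guess the container from the first bytes of the file. The sketch below is a simplified assumption of how such a check might look: the function name `sniff_container` is hypothetical, it only covers a few of the containers mentioned in this post, and real players use far more robust probing.

```python
def sniff_container(header: bytes) -> str:
    """Guess the container format from the leading bytes of a file.

    Hypothetical helper for illustration only; real probing is more thorough.
    """
    # FLV files begin with the ASCII signature "FLV".
    if header[:3] == b"FLV":
        return "flv"
    # MP4 files normally start with a box whose type (bytes 4-7) is "ftyp".
    if header[4:8] == b"ftyp":
        return "mp4"
    # Matroska (MKV) files start with the EBML header ID.
    if header[:4] == b"\x1a\x45\xdf\xa3":
        return "mkv"
    # AVI is a RIFF file whose form type is "AVI ".
    if header[:4] == b"RIFF" and header[8:12] == b"AVI ":
        return "avi"
    # MPEG-TS is a sequence of 188-byte packets, each starting with 0x47.
    if len(header) >= 189 and header[0] == 0x47 and header[188] == 0x47:
        return "ts"
    return "unknown"


if __name__ == "__main__":
    print(sniff_container(b"FLV\x01\x05\x00\x00\x00\x09"))
    print(sniff_container(b"\x00\x00\x00\x18ftypisom"))
```

Once the container is known, the player can pick the matching demuxer for the next step.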

2. Audio-Video Separation

After acquiring the file, the player needs to separate the different components of the media. This process involves:

Metadata Parsing: The player analyzes the file's metadata to gather information about the content.

Stream Separation: The content is split into separate streams, which may include:

- Video stream

- Audio stream

- Subtitles

- Chapter information

This separation is performed by a module called the splitter or demuxer. It's generally not computationally intensive, as it primarily involves collecting, splitting, and selecting data.
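To make the "collecting, splitting, and selecting" concrete, here is a minimal sketch of a demuxer's routing logic. It assumes the container has already been parsed into packets; the `Packet` type, its fields, and the stream numbering are hypothetical and chosen only for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Set


class Packet(NamedTuple):
    stream_id: int  # hypothetical index assigned to each stream in the container
    pts: int        # presentation timestamp, in milliseconds for this sketch
    payload: bytes  # still-compressed data; the demuxer never decodes it


def demux(packets: Iterable[Packet], selected: Set[int]) -> Dict[int, List[Packet]]:
    """Route interleaved packets into per-stream queues, dropping unselected streams."""
    queues: Dict[int, List[Packet]] = defaultdict(list)
    for pkt in packets:
        if pkt.stream_id in selected:
            queues[pkt.stream_id].append(pkt)
    return dict(queues)


if __name__ == "__main__":
    # Assumed layout: stream 0 = video, 1 = audio, 2 = subtitles.
    interleaved = [
        Packet(0, 0, b"v0"),
        Packet(1, 0, b"a0"),
        Packet(2, 0, b"s0"),
        Packet(0, 40, b"v1"),
    ]
    streams = demux(interleaved, selected={0, 1})
    print({sid: [p.payload for p in pkts] for sid, pkts in streams.items()})
```

Note that the loop only copies references and compares stream IDs, which is why demuxing is cheap compared with decoding.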

3. Decoding and Synchronization

Once the streams are separated, they need to be decoded and synchronized. This process is crucial for ensuring a seamless viewing experience.

Decoding

Different streams are sent to their respective decoders:

- H.264 video streams go to the video decoder

- AAC audio streams go to the audio decoder

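Each decoder decompresses the encoded data into raw form: pixel data (such as YUV) for video and PCM samples for audio. Because codecs such as H.264 and AAC are normally lossy, the result closely approximates the original signal rather than reproducing it exactly.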

Synchronization Challenge

After decoding, we face a critical question: How do we ensure that the separately decoded audio and video streams are perfectly synchronized?

This is where DTS (Decoding Time Stamp) and PTS (Presentation Time Stamp) come into play. These timestamps solve the synchronization problem between audio and video data, and they also help prevent the decoder's input buffer from overflowing or underflowing.

- DTS (Decoding Time Stamp): Indicates when to feed the data into the decoder.

- PTS (Presentation Time Stamp): Indicates when to display the decoded frame.

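DTS and PTS are usually relative times, measured from a reference point such as the start of the stream. For audio, and for video that contains no B frames, the two values are identical; they differ only when frames are decoded in a different order than they are displayed.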

Video Synchronization

For video, each I, P, and B frame carries both a DTS and a PTS. This is necessary because the storage order of frames often differs from their display order: a B frame is predicted from frames both before and after it, so the later reference frame must be stored and decoded first. For example, the storage order might be IPBBBBB..., while the display order is IBBBBBP.... Before display, the frames in the decoder's buffer need to be reordered, and PTS is what makes that reordering possible.
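The IPBBBBB example can be sketched in a few lines. The `Frame` type and the timestamp values below are assumptions made up for illustration (units are frame intervals); a real decoder also reorders with a small bounded buffer rather than sorting a complete list.

```python
from typing import List, NamedTuple


class Frame(NamedTuple):
    kind: str  # "I", "P", or "B"
    dts: int   # when the frame is fed to the decoder
    pts: int   # when the decoded frame should be shown


# Storage/decode order from the example: I P B B B B B.
# The P frame is decoded second (dts=1) but displayed last (pts=7).
decode_order: List[Frame] = [
    Frame("I", dts=0, pts=1),
    Frame("P", dts=1, pts=7),
    Frame("B", dts=2, pts=2),
    Frame("B", dts=3, pts=3),
    Frame("B", dts=4, pts=4),
    Frame("B", dts=5, pts=5),
    Frame("B", dts=6, pts=6),
]


def display_order(frames: List[Frame]) -> List[Frame]:
    """Reorder decoded frames for presentation by sorting on PTS."""
    return sorted(frames, key=lambda f: f.pts)


if __name__ == "__main__":
    print("decode :", "".join(f.kind for f in decode_order))
    print("display:", "".join(f.kind for f in display_order(decode_order)))
```

In this made-up data every frame has a DTS no later than its PTS, since a frame cannot be shown before it has been decoded.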

Audio Synchronization

Audio data also has the concept of frames, though these are artificially defined. Typically, a fixed number of consecutive samples is grouped into an audio frame, and a PTS is attached to that frame. For AAC, 1024 consecutive samples usually form a frame. At a sampling rate of 48,000 Hz, each audio frame lasts approximately 21.33 ms (1024 × 1000 ÷ 48000).
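The same arithmetic gives the PTS of any audio frame. The sketch below is a minimal example with hypothetical function names; it assumes the first frame has a PTS of zero and uses `Fraction` so that rounding errors do not accumulate across frames.

```python
from fractions import Fraction


def audio_frame_duration_ms(samples_per_frame: int, sample_rate_hz: int) -> Fraction:
    """Duration of one audio frame in milliseconds, as an exact fraction."""
    return Fraction(samples_per_frame * 1000, sample_rate_hz)


def audio_frame_pts_ms(frame_index: int,
                       samples_per_frame: int = 1024,
                       sample_rate_hz: int = 48000) -> Fraction:
    """PTS of the n-th frame, assuming the first frame starts at 0 ms."""
    return frame_index * audio_frame_duration_ms(samples_per_frame, sample_rate_hz)


if __name__ == "__main__":
    duration = audio_frame_duration_ms(1024, 48000)
    print(f"frame duration: {float(duration):.2f} ms")
    for n in range(4):
        print(f"frame {n}: PTS = {float(audio_frame_pts_ms(n)):.2f} ms")
```

Because the frame duration is exactly 64/3 ms at 48,000 Hz, every third frame lands on a whole number of milliseconds.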

Synchronization Process

Because audio is sampled at a fixed rate, the PTS of audio frames advances steadily. For that reason, players commonly use the audio PTS as the time reference. As each audio frame is played, the player shows the video frame whose PTS is closest to it, thus achieving audio-video synchronization.

Audio and video frames rarely have exactly matching PTS values. As a rule of thumb, we consider the audio and video to be synchronized if the difference in PTS values is within 200 ms, although viewers can notice smaller lip-sync errors, so a tighter tolerance is preferable where the application allows it.
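A minimal sketch of this audio-as-reference approach is shown below. The function names are hypothetical, timestamps are plain millisecond integers, and the 200 ms tolerance is the figure quoted above rather than a universal constant.

```python
from typing import Sequence

SYNC_THRESHOLD_MS = 200  # tolerance quoted in the text; tune per application


def closest_video_pts(audio_pts_ms: int, video_pts_ms: Sequence[int]) -> int:
    """Pick the PTS of the video frame nearest to the current audio PTS."""
    return min(video_pts_ms, key=lambda v: abs(v - audio_pts_ms))


def is_in_sync(audio_pts_ms: int, video_pts_ms: int,
               threshold_ms: int = SYNC_THRESHOLD_MS) -> bool:
    """Treat the streams as synchronized if their PTS gap is within the threshold."""
    return abs(audio_pts_ms - video_pts_ms) <= threshold_ms


if __name__ == "__main__":
    video_frames = [0, 40, 80, 120]  # assumed 25 fps video, PTS in ms
    audio_pts = 64                   # PTS of the audio frame being played
    chosen = closest_video_pts(audio_pts, video_frames)
    print("show video frame with PTS", chosen)
    print("in sync:", is_in_sync(audio_pts, chosen))
```

A real player would also decide what to do when the gap exceeds the threshold, for example dropping late video frames or holding the current one until the audio clock catches up.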

Troubleshooting Synchronization Issues

If the PTS difference is small but the picture and sound still don't match, the timestamps themselves are probably wrong, which usually indicates a problem during the capture process.

By understanding and properly implementing these synchronization techniques, developers can ensure that OTT and RTC applications deliver a high-quality, synchronized audio-video experience to end-users.

4. Rendering and Display

The final step is rendering the decoded content:

Video Rendering

Decoded video frames, containing color values for each pixel, are sent to a renderer for display on the screen.

Audio Rendering

Decoded audio data, in the form of PCM samples, is sent to the sound card, which converts it to an analog signal for playback through the speakers.

Operating systems usually provide APIs for rendering:

- Windows: DirectX

- Android: OpenGL ES (video) and OpenSL ES (audio)

- iOS: OpenGL ES (video; now deprecated by Apple in favor of Metal) and AudioUnit (audio)

While developers can implement custom rendering, it's generally not recommended due to the complexity involved.

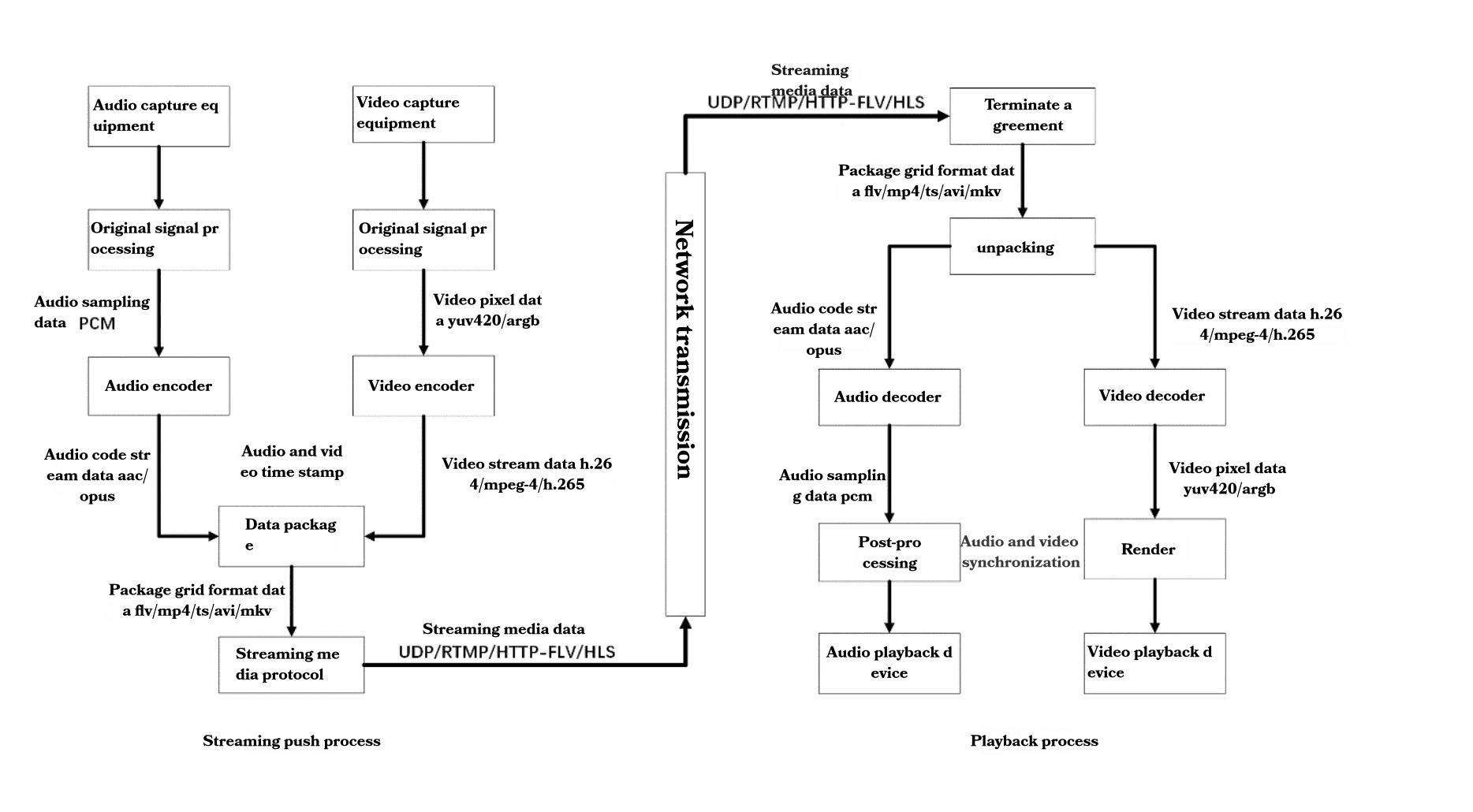

The Complete Audio-Video Flow

To better understand the entire process, let's review the audio-video flow:

Capture:

- Audio captured by microphone

- Video captured by camera

Processing:

- Raw audio data (PCM) is processed

- Raw video data (YUV420/ARGB) is processed

Encoding:

- Audio encoded to formats like AAC/Opus

- Video encoded to formats like H.264/MPEG-4/H.265

Packaging:

- Encoded streams are packaged into containers like FLV/MP4/TS/AVI/MKV

Transmission:

- Packaged data is transmitted using protocols like UDP/RTMP/HTTP-FLV/HLS

Reception and Unpacking:

- Received data is unpacked

Decoding:

- Audio and video streams are decoded back to PCM and YUV420/ARGB respectively

Post-processing and Synchronization:

- Decoded streams are processed and synchronized

Rendering:

- Audio is played through audio playback devices

- Video is displayed on video display devices

Conclusion

Understanding the intricacies of media players is crucial for developers working in OTT and RTC technologies. From file acquisition to rendering, each step plays a vital role in delivering a seamless viewing experience. As technology continues to evolve, staying updated with these processes will be key to developing efficient and high-quality media applications.