Introduction

OpenAI has unveiled the Real Time API (styled "Realtime API" in OpenAI's own documentation), a significant step forward for AI-powered voice interactions that promises to change how developers build, and users experience, conversational AI applications. The Real Time API, currently in public beta and available to all paid developers, enables low-latency, multimodal experiences that closely mimic natural human conversation.

As artificial intelligence continues to evolve at a rapid pace, the introduction of the Real Time API marks a pivotal moment in the industry. It addresses many of the challenges that have long plagued voice-based AI interactions, such as unnatural pauses, lack of context understanding, and the inability to handle interruptions gracefully. By offering a more seamless and intuitive interface between humans and AI, OpenAI is not just improving existing technologies but paving the way for entirely new applications and use cases.

This blog post will delve into the intricacies of the Real Time API, exploring its features, potential applications, and the impact it's likely to have on various industries. We'll also examine the technical aspects behind this innovation, the pricing structure, and the important considerations surrounding safety and privacy. As we stand on the brink of a new era in AI-human interaction, understanding the capabilities and implications of the Real Time API is crucial for developers, businesses, and anyone interested in the future of technology.

What is the Real Time API?

The Real Time API is OpenAI's latest offering designed to enable developers to create sophisticated, voice-based AI applications with unprecedented ease and efficiency. At its core, the Real Time API is a tool that allows for natural speech-to-speech conversations using AI, similar to the Advanced Voice Mode in ChatGPT but with expanded capabilities and flexibility for developers.

Unlike previous methods of creating voice-based AI applications, which often required developers to piece together multiple models for speech recognition, text processing, and speech synthesis, the Real Time API offers a unified solution. It handles the entire process of converting speech to text, processing the input, generating a response, and converting that response back to speech, all through a single API and one persistent connection rather than a chain of separate services.

The key features that set the Real Time API apart include:

Low Latency: The API is designed to minimize the delay between user input and AI response, creating a more natural conversational flow.

Multimodal Capabilities: While primarily focused on voice interactions, the API can handle multiple modes of input and output, including text and potentially visual elements in future updates.

Natural Conversation Handling: The API can manage nuances of human conversation, such as interruptions, pauses, and context shifts, making interactions feel more natural and human-like.

Integration with Advanced AI Models: The Real Time API leverages OpenAI's most advanced language models, including GPT-4o, to provide intelligent and contextually appropriate responses.

Customization Options: Developers can choose from six preset voices and have control over various aspects of the conversation flow, allowing for tailored experiences suited to different applications.

Compared to previous methods, the Real Time API represents a significant advancement. Traditional approaches often resulted in disjointed experiences, where the transition from speech recognition to text processing to speech synthesis was noticeable and often jarring. The Real Time API smooths out these transitions, creating a more cohesive and natural interaction.

Moreover, the API's ability to handle real-time adjustments and interruptions is a major step forward. Previous systems typically required users to speak in complete sentences and wait for a full response before continuing, which felt unnatural and limiting. The Real Time API allows for a more dynamic exchange, much closer to how humans naturally converse.

Why Choose Tencent RTC for Conversational AI?

Looking to build a powerful, no-code AI Voice Assistant? Tencent RTC is your ultimate solution! With its unmatched versatility, ease of use, and cutting-edge features, Tencent RTC makes implementing Conversational AI a breeze. Here’s why it stands out:

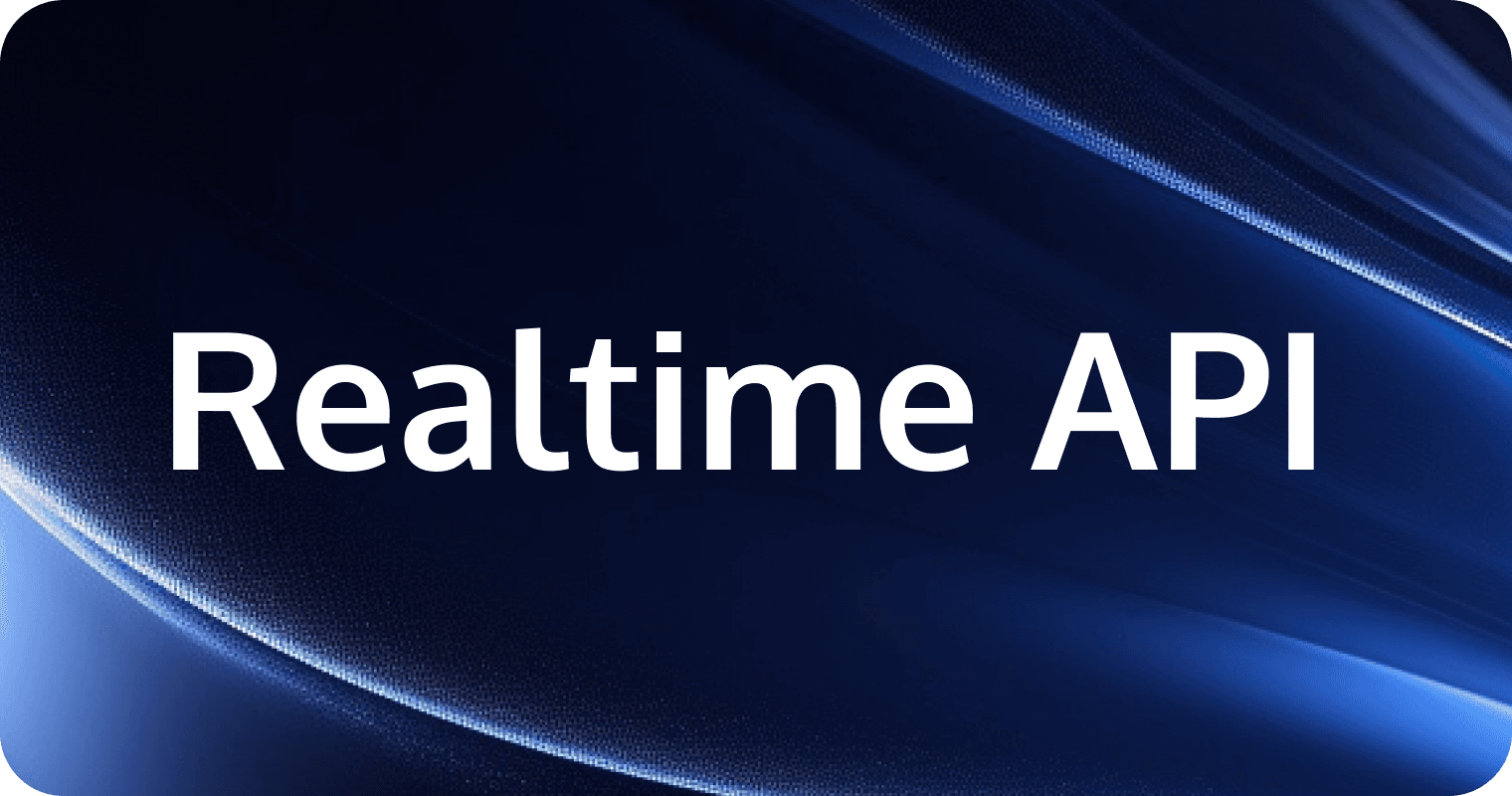

1. Seamless Integration with Multiple AI Services

Tencent RTC supports integration with a wide range of STT, LLM, and TTS providers, including Azure, Deepgram, OpenAI, DeepSeek, Minimax, Claude, Cartesia, ElevenLabs, and more. This flexibility allows you to choose the best services for your specific use case. When OpenAI is selected as the LLM provider, any model that exposes an OpenAI-compatible API endpoint is supported, including Claude and Google Gemini.

2. No-Code Configuration

Tencent RTC simplifies the setup process with a user-friendly interface, enabling you to configure Conversational AI in just a few minutes. No extensive coding knowledge is required, making it accessible to everyone.

3. Real-Time Interruption Support

Users can interrupt the AI's response at any time, enhancing the fluidity and naturalness of conversations.

4. Advanced Features

AI Noise Suppression: Ensures clear audio input, even in noisy environments.

Latency Monitoring: Tracks real-time performance to optimize conversation flow, including LLM latency and TTS latency.

Switch Providers on the Fly: Without ending the conversation, you can modify the interruption duration, or switch between different LLM and TTS providers (and voice IDs) to experiment with various configurations.

5. Multi-Platform Integration

Tencent RTC also supports local development and deployment across Web, iOS, and Android platforms, providing flexibility for diverse applications.

Ready to see it in action? Watch this video or follow the tutorial to start building your AI Voice Assistant today!

Key Capabilities

The Real Time API boasts several key capabilities that set it apart in the field of AI-powered voice interactions:

Low-Latency, Multimodal Experiences

One of the most significant features of the Real Time API is its ability to provide low-latency responses. This means that the delay between a user's input and the AI's response is minimized, creating a more natural and fluid conversation. The API achieves this through efficient processing and streaming of both input and output, allowing for near-real-time interactions.

The multimodal aspect of the API refers to its ability to handle different types of input and output. While the primary focus is on voice interactions, the API can also process text inputs and generate text outputs. This flexibility allows developers to create applications that can seamlessly switch between voice and text-based interactions, catering to different user preferences and situations.

Natural Speech-to-Speech Conversations

The Real Time API excels in facilitating natural speech-to-speech conversations. It uses advanced speech recognition technology to accurately transcribe user speech, processes this input using state-of-the-art language models, and then generates a response that is converted back into speech. The result is a conversation that feels remarkably human-like.

What sets this apart from previous technologies is the API's ability to handle the nuances of natural conversation. It can recognize and respond to:

- Interruptions: The API can process and respond to user interruptions mid-sentence, much like a human would in a real conversation.

- Pauses and Hesitations: It understands natural pauses and hesitations in speech, not treating them as the end of an input.

- Context and Continuity: The API maintains context throughout the conversation, allowing for more coherent and relevant exchanges.
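On the client side, handling an interruption usually means discarding assistant audio that has been received but not yet played. The sketch below illustrates that idea only; `PlaybackQueue` and its method names are hypothetical and are not part of the Real Time API, and a real application would call `on_user_speech_started` from whatever signal tells it the user has begun talking.

```python
from collections import deque
from typing import Optional


class PlaybackQueue:
    """Holds assistant audio chunks that were received but not yet played."""

    def __init__(self) -> None:
        self._chunks = deque()
        self.interrupted = False

    def enqueue(self, chunk: bytes) -> None:
        """Buffer one chunk of assistant audio for playback."""
        self._chunks.append(chunk)

    def next_chunk(self) -> Optional[bytes]:
        """Return the next chunk to play, or None if nothing is buffered."""
        return self._chunks.popleft() if self._chunks else None

    def on_user_speech_started(self) -> int:
        """Drop unplayed audio when the user barges in; return chunks dropped."""
        dropped = len(self._chunks)
        self._chunks.clear()
        self.interrupted = dropped > 0
        return dropped


queue = PlaybackQueue()
for chunk in (b"a", b"b", b"c"):
    queue.enqueue(chunk)

first = queue.next_chunk()                 # the assistant starts speaking
dropped = queue.on_user_speech_started()   # the user interrupts mid-response
```

Clearing the buffer immediately is what makes the assistant appear to stop talking the moment the user speaks, instead of finishing a response that is no longer relevant.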

Integration with GPT-4o Model

The Real Time API is powered by OpenAI's GPT-4o model, which represents the cutting edge in language understanding and generation. This integration allows the API to leverage:

- Advanced Language Understanding: GPT-4o's deep comprehension of language nuances enables more accurate interpretation of user inputs.

- Contextual Response Generation: The model can generate responses that are not only relevant to the immediate input but also consider the broader context of the conversation.

- Multilingual Capabilities: With GPT-4o's multilingual abilities, the Real Time API can potentially support conversations in multiple languages.

Technical Aspects

The Real Time API's advanced capabilities are underpinned by several key technical aspects:

WebSocket Connection

At the heart of the Real Time API's functionality is its use of WebSocket connections. WebSocket is a protocol that provides full-duplex communication channels over a single TCP connection. This is crucial for the Real Time API because it allows:

- Continuous, Two-Way Communication: Unlike traditional HTTP requests, WebSocket connections remain open, allowing for real-time data exchange between the client and server.

- Low Latency: The persistent connection minimizes the overhead of establishing new connections for each interaction, significantly reducing latency.

- Efficient Streaming: WebSocket enables efficient streaming of both audio input and output, crucial for the API's speech-to-speech functionality.

Developers can create a persistent WebSocket connection to exchange messages with GPT-4o, enabling the real-time, interactive nature of the conversations.
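As a rough illustration, the snippet below prepares what a client would need before opening such a connection: the URL, the headers, and one JSON event to send. The endpoint, the `OpenAI-Beta` header, the model name, and the `response.create` event shape are assumptions based on OpenAI's public beta documentation and may change, so check the current reference before relying on them. The snippet deliberately stops short of opening a socket, which would require a WebSocket client library.

```python
import json
import os

# Assumed values taken from OpenAI's beta documentation; verify before use.
REALTIME_URL = "wss://api.openai.com/v1/realtime"
MODEL = "gpt-4o-realtime-preview"  # assumed model name


def build_connection(api_key: str, model: str = MODEL):
    """Return the WebSocket URL and headers for a Real Time API session."""
    url = f"{REALTIME_URL}?model={model}"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Beta": "realtime=v1",
    }
    return url, headers


def response_create_event(instructions: str) -> str:
    """Serialize a client event asking the model for a text and audio reply."""
    event = {
        "type": "response.create",
        "response": {
            "modalities": ["text", "audio"],
            "instructions": instructions,
        },
    }
    return json.dumps(event)


url, headers = build_connection(os.environ.get("OPENAI_API_KEY", "sk-placeholder"))
message = response_create_event("Greet the user briefly.")
# A WebSocket client would open `url` with `headers`, send `message`, and then
# keep reading server events from the same connection as the reply streams in.
```

Because the connection stays open, the same socket carries every later event in both directions, which is where the latency savings described above come from.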

Function Calling Support

The Real Time API includes support for function calling, a powerful feature that extends the capabilities of voice assistants beyond simple conversation. Function calling allows the AI to:

- Trigger Actions: The AI can execute predefined functions or commands based on user input. For example, in a smart home application, the AI could turn on lights or adjust thermostats in response to voice commands.

- Retrieve Information: The AI can call functions to fetch real-time data or information from external sources, enhancing its ability to provide up-to-date and relevant responses.

- Personalize Responses: By calling functions to access user-specific data or preferences, the AI can tailor its responses to individual users.

This feature significantly expands the practical applications of voice assistants, allowing them to not just converse but also perform actions and integrate with other systems and services.
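To make the idea concrete, here is a minimal sketch of the application side of function calling: the model names a function and supplies JSON arguments, and the application looks the function up, runs it, and returns a JSON result. The smart-home functions, the `TOOLS` registry, and `dispatch` are all hypothetical names invented for this example; how the call arrives from the API and how the result is sent back are left out.

```python
import json

# Hypothetical smart-home state and functions, for illustration only.
home_state = {"lights": "off", "thermostat_c": 20.0}


def set_lights(state: str) -> dict:
    """Turn the lights on or off."""
    home_state["lights"] = state
    return {"lights": home_state["lights"]}


def set_thermostat(celsius: float) -> dict:
    """Set the target temperature in degrees Celsius."""
    home_state["thermostat_c"] = float(celsius)
    return {"thermostat_c": home_state["thermostat_c"]}


# Registry mapping the function names exposed to the model to Python callables.
TOOLS = {
    "set_lights": set_lights,
    "set_thermostat": set_thermostat,
}


def dispatch(name: str, arguments_json: str) -> str:
    """Run the named function with JSON-encoded arguments; return a JSON result."""
    handler = TOOLS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        result = handler(**json.loads(arguments_json))
    except (TypeError, ValueError) as exc:
        # Malformed JSON or wrong argument names come back as an error payload.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


reply = dispatch("set_lights", '{"state": "on"}')
```

Returning errors as data rather than raising lets the assistant explain the failure to the user in the same conversation.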

Audio Input and Output in Chat Completions API

While the Real Time API focuses on low-latency, real-time interactions, OpenAI is also introducing audio capabilities to its Chat Completions API. This update allows developers to:

- Pass Audio Inputs: Developers can send audio inputs directly to the API, which will handle the speech-to-text conversion internally.

- Receive Audio Outputs: The API can generate responses in audio format, handling the text-to-speech conversion.

- Flexible Response Formats: Developers can choose to receive responses in text, audio, or both, providing flexibility in how the AI's output is used in applications.

This integration simplifies the development process for applications that don't require the real-time, low-latency benefits of the Real Time API but still want to incorporate voice interactions.
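A request of this kind might be assembled roughly as follows. The model name, the `modalities` and `audio` fields, and the `input_audio` content part are assumptions based on OpenAI's published Chat Completions documentation, so treat the exact field names as something to confirm against the current reference. The helper `build_audio_chat_request` is a hypothetical name, and the snippet only builds the request body without sending it.

```python
import base64
import json


def build_audio_chat_request(wav_bytes: bytes, want_audio: bool = True) -> dict:
    """Build a Chat Completions request body containing one spoken user message."""
    body = {
        "model": "gpt-4o-audio-preview",  # assumed model name
        # Ask for text only, or for text plus a spoken reply.
        "modalities": ["text", "audio"] if want_audio else ["text"],
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "input_audio",
                        "input_audio": {
                            # Audio is sent inline as base64-encoded bytes.
                            "data": base64.b64encode(wav_bytes).decode("ascii"),
                            "format": "wav",
                        },
                    }
                ],
            }
        ],
    }
    if want_audio:
        body["audio"] = {"voice": "alloy", "format": "wav"}
    return body


# Placeholder bytes stand in for a real WAV recording.
request_body = build_audio_chat_request(b"RIFF....WAVE")
payload = json.dumps(request_body)
```

Passing `want_audio=False` covers the text-only case, which corresponds to the flexible response formats described above.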

Use Cases and Applications

The Real Time API opens up a wide range of possibilities for AI-powered voice applications across various industries. Here are some key use cases and potential applications:

Customer Support

The Real Time API can revolutionize customer support by enabling more natural and efficient AI-powered support systems:

- Virtual Agents: Companies can deploy virtual customer service agents capable of handling complex queries in real-time, providing 24/7 support.

- Multilingual Support: With the API's language capabilities, businesses can offer support in multiple languages without the need for human translators.

- Emotion Recognition: Advanced AI models could potentially recognize customer emotions through voice analysis, allowing for more empathetic responses.

Example: A telecommunications company could use the Real Time API to create a virtual assistant that helps customers troubleshoot network issues, change plans, or answer billing queries, all through natural voice conversations.

Language Learning

The API's natural conversation capabilities make it an excellent tool for language learning applications:

- Conversational Practice: Language learners can engage in realistic conversations with AI, practicing speaking and listening skills in a safe, judgment-free environment.

- Personalized Lessons: The AI can adapt its language complexity and topics based on the learner's proficiency level and interests.

- Pronunciation Feedback: With advanced speech recognition, the API could provide real-time feedback on pronunciation and intonation.

Example: A language learning app could use the Real Time API to create an AI tutor that engages learners in conversations about their hobbies, providing corrections and explanations in real-time.

Personal Assistants

The Real Time API can enhance personal assistant applications, making them more intuitive and capable:

- Complex Task Handling: Assistants powered by the API can manage multi-step tasks, understanding context and maintaining conversation threads.

- Voice-Controlled Smart Home Integration: The function calling feature allows for seamless integration with smart home devices.

- Personalized Recommendations: By understanding user preferences over time, the AI can provide more accurate and relevant suggestions.

Example: A smart home assistant could use the Real Time API to allow users to control their home environment, manage schedules, and receive personalized news and entertainment recommendations, all through natural voice commands.

Educational Tools

In the education sector, the Real Time API can facilitate more interactive and engaging learning experiences:

- Interactive Tutoring: AI-powered tutors can provide personalized instruction across various subjects, answering questions and explaining concepts in real-time.

- Accessibility Support: The API can help create tools that assist students with learning disabilities or visual impairments, providing audio-based learning materials and explanations.

- Language Arts Practice: Students can practice reading aloud, receiving real-time feedback on pronunciation, fluency, and comprehension.

Example: An educational platform could use the API to create an AI tutor that listens to students read aloud, offering immediate feedback on pronunciation and comprehension, and engaging in discussions about the text.

Pricing and Availability

OpenAI prices the Real Time API by usage, with separate per-token rates for text and audio that reflect the advanced capabilities and resources required to power this technology. Understanding the pricing model is crucial for developers and businesses considering integrating this API into their applications.

Current Pricing Structure

The Real Time API uses a combination of text tokens and audio tokens for pricing:

- Text Tokens:
  - Input: $5 per 1 million tokens
  - Output: $20 per 1 million tokens

- Audio Tokens:
  - Input: $100 per 1 million tokens
  - Output: $200 per 1 million tokens

In practical terms, this translates to approximately:

- $0.06 per minute of audio input

- $0.24 per minute of audio output

It's important to note that these prices are for the beta version and may be subject to change as the API moves towards general availability.
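For budgeting, the quoted beta rates can be turned into a quick estimate. The sketch below uses only the figures listed above; `estimate_cost` is a hypothetical helper and the three-minute call is an invented example, so actual bills will depend on how many tokens a conversation really consumes.

```python
# Beta prices quoted above, in US dollars.
AUDIO_INPUT_PER_MINUTE = 0.06
AUDIO_OUTPUT_PER_MINUTE = 0.24
TEXT_INPUT_PER_MILLION = 5.00
TEXT_OUTPUT_PER_MILLION = 20.00


def estimate_cost(input_minutes, output_minutes, text_in_tokens=0, text_out_tokens=0):
    """Estimate the cost of one conversation in dollars, rounded to 4 places."""
    audio = (
        input_minutes * AUDIO_INPUT_PER_MINUTE
        + output_minutes * AUDIO_OUTPUT_PER_MINUTE
    )
    text = (
        text_in_tokens * TEXT_INPUT_PER_MILLION
        + text_out_tokens * TEXT_OUTPUT_PER_MILLION
    ) / 1_000_000
    return round(audio + text, 4)


# Hypothetical call: the user speaks for 3 minutes, the assistant for 2.
call_cost = estimate_cost(3, 2)
print(f"Estimated cost: ${call_cost:.2f}")
```

Read against the per-token prices, the per-minute figures imply roughly 600 audio tokens per minute of input and 1,200 per minute of output, which is a handy sanity check when comparing the two ways of quoting the price.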

Tencent RTC Conversational AI Solutions

Tencent RTC Conversational AI Solutions are cutting-edge products that combine real-time communication technology with advanced artificial intelligence to create seamless, interactive experiences. These solutions leverage Automatic Speech Recognition (ASR), Text-to-Speech (TTS), Large Language Models (LLM), and Retrieval-Augmented Generation (RAG) to enable natural, intelligent conversations across various applications. From educational tools and virtual companions to productivity assistants and customer service enhancements, Tencent's offerings provide ultra-low latency, emotionally intelligent interactions, and customizable knowledge bases.

Use conversational AI to provide a more personalized educational experience

- AI Speaking Coach

- AI Virtual Teacher

- Character Dialogue

- Scenario Practice

Integrating voice capabilities allows for the creation of virtual teaching assistants that mimic real-time human interaction, providing personalized instruction and responsive feedback within educational scenarios.

Elevate social interactions and entertainment experiences with conversational AI

- Virtual AI Companion

- Character AI Dialogue

- Interactive Game

- Metaverse

By combining conversational AI with real-time interaction capabilities, these applications understand user intentions and provide corresponding feedback, delivering a more realistic and personalized social entertainment experience.

Streamline workflows and boost efficiency with conversational AI

- Voice Search Assistant

- Voice Translation Assistant

- Schedule Assistant

- Office Assistant

Voice-activated productivity tools enable users to command and control applications with their voice, increasing efficiency and reducing manual input.

Elevate call center operations with conversational AI

- AI Customer Service

- AI Sales Consultant

- Intelligent Outbound Calling

- E-commerce Assistant

Conversational AI in call centers, powered by RAG and voice interaction, provides a rich, real-time customer service experience. It reduces costs and enhances service efficiency.

Why Choose Tencent RTC Conversational AI Solutions?

- Achieve Natural Dialogue with AI: ASR + TTS technology ensures clear speech recognition and precise text-to-speech output, with a variety of voice options for a personalized communication experience.

- Ultra-low Latency Communication: Global end-to-end latency for voice and video transmission between the model and users is less than 300ms, ensuring smooth and uninterrupted communication.

- Deliver Precise and Stable Conversational AI: LLM + RAG integration allows users to upload their knowledge bases to reduce misinformation and achieve more targeted and stable conversational AI.

- Emotional Communication Experience: Sentiment analysis and interruption handling accurately recognize and respond to user emotions, providing an emotionally rich communication experience.