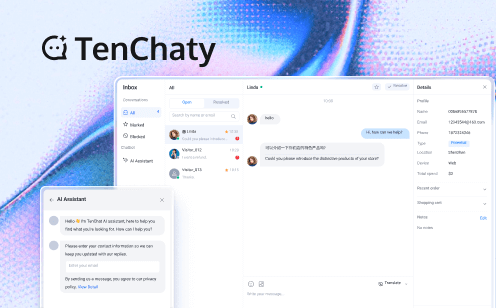

Conversational AI has emerged as a transformative tool, enabling seamless interactions between humans and machines. Tencent RTC has taken a significant step forward by integrating its Conversational AI demo with large language models (LLMs). This integration lets users hold natural, human-like voice conversations without typing text prompts.

In this tutorial, we will guide you through setting up and using Tencent RTC's Conversational AI, focusing on its integration with DeepSeek and other popular STT (Speech-to-Text), LLM (Large Language Model), and TTS (Text-to-Speech) services. When OpenAI is selected as the LLM provider, any model that complies with the OpenAI standard protocol is supported, including Claude and Google Gemini (these models provide OpenAI-compatible API endpoints).

Why Choose Tencent RTC for Conversational AI?

Tencent RTC stands out for its versatility, ease of use, and robust feature set, making it an ideal choice for implementing Conversational AI solutions. Here are the key reasons to choose Tencent RTC:

1. Seamless Integration with Multiple AI Services

Tencent RTC supports integration with a wide range of STT, LLM, and TTS providers, including Azure, Deepgram, OpenAI, DeepSeek, Minimax, Claude, Cartesia, Elevenlabs, and more. This flexibility allows you to choose the best services for your specific use case. When OpenAI is selected as the LLM provider, any model that provides an OpenAI-compatible API endpoint is supported, including Claude and Google Gemini.

2. No-Code Configuration

Tencent RTC simplifies the setup process with a user-friendly interface, enabling you to configure Conversational AI in just a few minutes. No extensive coding knowledge is required, making it accessible to everyone.

3. Real-Time Interruption Support

Users can interrupt the AI's response at any time, enhancing the fluidity and naturalness of conversations.

4. Advanced Features

AI Noise Suppression: Ensures clear audio input, even in noisy environments.

Latency Monitoring: Tracks real-time performance to optimize conversation flow, including LLM latency and TTS latency.

5. Multi-Platform Integration

Tencent RTC also supports local development and deployment across Web, iOS, and Android platforms, providing flexibility for diverse applications.

Step-by-Step Guide to Setting Up Conversational AI with Tencent RTC

Step 1: Basic Configuration

Go to the Tencent RTC Console and create an 'RTC-Engine' application.

Visit the Conversational AI Console and click 'Get Started'.

1. Select application

On the Basic Configuration page, first select the RTC-Engine application you want to test. To use the STT automatic speech recognition feature, enable the STT service for the application by purchasing one of the RTC-Engine Monthly Packages (including the Starter Plan).

2. STT configuration items

Tencent RTC provides default parameters. You can fine-tune the STT configuration items according to your needs.

- Fill in the Bot welcome message.

- Select the Interruption Mode. The bot's speech can be interrupted automatically based on specific rules. Both Intelligent Interruption and Manual Interruption are supported.

- Select the Interruption Duration.

Click 'Next: STT Config' after the configuration is complete.
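To make the Interruption Duration setting more concrete, here is a minimal sketch of the rule it suggests for automatic interruption: the bot's reply is cut off only once the user has been speaking continuously for at least the configured duration. The function name, the frame format, and the 500 ms value are illustrative assumptions, not Tencent RTC's actual implementation.

```javascript
// Hypothetical model of automatic interruption; not Tencent RTC's real logic.
// frames: array of { t: timestampMs, voiced: boolean } in chronological order.
// Returns the timestamp at which the bot would be interrupted, or null.
function findInterruptionTime(frames, interruptionDurationMs) {
  let speechStart = null; // start of the current continuous run of voiced frames
  for (const frame of frames) {
    if (!frame.voiced) {
      speechStart = null; // silence resets the run
      continue;
    }
    if (speechStart === null) speechStart = frame.t;
    if (frame.t - speechStart >= interruptionDurationMs) return frame.t;
  }
  return null;
}

// A short burst of sound (200 ms) followed by silence.
const shortNoise = [0, 100, 200]
  .map((t) => ({ t, voiced: true }))
  .concat([{ t: 300, voiced: false }]);

// Sustained speech lasting 600 ms.
const sustainedSpeech = [0, 100, 200, 300, 400, 500, 600]
  .map((t) => ({ t, voiced: true }));

console.log(findInterruptionTime(shortNoise, 500));      // null
console.log(findInterruptionTime(sustainedSpeech, 500)); // 500
```

Under this model, a longer duration makes the bot harder to interrupt by accident, while a shorter one makes it react faster to the user.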

Step 2: STT (Speech-to-Text) Configuration

The STT model can be selected from Tencent, Azure, and Deepgram, and multiple languages are supported.

The VAD (voice activity detection) duration can be adjusted within a range of 240 to 2000 milliseconds. A smaller value makes sentence segmentation in speech recognition faster. After the configuration is complete, click 'Next: LLM Config'.
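The effect of the VAD duration can be illustrated with a small sketch: a sentence is treated as finished once silence lasts at least the configured duration, so a smaller value closes sentences sooner and splits speech into more segments. The function and the word-timing input format below are hypothetical and only model the idea; the 240 to 2000 ms range is the one detail taken from the console.

```javascript
const VAD_MIN_MS = 240;
const VAD_MAX_MS = 2000;

// words: [{ text, startMs, endMs }] sorted by time (illustrative input format).
// Returns the sentences produced when a pause of at least vadMs ends a sentence.
function segmentByVad(words, vadMs) {
  if (vadMs < VAD_MIN_MS || vadMs > VAD_MAX_MS) {
    throw new RangeError(`VAD duration must be within ${VAD_MIN_MS}-${VAD_MAX_MS} ms`);
  }
  const sentences = [];
  let current = [];
  let lastEnd = null;
  for (const word of words) {
    // A long enough pause before this word closes the current sentence.
    if (lastEnd !== null && word.startMs - lastEnd >= vadMs) {
      sentences.push(current.join(' '));
      current = [];
    }
    current.push(word.text);
    lastEnd = word.endMs;
  }
  if (current.length > 0) sentences.push(current.join(' '));
  return sentences;
}

const words = [
  { text: 'book', startMs: 0, endMs: 300 },
  { text: 'a', startMs: 350, endMs: 400 },
  { text: 'table', startMs: 450, endMs: 800 },
  { text: 'for', startMs: 1300, endMs: 1500 }, // 500 ms pause before this word
  { text: 'two', startMs: 1550, endMs: 1800 },
];

console.log(segmentByVad(words, 240));  // [ 'book a table', 'for two' ]
console.log(segmentByVad(words, 1000)); // [ 'book a table for two' ]
```

The trade-off is that a very small value may split a sentence at a natural pause, while a large value delays the bot's reply until the longer silence has elapsed.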

| STT | Configuration | Details |
| --- | --- | --- |
| Tencent | When selecting Tencent as the STT provider, we provide default parameters for other configurations, and you can fine-tune them according to your needs. | |
| Azure | When selecting Azure as the STT provider: | |
| Deepgram | When selecting Deepgram as the STT provider, we provide default parameters for other configurations; you can fine-tune them according to your needs. The API Key can be obtained from Deepgram. | |

Step 3: LLM (Large Language Model) Configuration

Model Selection: Choose from a variety of LLM providers, including OpenAI, DeepSeek, Minimax, Claude, Coze, Dify, and more.

- Tencent RTC not only supports foundation models common across various industries but also offers wide-ranging compatibility. When OpenAI is selected as the provider, any model that complies with the OpenAI protocol is supported, including Claude and Google Gemini (these models provide OpenAI-compatible API endpoints).

- Furthermore, Tencent RTC supports multiple renowned overseas LLM cloud platforms, offering users a rich selection. This flexibility enables Tencent RTC to meet AI application development needs for various scenarios and requirements.
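Because this compatibility hinges on the OpenAI chat-completions protocol, it may help to see what such a request looks like. The sketch below only builds the request and does not send it; the helper name is hypothetical, and the DeepSeek base URL and model name are assumptions that should be checked against the provider's documentation.

```javascript
// Builds a chat-completions request for any OpenAI-compatible endpoint.
// Hypothetical helper for illustration; not part of any Tencent RTC SDK.
function buildChatRequest({ baseUrl, apiKey, model, messages, stream = true }) {
  return {
    // Strip trailing slashes so the path is joined cleanly.
    url: `${baseUrl.replace(/\/+$/, '')}/chat/completions`,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages, stream }),
    },
  };
}

const request = buildChatRequest({
  baseUrl: 'https://api.deepseek.com/v1', // assumed; check the provider's docs
  apiKey: 'YOUR_API_KEY',                 // placeholder
  model: 'deepseek-chat',                 // assumed model name
  messages: [
    { role: 'system', content: 'You are a concise voice assistant.' },
    { role: 'user', content: 'Hello!' },
  ],
});

console.log(request.url); // https://api.deepseek.com/v1/chat/completions
// To send it (Node 18+ or a browser): await fetch(request.url, request.options);
```

Switching to another OpenAI-compatible provider would, under these assumptions, only change `baseUrl`, `apiKey`, and `model`, which is what makes a single "OpenAI" provider option cover many models.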

| LLM | Configuration | Details |
| --- | --- | --- |
| OpenAI | | |
| DeepSeek | | |
| Minimax | | |
| Tencent Hunyuan | | |
| Coze | | |
| Dify | When Dify is selected as the LLM provider, we provide default parameters for other configurations, and you can fine-tune them according to your needs. The API Key can be obtained from Dify. | |

Click 'Next: TTS Config' after the configuration is complete.

Step 4: TTS (Text-to-Speech) Configuration

On the TTS configuration page, the supported TTS providers include Minimax, Azure, Cartesia, Elevenlabs, Tencent, and Custom.

| TTS | Configuration | Details |
| --- | --- | --- |
| Cartesia | | |
| Minimax | | |
| Elevenlabs | | |
| Tencent | | |
| Azure | | |
| Custom | | |

Click 'Connect' after the configuration is complete.

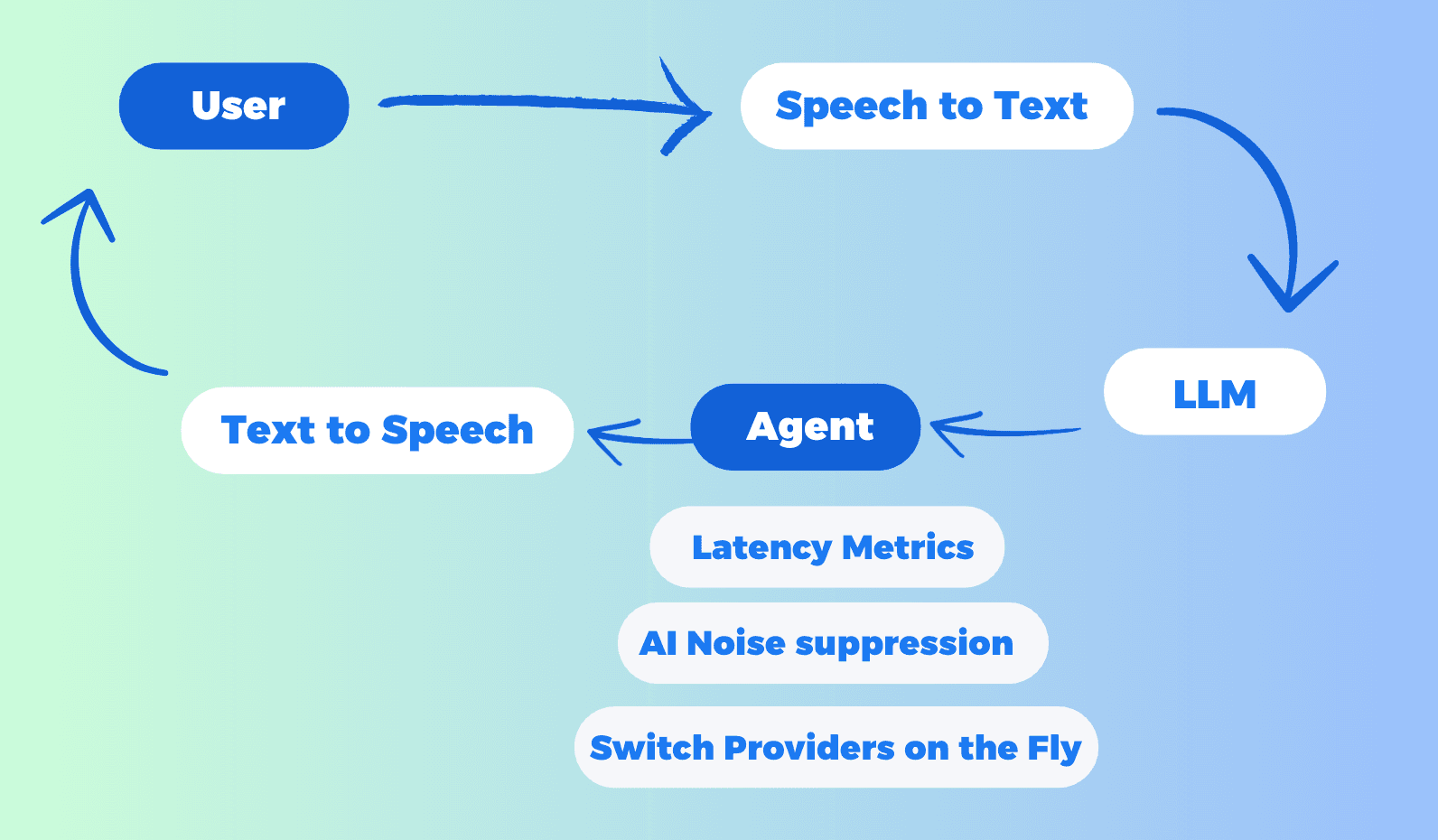

Start Conversation with AI

Now you can interact with the AI in real time.

View Latency Metrics: Monitor real-time latency for both LLM and TTS to ensure smooth performance.

Switch Providers on the Fly: Without ending the conversation, you can modify the interruption duration, or switch between different LLM and TTS providers (and voice IDs) to experiment with various configurations.

Change Language: After ending the conversation, you can switch to a different language for multilingual support.

AI Noise Suppression: Tencent RTC's AI Noise Suppression feature employs advanced algorithms to filter out background noise, ensuring clear and intelligible speech input even in noisy environments. To use this feature, you need to activate the Standard or Pro version of the RTC-Engine Monthly Packages.

Error codes: If error codes are displayed during the conversation, note that some of them may block the dialogue. Modify the configuration promptly according to the error message. If the issue cannot be resolved, copy the Task ID and Round ID and contact us. Other error codes, such as a response timeout, do not affect the normal progress of the dialogue, and you can handle them as the situation requires.

Local Development and Quick Start

Tencent RTC lets you quickly run Conversational AI in your local environment. The parameters you entered during configuration are already preset and do not need to be filled in again. Currently the Web is supported; iOS and Android will be launched soon.
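For orientation, the preset parameters end up as the request body that the front-end page sends to the server, which forwards it to `StartAIConversation`. The sketch below shows roughly what such an object could look like. The helper is hypothetical, the field names follow my reading of the TRTC `StartAIConversation` API, and every value is a placeholder, so treat all of it as an assumption and compare it against what the console actually generates.

```javascript
// Hypothetical helper: assembles a StartAIConversation request body.
// Field names are assumptions to verify against the TRTC API reference.
function buildChatConfig({ sdkAppId, userId, robotId, robotSig, llm, tts }) {
  return {
    SdkAppId: sdkAppId,
    AgentConfig: {
      UserId: robotId,              // the bot's user ID in the room
      UserSig: robotSig,            // signature for the bot's user ID
      TargetUserId: userId,         // the human user the bot talks to
      WelcomeMessage: 'Hello, how can I help you?',
      InterruptMode: 0,             // assumed: 0 means automatic interruption
      InterruptSpeechDuration: 500, // assumed unit: milliseconds
    },
    STTConfig: {
      Language: 'en',
      VadSilenceTime: 1000,         // assumed field for the VAD duration (ms)
    },
    // Assumed: LLM and TTS settings are passed as JSON strings.
    LLMConfig: JSON.stringify(llm),
    TTSConfig: JSON.stringify(tts),
  };
}

const exampleChatConfig = buildChatConfig({
  sdkAppId: 1400000000,             // placeholder
  userId: 'user_1',                 // placeholder
  robotId: 'robot_1',               // placeholder
  robotSig: 'ROBOT_USER_SIG',       // placeholder
  llm: {
    LLMType: 'openai',              // assumed value for OpenAI-compatible models
    Model: 'deepseek-chat',         // assumed model name
    APIKey: 'YOUR_API_KEY',
    APIUrl: 'https://api.deepseek.com/v1/chat/completions', // assumed
    Streaming: true,
  },
  tts: { TTSType: 'YOUR_TTS_PROVIDER' }, // placeholder; provider-specific fields omitted
});

console.log(Object.keys(exampleChatConfig));
```

`RoomId` is deliberately left out here because the front-end page adds it when it starts the conversation.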

Step 1: Get Key Parameters

Obtain the SecretId and SecretKey of the RTC-Engine.
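Since the SecretId and SecretKey grant access to your Tencent Cloud account, one common precaution is to read them from environment variables rather than hardcoding them in source files. The following is a minimal sketch; the variable names `TENCENT_SECRET_ID` and `TENCENT_SECRET_KEY` are hypothetical choices, not names required by the SDK.

```javascript
// Reads credentials from environment variables (names are illustrative).
// Accepts an env object so the function can be exercised without real secrets.
function loadCredentials(env = process.env) {
  const secretId = env.TENCENT_SECRET_ID;
  const secretKey = env.TENCENT_SECRET_KEY;
  if (!secretId || !secretKey) {
    throw new Error('Set TENCENT_SECRET_ID and TENCENT_SECRET_KEY before starting the server');
  }
  return { secretId, secretKey };
}

// Demonstration with a fake environment object.
const demoCredentials = loadCredentials({
  TENCENT_SECRET_ID: 'demo-id',
  TENCENT_SECRET_KEY: 'demo-key',
});
console.log(Object.keys(demoCredentials)); // [ 'secretId', 'secretKey' ]
```

The returned object has the same shape as the `credential` field of the client configuration in the server code, so it could be passed there directly, for example after running `TENCENT_SECRET_ID=... TENCENT_SECRET_KEY=... node index.js`.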

Step 2: Run Node.js Code

```javascript
/**
 * Tencent Cloud TRTC API Wrapper
 * Please install dependencies first: npm i express tencentcloud-sdk-nodejs-trtc
 * To start: node index.js
 */
const express = require('express');
const tencentcloud = require('tencentcloud-sdk-nodejs-trtc');

const TrtcClient = tencentcloud.trtc.v20190722.Client;

const clientConfig = {
  credential: {
    secretId: 'Fill in your secretId',
    secretKey: 'Fill in your secretKey',
  },
  region: 'ap-singapore',
  profile: {
    httpProfile: {
      endpoint: 'trtc.tencentcloudapi.com',
    },
  },
};

const client = new TrtcClient(clientConfig);

const app = express();
app.use(express.json());

// Let the local test page call this server from a different origin.
app.use((req, res, next) => {
  res.header('Access-Control-Allow-Origin', '*'); // Allow access from all domains
  res.header('Access-Control-Allow-Headers', 'Origin, X-Requested-With, Content-Type, Accept');
  next();
});

// Forward the request body to StartAIConversation and return the API response.
app.post('/start-conversation', (req, res) => {
  const params = req.body;
  client.StartAIConversation(params).then(
    (data) => res.json(data),
    (err) => res.status(500).json({ error: err.message })
  );
});

// Forward the request body to StopAIConversation and return the API response.
app.post('/stop-conversation', (req, res) => {
  const params = req.body;
  client.StopAIConversation(params).then(
    (data) => res.json(data),
    (err) => res.status(500).json({ error: err.message })
  );
});

app.listen(3000, () => {
  console.log('Server running at http://localhost:3000/');
});
```

Step 3: Run Frontend Code

You can copy and run the following front-end code directly, or modify the code to suit your specific requirements and test the setup in a local environment.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>AIConversation</title>
  <script src="https://web.sdk.qcloud.com/trtc/webrtc/v5/dist/trtc.js"></script>
  <style>
    .chat-list {
      width: 600px;
      height: 400px;
      border: 1px solid #ccc;
      padding: 10px;
      background-color: #eee;
      margin-bottom: 20px;
      box-sizing: border-box;
      display: flex;
      flex-direction: column-reverse;
      overflow: auto;
    }
    .chat-item {
      display: flex;
      flex-direction: column;
      margin-bottom: 10px;
    }
    .chat-item.user {
      align-items: flex-end;
    }
    .chat-item.ai {
      align-items: flex-start;
    }
    .chat-id {
      font-weight: bold;
      font-size: 12px;
      color: black;
      margin-right: 5px;
      margin-bottom: 3px;
    }
    .chat-text {
      background-color: #F2F4F8;
      padding: 10px 20px;
      border-radius: 8px;
      max-width: 80%;
      font-size: 14px;
      white-space: pre-wrap;
      word-break: break-all;
    }
    .chat-item.user .chat-text {
      border-top-right-radius: 0;
      background-color: #D4E3FC;
    }
    .chat-item.ai .chat-text {
      border-top-left-radius: 0;
    }
  </style>
</head>
<body>
  <div id='app'>
    <div class="chat-list"></div>
    <button class="start-button" onclick="startConversation()">Start Conversation</button>
    <button class="end-button" disabled onclick="stopConversation()">End Conversation</button>
  </div>
  <script>
    const chatConversationDiv = document.querySelector(".chat-list");
    const startButton = document.querySelector(".start-button");
    const endButton = document.querySelector(".end-button");

    let messageList = [];
    let taskId = null;
    let trtcClient;
    const roomId = Math.floor(Math.random() * 90000) + 10000;

    // The preset configuration object is missing from this copy of the code.
    // Replace the empty object below with the one provided for your application.
    const { chatConfig, userInfo } = { /* preset configuration goes here */ };
    const { sdkAppId, userSig, robotSig, userId, robotId } = userInfo;

    // Re-render the whole chat list from messageList.
    function ChatBoxRender() {
      const template = document.createDocumentFragment();
      messageList.forEach(item => {
        const itemDiv = document.createElement("div");
        itemDiv.classList.add("chat-item");
        if (item.type === 'ai') {
          itemDiv.classList.add("ai");
        } else {
          itemDiv.classList.add("user");
        }
        const idTypeDiv = document.createElement("div");
        idTypeDiv.classList.add("chat-id");
        idTypeDiv.innerText = item.sender;
        const contentDiv = document.createElement("div");
        contentDiv.classList.add("chat-text");
        contentDiv.innerText = item.content;
        itemDiv.appendChild(idTypeDiv);
        itemDiv.appendChild(contentDiv);
        template.appendChild(itemDiv);
      });
      chatConversationDiv.innerHTML = "";
      chatConversationDiv.appendChild(template);
    }

    // data is an already serialized JSON string.
    async function StartAIConversation(data) {
      return await fetch("http://localhost:3000/start-conversation", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
        },
        body: data,
      }).then(res => res.json());
    }

    async function StopAIConversation(data) {
      return await fetch("http://localhost:3000/stop-conversation", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
        },
        body: data,
      }).then(res => res.json());
    }

    async function startConversation() {
      try {
        trtcClient = trtcClient || TRTC.create();
        await trtcClient.enterRoom({
          roomId,
          scene: "rtc",
          sdkAppId,
          userId,
          userSig,
        });
        // Subtitles for both the user and the bot arrive as custom messages.
        trtcClient.on(TRTC.EVENT.CUSTOM_MESSAGE, (event) => {
          let jsonData = new TextDecoder().decode(event.data);
          let data = JSON.parse(jsonData);
          if (data.type === 10000) {
            const sender = data.sender;
            const text = data.payload.text;
            const roundId = data.payload.roundid;
            // Compare against the configured bot ID rather than a hardcoded string.
            const isRobot = sender === robotId;
            const end = data.payload.end; // Robot dynamically displays text, no ellipsis
            const msgItem = messageList.find(item => item.id === roundId && item.sender === sender);
            if (msgItem) {
              // Same round and sender: update the existing bubble in place.
              msgItem.content = text;
              msgItem.end = end;
            } else {
              messageList.unshift({
                id: roundId,
                content: text,
                sender,
                type: isRobot ? 'ai' : 'user',
                end: end
              });
            }
            ChatBoxRender();
          }
        });
        await trtcClient?.startLocalAudio();
        const data = {...chatConfig, RoomId: String(roomId)};
        const res = await StartAIConversation(JSON.stringify(data));
        taskId = res.TaskId;
      } catch (error) {
        console.error('Failed to start the conversation:', error);
        stopConversation();
        return;
      }
      startButton.disabled = true;
      endButton.disabled = false;
    }

    async function stopConversation() {
      try {
        taskId && await StopAIConversation(JSON.stringify({
          TaskId: taskId,
        }));
      } catch (error) { }
      try {
        await trtcClient.exitRoom();
      } catch (error) { }
      // The client may be missing if startup failed before it was created.
      trtcClient?.destroy();
      trtcClient = null;
      taskId = null;
      endButton.disabled = true;
      startButton.disabled = false;
    }
  </script>
</body>
</html>
```

Conclusion

Tencent RTC's integration with DeepSeek and support for multiple STT, LLM, and TTS services make it a powerful tool for implementing conversational AI. As long as the model you want to use is compatible with OpenAI and supports function calls, you can integrate it with the real-time voice agent.

With its no-code configuration, real-time interruption support, and advanced features such as AI noise suppression and latency monitoring, Tencent RTC enables developers to create engaging and efficient voice interactions. This complete voice interaction solution hides the complex underlying logic, so Tencent RTC takes care of it for you.

You only need to focus on the business logic to make AI speak more naturally and more in line with actual needs. Whether you are building a customer service chatbot, an interactive voice assistant, or any other application, Tencent RTC is your preferred platform for seamless conversational AI.

For further help, refer to the extensive documentation and support resources provided on the Tencent RTC website. Happy developing!