We all know that Flash has become a thing of the past, with mainstream browsers such as Chrome dropping it in version 88. So what is the go-to solution for live streaming on the web now? Enter WebRTC.

WebRTC lets you transmit video and audio streams in real time directly from the browser, simplifying the way live content is shared online. With WebRTC, a website can establish point-to-point connections between two browsers, or between a browser and a server, without the help of any intermediaries, and transmit video streams, audio streams, or other arbitrary data over them.

Put simply, users no longer need streaming software such as OBS (Open Broadcaster Software): they can start a live stream just by opening a webpage.

What is Streaming with WebRTC?

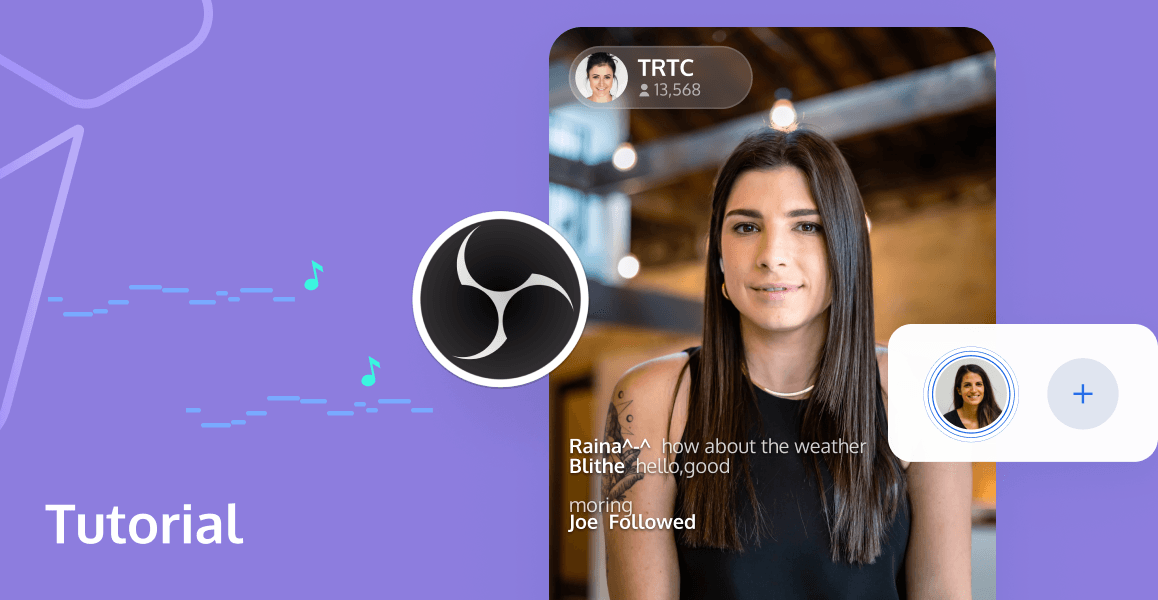

The underlying implementation of WebRTC is undeniably complex, yet its usage on the web is remarkably straightforward. With just a few lines of code, you can establish peer-to-peer connections and facilitate data transmission. Browsers have abstracted the intricate WebRTC functionality into three key APIs:

- MediaStream: This API allows you to acquire audio and video streams.

- RTCPeerConnection: It's responsible for establishing peer-to-peer connections and facilitating the transmission of audio and video data.

- RTCDataChannel: This API comes into play for the transmission of arbitrary application data.

To start live streaming, you only need the first two WebRTC APIs. Begin by obtaining a MediaStream object representing your audio and video feed. Following that, establish a peer-to-peer connection using RTCPeerConnection, and through this connection, upload your MediaStream to the live server. It's this simple interaction that powers real-time broadcasting on the web.
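The two-step flow above can be sketched roughly as follows. This is a minimal illustration rather than a definitive implementation: the names `publishStream`, `startCameraBroadcast`, and `ExchangeSdp` are hypothetical, and how the SDP offer reaches the live server is an assumption (the article does not specify it), so the exchange is passed in as a callback. ICE candidate exchange and error handling are left out for brevity.

```typescript
// Hypothetical signaling callback: delivers the SDP offer to the live server
// and resolves with the server's SDP answer. The transport is an assumption.
type ExchangeSdp = (offerSdp: string) => Promise<string>;

async function publishStream(
  stream: MediaStream,
  exchangeSdp: ExchangeSdp,
  pc: RTCPeerConnection = new RTCPeerConnection(),
): Promise<RTCPeerConnection> {
  // Attach every captured track so it is sent over the connection.
  for (const track of stream.getTracks()) {
    pc.addTrack(track, stream);
  }
  // Standard offer/answer negotiation.
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  const answerSdp = await exchangeSdp(offer.sdp ?? "");
  await pc.setRemoteDescription({ type: "answer", sdp: answerSdp });
  return pc;
}

// Browser-only entry point: needs a secure context and user permission.
async function startCameraBroadcast(exchangeSdp: ExchangeSdp): Promise<RTCPeerConnection> {
  const stream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  return publishStream(stream, exchangeSdp);
}
```

Passing the connection and the signaling step in as parameters keeps the negotiation logic independent of any particular server.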

Collecting a Live Stream

The collection of live streaming content depends on how you acquire the MediaStream object. WebRTC provides specific interfaces for this purpose.

The most commonly used interface is navigator.mediaDevices.getUserMedia, which opens the microphone and camera for capturing audio and video streams. Another option is navigator.mediaDevices.getDisplayMedia, which captures audio and video from shared screen windows (like desktop, applications, or browser tabs).

Both of these interfaces have their limitations:

- They can only be used in secure contexts, such as over HTTPS or on localhost for local development.

- In WKWebView, getUserMedia is supported only on iOS 14.3 and above, and the getDisplayMedia interface is not supported on mobile devices.
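Given these limitations, it can help to check for the interfaces before calling them. The sketch below is one possible way to do that; `openCapture` and `CaptureSource` are hypothetical names, and the media devices object is passed in explicitly so the check also covers insecure contexts, where `navigator.mediaDevices` is undefined.

```typescript
type CaptureSource = "camera" | "screen";

async function openCapture(
  source: CaptureSource,
  devices: MediaDevices | undefined,
): Promise<MediaStream> {
  if (!devices) {
    // Typical cause: the page is not served over HTTPS or from localhost.
    throw new Error("mediaDevices is unavailable in this context");
  }
  if (source === "screen") {
    if (typeof devices.getDisplayMedia !== "function") {
      // Expected on mobile browsers, per the limitation above.
      throw new Error("getDisplayMedia is not supported here");
    }
    return devices.getDisplayMedia({ video: true, audio: true });
  }
  return devices.getUserMedia({ video: true, audio: true });
}

// Browser usage:
//   const stream = await openCapture("camera", navigator.mediaDevices);
```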

Fortunately, WebRTC offers the captureStream interface, greatly expanding the definition of MediaStreams. This versatility allows for diverse and dynamic streaming content, breaking away from a single, fixed source.

By invoking the captureStream method on HTMLMediaElement and HTMLCanvasElement, you can capture the actively rendered content of the current element and create a real-time MediaStream object. In simple terms, anything being displayed through Video or Canvas, whether it's video, audio, images, or custom drawings, can be transformed into a live stream for broadcasting.

However, several hiccups came up while implementing video.captureStream in different browsers:

- Chrome Browser: Starting from version 88, video streams obtained through video.captureStream cannot be played properly by the receiver via WebRTC. Up to this point, Chrome has not completely resolved this issue. The only workaround is to disable the hardware encoding option in the browser settings, but it's not very user-friendly.

- Firefox Browser: The captureStream method must include the "moz" prefix, i.e., mozCaptureStream, to function correctly.

- Safari Browser: The Video element does not support the captureStream method at all.

As a result, we ultimately abandoned the use of the video.captureStream method and used canvas.captureStream instead to generate various custom streams.
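A minimal sketch of the canvas-based approach is shown below, assuming a 2D context and a video element as the drawing source. `createCanvasStream` is a hypothetical helper, not the authors' implementation; the `schedule` parameter defaults to requestAnimationFrame and exists only so the redraw loop can be driven by something else.

```typescript
function createCanvasStream(
  canvas: HTMLCanvasElement,
  video: HTMLVideoElement,
  fps = 30,
  schedule: (cb: () => void) => void = (cb) => { requestAnimationFrame(cb); },
): { stream: MediaStream; stop: () => void } {
  const ctx = canvas.getContext("2d");
  if (!ctx) throw new Error("2D canvas context is unavailable");

  let running = true;
  const renderFrame = () => {
    if (!running) return;
    // Copy the current video frame onto the canvas, scaled to fill it.
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    schedule(renderFrame);
  };
  renderFrame();

  // captureStream turns whatever is drawn on the canvas into a live video track.
  return {
    stream: canvas.captureStream(fps),
    stop: () => { running = false; },
  };
}
```

Because the stream reflects whatever is drawn, the same pattern works for images or custom drawings by replacing the `drawImage` call.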

Preprocessing the Captured Content

If you only capture video and images through Canvas and convert them into a real-time stream, you can generate only a single-source video stream. What if we could combine multiple audio and video streams into a single stream? Before generating the real-time stream, we can mix and preprocess the captured visual content through Canvas and apply similar mixing and preprocessing to the captured audio using the WebAudio interface. This approach allows us to bring most of the OBS (Open Broadcaster Software) functionality to the web.

With the powerful assistance of Canvas and WebAudio, OBS-web is easy to achieve. Here's a brief overview of our basic approach in designing and implementing OBS-web.

First, we implemented the fundamental mixing function, which enables the combination of multiple video and audio streams into one. Additionally, users can customize the size and position of each video feed and adjust the volume of each audio source, as shown in the following diagram:

Then, we implemented individual preprocessing effects for each video feed, such as mirror flipping and filter effects:

Finally, add watermarks, text, and other supplementary content to the visuals, and you've pretty much covered the basic functions of OBS-web! The overall effect can be referenced in the image below:

When using Canvas for rendering visuals, WebGL can be used to improve rendering performance through GPU acceleration. Furthermore, WebGL shaders can achieve more sophisticated visual effects, making full use of the browser's built-in capabilities. We have also developed a foundational composition protocol that supports input sources such as MediaStream, HTMLVideoElement, and HTMLAudioElement. This protocol defines processing tasks for video and audio streams according to rules and dynamically drives the processing of visuals and sounds as the data changes. The flexibility of this design makes it easy to integrate new effects and improves development efficiency.
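To make the mixing idea concrete, here is a simplified sketch that uses the 2D canvas context rather than WebGL. It is not the composition protocol described above: `VideoLayer`, `drawLayers`, `mixAudio`, and `composeOutput` are hypothetical names chosen for illustration, and support for the `filter` property of the 2D context varies by browser.

```typescript
interface VideoLayer {
  source: CanvasImageSource; // e.g. a video element or a watermark image
  x: number;
  y: number;
  width: number;
  height: number;
  mirror?: boolean; // horizontal flip
  filter?: string;  // CSS filter string such as "grayscale(1)"
}

// Draw every layer in order; later layers end up on top (picture-in-picture).
function drawLayers(ctx: CanvasRenderingContext2D, layers: VideoLayer[]): void {
  ctx.clearRect(0, 0, ctx.canvas.width, ctx.canvas.height);
  for (const layer of layers) {
    ctx.save();
    ctx.filter = layer.filter ?? "none";
    if (layer.mirror) {
      // Move the origin to the layer's right edge, then flip the x axis.
      ctx.translate(layer.x + layer.width, layer.y);
      ctx.scale(-1, 1);
      ctx.drawImage(layer.source, 0, 0, layer.width, layer.height);
    } else {
      ctx.drawImage(layer.source, layer.x, layer.y, layer.width, layer.height);
    }
    ctx.restore();
  }
}

// Route each input through its own GainNode into a single destination stream.
function mixAudio(
  audioCtx: AudioContext,
  inputs: { stream: MediaStream; volume: number }[],
): MediaStream {
  const destination = audioCtx.createMediaStreamDestination();
  for (const input of inputs) {
    const gain = audioCtx.createGain();
    gain.gain.value = input.volume;
    audioCtx.createMediaStreamSource(input.stream).connect(gain).connect(destination);
  }
  return destination.stream;
}

// Combine the canvas video track with the mixed audio track into one stream.
function composeOutput(canvas: HTMLCanvasElement, mixedAudio: MediaStream, fps = 30): MediaStream {
  return new MediaStream([
    ...canvas.captureStream(fps).getVideoTracks(),
    ...mixedAudio.getAudioTracks(),
  ]);
}
```

In use, `drawLayers` would be called once per rendered frame, while `mixAudio` and `composeOutput` are called once when the broadcast is set up.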

During the implementation of OBS-web, some common issues can occur, and we summarize them with their solutions as follows:

- requestAnimationFrame limitations: In most cases, Canvas rendering relies on requestAnimationFrame for frame redrawing. While this approach has benefits such as performance improvements and resource savings, it has a limitation when the webpage is inactive (hidden or minimized). During this time, the execution of requestAnimationFrame pauses and the Canvas content remains static, effectively resulting in a live stream with a frame rate of 0. To address this in live streaming scenarios, we monitor the browser's visibilitychange event. If the current page is visible (the document.hidden attribute is false), we continue using requestAnimationFrame for frame rendering. If the page is not visible (the document.hidden attribute is true), we switch to setInterval for frame rendering.

- WebAudio context and interactivity: Similar to the policies that prevent automatic video playback, a WebAudio context object starts in a suspended state when no user interaction with the page has been detected. In this state, any operations on the WebAudio context are ineffective. The context must be activated in response to user interaction with the page, transitioning it to the "running" state, before audio operations take effect.

- AudioContext and media element source: When using the createMediaElementSource method to extract audio from an HTMLVideoElement or HTMLAudioElement, each element can be extracted only once. Subsequent attempts to extract the same element result in errors. To address this, we store and reuse the initially generated result.
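The visibility-based switch between requestAnimationFrame and setInterval could look roughly like this. `startRenderLoop` and its `backgroundFps` parameter are hypothetical, and the sketch assumes `render` draws exactly one frame onto the canvas.

```typescript
function startRenderLoop(render: () => void, backgroundFps = 30): () => void {
  let rafId: number | undefined;
  let timerId: ReturnType<typeof setInterval> | undefined;

  // Cancel whichever scheduler is currently active.
  const cancelPending = () => {
    if (rafId !== undefined) cancelAnimationFrame(rafId);
    if (timerId !== undefined) clearInterval(timerId);
    rafId = undefined;
    timerId = undefined;
  };

  const reschedule = () => {
    cancelPending();
    if (document.hidden) {
      // requestAnimationFrame is paused while hidden, so fall back to a timer.
      timerId = setInterval(render, 1000 / backgroundFps);
    } else {
      const tick = () => {
        render();
        rafId = requestAnimationFrame(tick);
      };
      rafId = requestAnimationFrame(tick);
    }
  };

  document.addEventListener("visibilitychange", reschedule);
  reschedule();

  // The returned function stops the loop and removes the listener.
  return () => {
    document.removeEventListener("visibilitychange", reschedule);
    cancelPending();
  };
}
```

Note that browsers may throttle timers in background pages, so the effective frame rate while hidden can be lower than the requested one.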

These challenges and solutions are essential for ensuring the smooth operation of OBS-web, allowing for high-quality and uninterrupted live streaming.
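For the two WebAudio issues listed above, a small amount of glue code is usually enough. The sketch below is an assumption about how this might be done, not the authors' code: `getElementSource` caches the node created for each media element, and `resumeOnFirstInteraction` resumes a suspended context on the first pointer or keyboard event. Both names are hypothetical.

```typescript
// One source node per media element; a WeakMap lets entries be garbage
// collected together with the element.
const elementSourceCache = new WeakMap<HTMLMediaElement, MediaElementAudioSourceNode>();

function getElementSource(
  audioCtx: AudioContext,
  element: HTMLMediaElement,
): MediaElementAudioSourceNode {
  let source = elementSourceCache.get(element);
  if (!source) {
    // Calling createMediaElementSource twice for the same element fails,
    // so create the node once and reuse it afterwards.
    source = audioCtx.createMediaElementSource(element);
    elementSourceCache.set(element, source);
  }
  return source;
}

function resumeOnFirstInteraction(audioCtx: AudioContext, target: EventTarget): void {
  const resume = () => {
    if (audioCtx.state === "suspended") {
      void audioCtx.resume();
    }
  };
  for (const type of ["pointerdown", "keydown"]) {
    target.addEventListener(type, resume, { once: true });
  }
}

// Browser usage:
//   resumeOnFirstInteraction(audioCtx, document);
```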

Now we have brought most of the OBS functionality to the web, eliminating the need to download and install OBS software. However, is there any way to simplify the process even further?

We are delighted to say that we have found a solution to this issue.

Get Started with Our WebRTC Streaming SDK

Our WebRTC streaming SDK has integrated all the functionalities mentioned above, with a focus on addressing browser compatibility and performance issues. Our goal is to make it easy for users to create their own OBS-web applications.

Bridging Web-Based Stream Capture, Processing, and Streaming: The Ultimate SDK

With the WebRTC streaming SDK, you can capture various live streams, perform local mixing and preprocessing on these streams, such as picture-in-picture layouts, adding mirror and filter effects, watermarks, and text, and then upload the processed audio and video streams to Tencent Cloud's live streaming backend.

It's worth mentioning that you can apply real-time visual and audio effects during the streaming process without interrupting the stream. With this feature, you can even achieve effects similar to local broadcasting.

Notes

- To use the WebRTC Streaming SDK, first go to the CSS console, agree to the Tencent Cloud Service Agreement, and click Apply for Activation to activate CSS.

- The SDK does not enable local mixing and preprocessing by default because of potential browser performance issues. Users must manually activate these features using the provided interface. Before activation, only one video stream and one audio stream can be captured. After activation, you can capture and mix multiple video and audio streams, which is useful for scenarios such as online teaching or live streaming with multiple video sources and visual effects adjustments.

- You also have the option to use OBS WHIP support in conjunction with Tencent Real-Time Communication (Tencent RTC) cloud services for your streaming platform. This is an excellent choice if you prefer not to create your own platform and are seeking a more dependable and secure solution with professional support. Tencent RTC offers a free trial that includes a set amount of streaming time. To learn more, read How to push stream to TencentRTC room with OBS WHIP - Tencent RTC Documentation.

More Features We Offer

In web streaming, we sometimes need to process the streams further, for example with beauty filters, makeup, and popular AR effects. Tencent RTC currently offers a WebAR SDK that can be used in conjunction with the WebRTC Streaming SDK to enhance the stream processing capabilities. More services and products are coming soon. Please stay tuned on our official website, Tencent RTC.

If you have any questions or need assistance, our support team is always ready to help. Please feel free to Contact Us - Tencent RTC or join us on Discord.