GPT-4o & RTC: Leading a new era of real-time multimodal interaction (Startup Enterprise Plan)

📅 On May 13, 2024, OpenAI announced the GPT-4o model, which can reason in real time across audio, vision, and text. It accepts any combination of text, audio, and images as input and generates any combination of text, audio, and images as output.

🤔 OpenAI has also adopted RTC technology for the first time with GPT-4o. Interaction with the model shows extremely low latency, which opens up richer applications of real-time interaction with large models.

👀 GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in conversation, and it can be interrupted at any time. This is more than a simple speech-to-text pipeline: the model can understand tone and intonation, act as an emotionally aware conversational assistant, and serve as a real-time simultaneous interpreter. During the live demo, the presenter pretended to breathe rapidly, and GPT-4o recognized his breathing pattern and promptly offered suggestions to help him relax.

GPT-4o: Multimodal Model ⚙️

This responsiveness comes from GPT-4o itself, which unifies the different modalities in a single multimodal base model.

Before GPT-4o, users could use Voice Mode (consisting of three separate models) to interact with ChatGPT, going through a process of "speech-to-text, question-answering, and text-to-speech":

1. Speech recognition or ASR: audio -> text1

2. LLM that plans what to say next: text1 -> text2

3. Speech synthesis or TTS: text2 -> audio

However, the average latency of this process was 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4), and a lot of information was lost along the way. For example, GPT-4 could not directly observe pitch, multiple speakers, or background noise, nor could it output laughter, singing, or emotional expression. GPT-4o feeds speech directly to the large model in real time, which greatly reduces response time and brings it close to the pace of human conversation.
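The three-stage pipeline above can be sketched in Python as follows. This is only an illustrative sketch: `AudioClip`, `asr`, `llm`, and `tts` are hypothetical stand-ins, not OpenAI's models or API. It shows why tone and background noise never reach the language model in a cascaded design, and how the latencies quoted above compare with GPT-4o's 320-millisecond average.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical container: spoken words plus non-verbal cues."""
    words: str
    tone: str        # e.g. "anxious"
    background: str  # e.g. "street noise"


def asr(clip: AudioClip) -> str:
    """Stage 1 (audio -> text1): only the words survive transcription."""
    return clip.words


def llm(text1: str) -> str:
    """Stage 2 (text1 -> text2): placeholder for the language model."""
    return f"I heard: {text1}"


def tts(text2: str) -> AudioClip:
    """Stage 3 (text2 -> audio): synthesis in a fixed, neutral voice."""
    return AudioClip(words=text2, tone="neutral", background="none")


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    """Chain the three separate models, as in the earlier Voice Mode."""
    return tts(llm(asr(clip)))


user = AudioClip(words="I can't calm down", tone="anxious", background="street noise")
reply = cascaded_voice_mode(user)
print(reply.words)  # the reply is built from the words alone
print(reply.tone)   # the user's anxious tone never reached stage 2

# Average latencies quoted in the text, in seconds.
CASCADED_LATENCY_S = {"GPT-3.5": 2.8, "GPT-4": 5.4}
GPT4O_AVERAGE_S = 0.320
for name, latency in CASCADED_LATENCY_S.items():
    ratio = latency / GPT4O_AVERAGE_S
    print(f"{name} pipeline: about {ratio:.1f}x the GPT-4o average")
```

Because stage 1 hands only a string to stage 2, anything that is not a word is discarded before the model sees it. An end-to-end model avoids that hand-off entirely.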

Compared to existing models, GPT-4o is especially strong at vision and audio understanding. It significantly improves speech recognition performance across all languages, particularly lower-resourced ones.

GPT-4o also achieves state-of-the-art performance in speech translation and outperforms Whisper-v3 on the MLS benchmark.

With GPT-4o, OpenAI has trained a new end-to-end model across text, vision, and audio, meaning that all inputs and outputs are handled by the same neural network. This leap rests on two things: the evolution of large models and the application of RTC (Real-Time Communication) capabilities.

GPT-4o Leads the Trend of Real-Time Multimodal Development 📈

The release of GPT-4o signals that end-to-end real-time multimodal processing will become a new direction for large model development. Real-time text, audio, and video transmission is gradually becoming a standard capability of real-time large models. By integrating multiple data modalities and responding instantly, this technology can understand and respond to user needs from multiple angles, providing a more natural and efficient user experience. Other large model vendors may follow with their own products offering end-to-end real-time multimodal capabilities. RTC technology plays a crucial role in this kind of multimodal processing.

1. Real-time audio input and output 🔊

RTC technology ensures that the user's voice input is transmitted to the processing system in real time, where it quickly undergoes speech recognition and is converted into text. The text generated by the processing system is sent back to the user in real time through RTC and converted into natural, smooth voice output through speech synthesis.

2. Real-time video transmission and analysis 🧐

RTC technology can transmit the user's video data to the processing system in real time for image and video analysis. Real-time video analysis can recognize the user's expressions and actions, giving the system more emotional and behavioral information and enhancing the interactive experience.

3. Low latency data transmission 💨

RTC technology uses optimized transmission protocols to keep the latency of multimodal data transmission low, so that network delay does not degrade the interactive experience. It can also dynamically adjust the bitrate of the transmitted data according to current network conditions, ensuring stable transmission quality across different network environments.

These capabilities also suggest many possible applications of GPT-4o, such as online education tutoring, virtual conference assistants, museum guides, assistance for visually impaired people, and simultaneous interpretation.
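Dynamic bitrate adjustment can be illustrated with a simple rule-based controller. The sketch below is a hypothetical illustration, not Tencent RTC's actual algorithm: the function name `next_bitrate_kbps`, the packet-loss and round-trip-time thresholds, and the step sizes are all assumptions chosen for readability. It lowers the bitrate sharply when the network looks congested and raises it gently when conditions are good.

```python
def next_bitrate_kbps(
    current_kbps: int,
    loss_rate: float,
    rtt_ms: float,
    min_kbps: int = 100,
    max_kbps: int = 2500,
) -> int:
    """Pick the next send bitrate from simple network measurements.

    All thresholds and step sizes here are illustrative assumptions.
    """
    if loss_rate > 0.10 or rtt_ms > 400:
        # Congestion: back off by 30% so queues can drain.
        target = current_kbps * 70 // 100
    elif loss_rate < 0.02 and rtt_ms < 150:
        # Healthy network: probe upward by 5%.
        target = current_kbps * 105 // 100
    else:
        # Borderline conditions: hold steady.
        target = current_kbps
    # Keep the result inside the allowed range.
    return max(min_kbps, min(max_kbps, target))


# Feed a short series of (loss rate, round-trip time) measurements.
bitrate = 1000
for loss, rtt in [(0.00, 80), (0.01, 90), (0.15, 300), (0.05, 200)]:
    bitrate = next_bitrate_kbps(bitrate, loss, rtt)
    print(f"loss={loss:.0%} rtt={rtt}ms -> {bitrate} kbps")
```

The asymmetric steps (a large decrease, a small increase) are one plausible design choice: reacting quickly to congestion protects latency, while cautious increases avoid oscillation. Production systems typically rely on more sophisticated bandwidth estimation.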

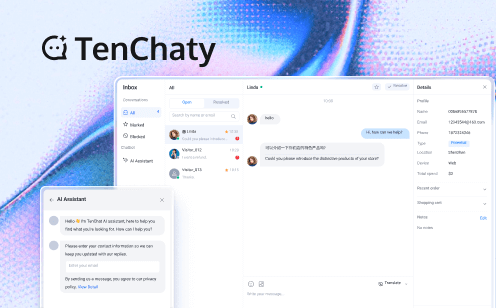

1. Online Education Tutoring 📚

In online education, real-time interaction and instant feedback are key to improving learning outcomes. With GPT-4o combined with RTC technology, teachers and students can communicate in real time through video and voice while GPT-4o provides intelligent assistance in the background. For example, students can ask GPT-4o questions at any time during class and receive immediate answers that supplement the teacher's explanations. Based on each student's learning progress and needs, GPT-4o can also provide personalized tutoring suggestions and learning resources. After students submit their assignments, GPT-4o can quickly grade them and provide feedback, allowing teachers to focus more on teaching.

2. Virtual Conference Assistant 📝

In virtual meetings, GPT-4o and RTC technology can provide intelligent assistance to participants and improve meeting efficiency. For example, GPT-4o can record meeting content in real time and automatically generate summaries and to-do items after the meeting. In multilingual meetings, GPT-4o can provide simultaneous interpretation, helping participants who speak different languages communicate without barriers. Based on the meeting content, GPT-4o can also remind participants of important matters and follow-up tasks during or after the meeting.

3. Museum Guide 🗺️

Combining GPT-4o with RTC technology can give visitors a highly interactive and personalized museum tour. Visitors can ask GPT-4o questions by voice and receive detailed information about exhibits. With multilingual support, GPT-4o can serve visitors from different countries and regions, providing personalized tour suggestions and background knowledge.

4. Assistance for Visually Impaired People 👀

Visually impaired people need a great deal of assistive information in their daily lives. They can ask GPT-4o about routes and their surroundings through voice commands and receive real-time voice navigation. When they photograph text or nearby objects with a camera, GPT-4o can recognize the content and describe it to them.

5. Virtual Idol Live Streaming 🎤

In the entertainment field, GPT-4o can make virtual idols more lifelike. For example, virtual idols can host real-time interactive live streams in which the audience interacts with them through voice or text, and GPT-4o generates responses based on the audience's questions and feedback. Virtual idols can even customize live content based on the audience's interests and preferences, such as impromptu performances and Q&A sessions.

6. Online Murder Mystery Games 🎮

Players can participate in real-time interactive murder mystery games online and enjoy an immersive story experience. GPT-4o can play multiple roles in the game, providing dynamic dialogue and plot development. Based on players' choices and interactions, GPT-4o can also adjust the direction of the plot in real time, offering multiple endings.

Tencent RTC's AIGC Real-Time Audio and Video Solution 🎉

Tencent RTC offers a one-stop AIGC solution based on real-time audio and video technology for large models. It helps large model vendors build real-time audio and video interaction capabilities, allowing users to interact with AI in real time through voice and video. This solution can also deliver audio conversation features similar to those of GPT-4o.

Tencent RTC provides a fully encapsulated SDK that supports flexible modular assembly and covers functions such as real-time audio and video and real-time messaging. It also supports fast API calls and offers ready-to-use scenario-based demos. For enterprises and developers who want to quickly validate new scenarios, this solution can significantly reduce development time.

If you have any questions or need assistance online, our support team is always ready to help. Please feel free to Contact Us or join us on Telegram.

Startup Enterprise Plan 💰

The Tencent RTC Startup Enterprise Plan aims to support startups in all fields. We offer up to $3,000 in funding for eligible startup companies! In addition, our technical support team will provide comprehensive support to ensure smooth use of our products and services, helping you save significant time, labor, and capital.

Fill out the Form, and Tencent RTC Startup Plan will set sail with you.