In the high-octane world of entertainment, a quiet revolution is taking place. They don't age, they don't get tired, and they can speak 30 languages fluently. They are AI Idols—virtual entertainers powered by artificial intelligence, advanced motion capture, and real-time rendering.

From the holographic concerts of Hatsune Miku to the hyper-realistic K-pop group MAVE:, the line between digital code and human celebrity is blurring. But this isn't just a cultural novelty; it is a multi-billion dollar shift in how content is created, consumed, and monetized.

This guide breaks down the technology, business models, and creation process behind AI idols. Whether you are a developer, a marketer, or a fan trying to understand the future of fame, it covers what you need to know.

1. What is an AI Idol? Defining the Digital Star

Before diving into the technology, we must establish a clear taxonomy. The term "AI Idol" is often used as a catch-all, but industry insiders distinguish between three distinct categories.

The Virtual Idol (The "Puppet" Model)

- Definition: A 3D or 2D avatar rigged to move, but controlled entirely by a human actor in real-time using motion capture (mocap) suits.

- Key Feature: The voice and personality are human; the appearance is digital.

- Example: PLAVE. This K-pop boy group consists of real humans wearing mocap suits. Fans love them because the "humanity" (humor, mistakes, breathing) shines through the avatar.

The Hybrid Idol

- Definition: A real human group that has digital counterparts (or "twins").

- Key Feature: Used for storytelling and lore expansion.

- Example: aespa and their "ae" counterparts. The human members perform on stage, while their digital twins appear in music videos and AR apps.

The True AI Idol (The "Generative" Model)

- Definition: An entity where the voice, movement, and sometimes even the personality are generated by algorithms.

- Key Feature: Can scale infinitely. A true AI idol can chat with 10,000 fans simultaneously, offering a unique conversation to each one.

- Example: MAVE: (mostly). While they rely on human choreographers, their voices and faces are deeply integrated with generative deepfake and neural rendering tech.

2. The Evolution: From Synthesizers to Neural Networks

The road to the modern AI idol was paved by nearly two decades of innovation, which fall into three eras.

Era 1: The Vocaloid Revolution (2007–2016)

It started with Hatsune Miku. Developed by Crypton Future Media using Yamaha's Vocaloid software, Miku was not an AI. She was a voice synthesizer—an instrument.

- Legacy: Miku proved that fans could fall in love with a character that had no "soul." She filled stadiums as a hologram, establishing the commercial viability of virtual concerts.

Era 2: The VTuber Boom (2016–2020)

Kizuna AI coined the term "Virtual YouTuber." This era democratized the tech. Using basic facial tracking (often just an iPhone), content creators could become anime characters.

- Legacy: This era introduced the importance of live interaction. Fans didn't just want pre-recorded songs; they wanted to chat and interact in real time.

Era 3: The Metahuman & Deep Learning Era (2021–Present)

With the release of Unreal Engine 5 and MetaHuman Creator, photorealism became accessible. Simultaneously, AI voice synthesis (TTS) became more emotionally expressive and, in short clips, often hard to distinguish from human speech.

- Current State: We are now seeing "Hyper-Real" idols like Eternity and Superkind, who look so realistic they often trick the casual observer.

3. The Heavy Hitters: Case Studies of Top AI Groups

To understand the market, we must analyze the leaders.

PLAVE: The Kings of "Live" Immersiveness

PLAVE is currently the most successful example of the "Virtual Avatar" model. Managed by VLAST, their success lies in their livestreaming technology.

- Strategy: They stream multiple times a week. The members (human performers) play games, sing live, and fix technical glitches on stream. This "breaking the fourth wall" creates immense trust and relatability.

- Tech: High-fidelity motion capture with very low-latency rendering, allowing for real-time dance battles.

MAVE:: The Corporate Supergroup

Created by Metaverse Entertainment (a Kakao/Netmarble joint venture), MAVE: represents the high-budget, corporate approach.

- Strategy: Flawless visuals. Their debut music video "Pandora" hit millions of views instantly. They act as brand ambassadors for games and products.

- Tech: They utilize AI voice synthesis for multilingual support, allowing the members to speak to fans in English, Korean, and Japanese seamlessly.

K/DA: The Gaming Crossover

While technically a promotional tool for League of Legends, K/DA showed the music industry that virtual groups could chart on Billboard: their debut single "POP/STARS" reached No. 1 on the World Digital Song Sales chart. They used real pop stars (like Madison Beer and Soyeon) for voices but established the "visual standard" for the groups that followed.

4. The Tech Stack: How an AI Idol is Built

Building an AI idol requires a convergence of three complex technology pipelines: Visuals, Audio, and Brain.

A. The Visual Pipeline (The Body)

- Modeling: Most high-end idols use Unreal Engine 5 with MetaHuman Identity. This allows for skin texture simulation, subsurface scattering (how light passes through ears/fingers), and realistic hair physics.

- Rigging: The model is "boned," or rigged, so that it can move. For the face, the ARKit standard defines 52 blend shapes (such as jawOpen), which is a common baseline for realistic talking.

- Rendering: For live idols, this must be Real-Time Rendering. Pre-recorded music videos can use Offline Rendering (Ray Tracing) for higher quality.
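
To make the rigging step more concrete, here is a minimal sketch of how blend shapes are typically combined: each shape stores a per-vertex offset from the neutral face, and the final pose is the neutral mesh plus the weighted sum of those offsets. The two-vertex "mesh" and the `apply_blend_shapes` helper are illustrative assumptions, not engine code; real engines do this on the GPU across thousands of vertices.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blend_shapes(
    base: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Return the base vertices offset by the weighted sum of shape deltas."""
    result = [list(vertex) for vertex in base]
    for name, weight in weights.items():
        w = min(max(weight, 0.0), 1.0)  # clamp to the usual 0..1 range
        shape = deltas.get(name)
        if shape is None or w == 0.0:
            continue  # unknown or inactive shape contributes nothing
        for i, (dx, dy, dz) in enumerate(shape):
            result[i][0] += w * dx
            result[i][1] += w * dy
            result[i][2] += w * dz
    return [tuple(vertex) for vertex in result]


# Toy data (made up): the "jawOpen" shape pulls the lower vertex down by one unit.
base_mesh = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]
shape_deltas = {"jawOpen": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]}

half_open = apply_blend_shapes(base_mesh, shape_deltas, {"jawOpen": 0.5})
```

A face tracker would supply a fresh `weights` dictionary every frame, so the same function can drive the avatar's mouth, brows, and eyes from live capture data.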

B. The Audio Pipeline (The Voice)

- Voice Conversion (RVC): Retrieval-based Voice Conversion is currently one of the most popular methods. A human sings into a microphone, and an AI model "skins" the voice to sound like the character in real time.

- Text-to-Speech (TTS): For fully autonomous idols, LLMs generate text which is fed into emotional TTS engines (like ElevenLabs or proprietary solutions) to generate speech.

C. The Intelligence Pipeline (The Brain)

- LLM Integration: The idol needs a personality. Developers use Large Language Models (like GPT-4 or Llama 3) fine-tuned on a specific "Character Bible."

- Memory Vector Databases: To make the idol "remember" fans, interaction history is stored in a vector database, allowing the AI to say, "Hey, you're back! How was your exam last week?"
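
The memory idea can be sketched in a few lines. The example below is a simplified stand-in, not a production design: `embed` uses plain word counts where a real system would call an embedding model, `FanMemory` is an in-memory dictionary where a real system would use a vector database, and the character "Nova" and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class FanMemory:
    """Per-fan store of past notes, searched by similarity to a query."""

    def __init__(self) -> None:
        self._notes: Dict[str, List[Tuple[str, Counter]]] = {}

    def remember(self, fan_id: str, note: str) -> None:
        self._notes.setdefault(fan_id, []).append((note, embed(note)))

    def recall(self, fan_id: str, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        scored = [(cosine(q, vec), note) for note, vec in self._notes.get(fan_id, [])]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [note for score, note in scored[:k] if score > 0.0]


def build_prompt(character_bible: str, memories: List[str], message: str) -> str:
    """Combine persona, recalled memories, and the new message into one prompt."""
    memory_block = "\n".join(f"- {m}" for m in memories) or "- (nothing yet)"
    return (
        f"{character_bible}\n\nWhat you remember about this fan:\n"
        f"{memory_block}\n\nFan says: {message}\nReply in character:"
    )


memory = FanMemory()
memory.remember("fan_42", "I have a chemistry exam next week")
memory.remember("fan_42", "I love the debut single")

message = "my chemistry exam is over"
recalled = memory.recall("fan_42", message, k=1)
prompt = build_prompt("You are Nova, a cheerful cyberpunk DJ.", recalled, message)
```

The resulting `prompt` would then be sent to whichever LLM the idol runs on. Keeping retrieval separate from generation means the memory store can be swapped without touching the persona text.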

5. The Business Case: Why Companies Prefer Pixels

Why are record labels investing billions in AI?

- Risk Mitigation: The "Scandal-Free" Guarantee. AI idols do not get DUIs, they do not have secret dating scandals, and they do not get exhausted. In an industry where one scandal can sink a stock price, this safety is invaluable.

- Infinite Scalability: A human idol can only be in one city at a time. An AI idol can perform a concert in Roblox, hold a fan meet in Seoul, and shoot a commercial in New York simultaneously.

- Intellectual Property Ownership: When a human artist leaves a label, they take their talent with them. With an AI idol, the agency owns 100% of the IP, the voice, and the image forever.

6. The Fan Ecosystem: Parasocial Relationships 2.0

The success of AI idols relies on the gamification of fandom.

The "Fancall" Evolution

In traditional K-pop, fans buy hundreds of albums for a chance at a 1-minute video call. With AI idols, that scarcity is purely artificial: companies can sell "private chat time" to millions of fans simultaneously. The AI can remember the fan's name and details, creating a hyper-personalized relationship that feels more "real" than a hurried 1-minute call with a tired human star.

24/7 Availability

Fans today crave constant content. AI idols can stream 24/7, react to new music videos instantly, and wish fans goodnight every single night. This creates a feedback loop of engagement that human idols simply cannot physically sustain.

7. Ethical Dilemmas & The "Uncanny Valley"

It is not all profit and pixels. The rise of AI idols brings significant ethical questions.

- The Displacement of Artists: Musicians and dancers fear losing jobs to cheaper, digital alternatives.

- Deepfakes and Consent: The technology used to create AI idols is the same used for malicious deepfakes. Clear legal frameworks are needed regarding "digital likeness rights."

- The Uncanny Valley: If an idol looks almost human but moves slightly wrong, it evokes a feeling of revulsion (the Uncanny Valley). Successful idols often adopt a slightly stylized (anime-esque) look to avoid triggering this response.

8. Enhancing AI Idols with Real-Time Communication

The difference between a "video file" and a "Virtual Idol" is interactivity. To succeed, an AI idol must be able to perform live concerts, host fan meetings, and chat with millions of users instantly. This requires robust Real-Time Communication (RTC) infrastructure.

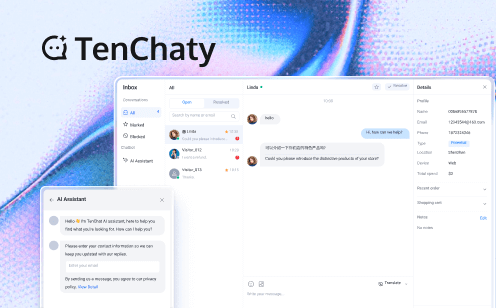

Tencent RTC offers the specific tools required to bring these digital stars to life.

Live Performance (Low Latency Streaming)

When a virtual idol performs a concert, the sync between the motion capture data and the video feed must be perfect.

- Tencent RTC Live Streaming: Provides ultra-low latency broadcasting. This ensures that when a fan types "Do a heart sign!" in the chat, the idol can react almost instantly. High-quality audio transmission is critical for concerts, and Tencent RTC supports high-fidelity audio profiles essential for music performance.
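
One common way to keep motion capture and video in step is to timestamp both streams and, for each video frame, pick the mocap sample closest in time. The sketch below illustrates that idea only; it is an assumption about how an application might align its own data, not a description of how Tencent RTC works internally, and the sample rates are made up.

```python
from bisect import bisect_left
from typing import List, Sequence


def nearest_index(timestamps_ms: Sequence[int], target_ms: int) -> int:
    """Index of the timestamp closest to target_ms (input must be sorted)."""
    if not timestamps_ms:
        raise ValueError("no mocap frames captured yet")
    pos = bisect_left(timestamps_ms, target_ms)
    if pos == 0:
        return 0
    if pos == len(timestamps_ms):
        return len(timestamps_ms) - 1
    before, after = timestamps_ms[pos - 1], timestamps_ms[pos]
    # On a tie, prefer the earlier sample.
    return pos if after - target_ms < target_ms - before else pos - 1


def align(mocap_ts_ms: Sequence[int], video_ts_ms: Sequence[int]) -> List[int]:
    """For each video frame time, return the index of the best mocap sample."""
    return [nearest_index(mocap_ts_ms, t) for t in video_ts_ms]


# Illustrative numbers: mocap every 10 ms, video frames roughly every 33 ms.
mocap_ts = [0, 10, 20, 30, 40, 50, 60, 70]
video_ts = [0, 33, 67]
pairs = align(mocap_ts, video_ts)  # mocap sample index per video frame
```

Because the lookup is a binary search, it stays cheap even when the mocap buffer holds several seconds of samples.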

Massive Interactive Fan Meetings

- Voice Chat Room: Using Tencent RTC's Voice Chat Room solution, an AI idol can host a room with thousands of listeners. Fans can "raise their hand" to come on stage and speak directly to the avatar.

- Chat: For the text-based component, Tencent's Chat SDK (formerly IM) handles millions of concurrent messages, enabling features like "Danmaku" (bullet screen comments) overlaying the idol's performance.

One-on-One AI Video Calls

- Conversational AI & Video Call: Imagine a fan winning a private meet-and-greet. Tencent RTC's Video Call API allows developers to integrate the AI's video feed directly into a mobile app, providing HD video quality even on unstable networks. This creates an intimate, face-to-face experience between the fan and the digital entity.

By leveraging Tencent RTC, developers can move beyond pre-rendered videos and create a living, breathing digital celebrity that interacts in real-time.

9. Step-by-Step Guide: Launching Your Own Virtual Avatar

Do you want to enter the market? Here is the roadmap.

Step 1: Define the Archetype

Don't just make "a pretty girl/boy." Define the niche. Is it a Cyberpunk DJ? A High-Fantasy Bard? A K-Pop Trainee? The backstory is more important than the polygons.

Step 2: Choose Your Engine

- Beginner: VRoid Studio (Free, anime style).

- Pro: Unreal Engine 5 + MetaHuman (Photorealistic).

Step 3: Set Up the Rig

Connect your avatar to a motion capture solution. For starters, an iPhone with Face ID (a TrueDepth camera) running the Live Link Face app is sufficient. For professional body tracking, consider suits like Rokoko or Xsens.

Step 4: Integrate the Voice

Decide between a Voice Actor (Human-in-the-loop) or AI Voice. If AI, use RVC models to train a unique voice skin that matches your character design.

Step 5: Go Live

Use OBS Studio to composite your avatar into a scene. Connect to a streaming platform. Use Tencent RTC if you are building a proprietary app to handle the interactive chat and video streams.

10. Frequently Asked Questions

Q1: How can I make my AI idol interact with fans in real-time without lag?

A: Latency breaks the illusion of reality. You should use Tencent RTC's Live Streaming solution, which is designed for ultra-low latency. This allows the motion capture data and voice to reach the viewer almost instantly, enabling the idol to respond to chat comments in real time.

Q2: What is the best way to handle thousands of fans chatting at once during a virtual concert?

A: Managing massive chat volume requires a robust messaging backend. Tencent RTC's Chat SDK supports high-concurrency message handling, allowing you to implement features like message throttling, priority display for VIPs, and bullet screen (Danmaku) overlays without crashing your app.
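
As a rough illustration of what message throttling and VIP priority mean in practice, here is an application-side sketch. It does not use or represent the Chat SDK's API; `ChatGate`, its method names, and the 2-second cooldown are all hypothetical choices for the example.

```python
import heapq
import itertools
from typing import Dict, List, Tuple


class ChatGate:
    """Per-fan cooldown plus a priority queue that shows VIP messages first."""

    def __init__(self, min_interval_s: float = 2.0) -> None:
        self.min_interval_s = min_interval_s  # assumed cooldown, tune per event
        self._last_seen: Dict[str, float] = {}
        self._heap: List[Tuple[int, int, str]] = []
        self._order = itertools.count()  # tie-breaker keeps arrival order

    def submit(self, fan_id: str, text: str, now_s: float, vip: bool = False) -> bool:
        """Queue a message; return False if the fan is still cooling down."""
        last = self._last_seen.get(fan_id)
        if last is not None and now_s - last < self.min_interval_s:
            return False
        self._last_seen[fan_id] = now_s
        priority = 0 if vip else 1  # lower number pops first
        heapq.heappush(self._heap, (priority, next(self._order), text))
        return True

    def next_batch(self, limit: int) -> List[str]:
        """Pop up to `limit` messages for display, VIPs first."""
        batch: List[str] = []
        while self._heap and len(batch) < limit:
            batch.append(heapq.heappop(self._heap)[2])
        return batch


gate = ChatGate(min_interval_s=2.0)
accepted_first = gate.submit("fan_a", "hi!", now_s=0.0)
accepted_spam = gate.submit("fan_a", "hi again!", now_s=1.0)  # inside cooldown
gate.submit("fan_b", "VIP hello", now_s=1.5, vip=True)
gate.submit("fan_c", "first!", now_s=1.6)
batch = gate.next_batch(limit=2)
```

Calling `next_batch` once per render tick caps how many bullet comments reach the screen, regardless of how many messages arrive.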

Q3: Can I create a feature where fans can have a private video call with the AI idol?

A: Yes. You can implement this using Tencent RTC's Video Call API. By feeding the real-time render of your AI character into the video stream, fans can have a 1-on-1 face-to-face call with the idol, complete with high-definition audio and video stability.

Q4: How do I enable voice interactions in a virtual fan club app?

A: You can create audio-based fan spaces using Tencent RTC's Voice Chat Room. This allows the AI idol to act as a host, broadcasting music or talking, while fans can listen in, request songs, or be invited "on stage" to speak using their microphones.
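
The "raise hand, then come on stage" flow boils down to a small piece of state that the app has to track. The sketch below models only that logic under assumed names (`StageQueue`, `raise_hand`, `invite_next`); the actual audio routing would be handled by the RTC layer and is not shown.

```python
from collections import deque
from typing import Deque, List, Optional


class StageQueue:
    """First-come, first-served queue of fans waiting to speak on stage."""

    def __init__(self, max_speakers: int) -> None:
        self.max_speakers = max_speakers
        self.waiting: Deque[str] = deque()
        self.on_stage: List[str] = []

    def raise_hand(self, fan_id: str) -> None:
        # Ignore duplicates so one fan cannot occupy several slots.
        if fan_id not in self.waiting and fan_id not in self.on_stage:
            self.waiting.append(fan_id)

    def invite_next(self) -> Optional[str]:
        """Move the next waiting fan on stage, if a seat is free."""
        if not self.waiting or len(self.on_stage) >= self.max_speakers:
            return None
        fan_id = self.waiting.popleft()
        self.on_stage.append(fan_id)
        return fan_id

    def leave_stage(self, fan_id: str) -> None:
        if fan_id in self.on_stage:
            self.on_stage.remove(fan_id)


stage = StageQueue(max_speakers=1)
stage.raise_hand("mina")
stage.raise_hand("jun")
stage.raise_hand("mina")  # duplicate, ignored

first = stage.invite_next()    # "mina" takes the only seat
blocked = stage.invite_next()  # None, stage is full
stage.leave_stage("mina")
second = stage.invite_next()   # "jun" is next in line
```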

Q5: Is it possible to integrate an AI chatbot that speaks with the idol's voice?

A: Absolutely. You can combine a Large Language Model (LLM) for text generation with Tencent RTC's Conversational AI capabilities. This allows the system to process fan speech, generate a text response, convert it to the idol's voice, and stream it back in real-time.
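
The loop described above (fan speech in, text reply generated, idol voice out) can be sketched as four pluggable stages. Everything here is an assumption for illustration: `VoiceReplyPipeline` is a hypothetical class, and the lambdas are trivial stubs standing in for a real speech recognizer, LLM, TTS or voice conversion engine, and RTC audio sender.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class VoiceReplyPipeline:
    """Chain of speech-to-text, text generation, text-to-speech, and sending."""

    transcribe: Callable[[bytes], str]   # fan audio -> fan text
    generate: Callable[[str], str]       # fan text -> idol reply text
    synthesize: Callable[[str], bytes]   # reply text -> idol voice audio
    send_audio: Callable[[bytes], None]  # push audio to the live stream

    def handle(self, fan_audio: bytes) -> str:
        fan_text = self.transcribe(fan_audio)
        reply_text = self.generate(fan_text)
        self.send_audio(self.synthesize(reply_text))
        return reply_text


# Stub stages so the sketch runs without any external service.
sent: List[bytes] = []
pipeline = VoiceReplyPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    generate=lambda text: f"Thanks for saying '{text}'!",
    synthesize=lambda text: text.encode("utf-8"),
    send_audio=sent.append,
)

reply = pipeline.handle(b"hello")
```

Passing each stage in as a callable keeps the loop testable offline and lets you swap one vendor or model for another without changing the flow.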