Dive deep into scalable chat architecture design. The choice between REST and WebSocket protocols shapes the latency and scalability of any real-time application. Traditional HTTP/REST is a poor fit for message delivery because every exchange is client-initiated and stateless: the server cannot push a new message until the client asks for it. Learn why WebSockets are the essential backbone for instant message delivery, typing indicators, and presence updates. This technical guide explains why a hybrid API architecture, with REST for data retrieval and WebSockets for real-time events, delivers the best performance. Discover how the Tencent RTC (TRTC) Chat API abstracts these complexities, providing guaranteed message delivery and ordering consistency on robust, low-latency infrastructure so that developers can focus on application features.

Building a modern chat application requires more than just an interface; it demands a resilient, low-latency architecture capable of supporting millions of concurrent users globally. The foundational decision for any developer lies in selecting the correct communication protocol: the standard Request-Response paradigm of REST APIs or the persistent, bidirectional channel offered by WebSockets.

Architectural Primitives: REST vs. WebSocket in Chat Design

The architecture of a chat system differs fundamentally from that of a standard web application built around Create, Read, Update, and Delete (CRUD) operations. The primary problem with using traditional HTTP/REST for core messaging is that it is inefficient for real-time communication. REST follows a client-initiated request/response model and is stateless: the server can answer requests but cannot push data to a client on its own. To simulate real-time message receipt, developers must fall back on polling or long polling. Short polling delays each message until the next poll and spends most requests on empty responses; long polling shortens that delay but still needs a new request after every response and ties up server connections while requests wait. Both approaches add latency and overhead and waste server resources.
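To put rough numbers on the cost of short polling, here is a minimal Python model. It assumes polls fire at fixed times 0, interval, 2 × interval, and so on, and it ignores network round-trip time, so it measures only the extra waiting that polling itself adds. The function names are illustrative.

```python
import math
from typing import List


def short_poll_delays(arrivals: List[float], interval: float) -> List[float]:
    """Extra delay each message suffers under short polling.

    A message that reaches the server at time t is only seen at the next poll,
    i.e. the first multiple of `interval` that is >= t.
    """
    delays = []
    for t in arrivals:
        next_poll = math.ceil(t / interval) * interval
        delays.append(next_poll - t)
    return delays


def short_poll_requests(duration: float, interval: float) -> int:
    """Number of poll requests issued over `duration` seconds (including t = 0)."""
    return int(duration // interval) + 1
```

With a 2-second interval, `short_poll_delays([0.5, 2.0, 3.5], 2.0)` gives `[1.5, 0.0, 0.5]`, and `short_poll_requests(60, 2)` gives 31: about 30 requests per minute even if nobody writes anything, while each message waits up to 2 seconds (about 1 second on average when arrivals are spread evenly). A server push over a WebSocket avoids both costs because each message is sent as soon as it exists.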

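Conversely, WebSockets are engineered specifically for continuous, instantaneous data exchange. A WebSocket session begins as an ordinary HTTP/1.1 request carrying an "Upgrade: websocket" header; once the server answers with "101 Switching Protocols", the same TCP connection becomes a single, persistent, stateful, full-duplex channel. Either side can then send messages at any time, each framed with as little as 2 bytes of header (6 bytes for client-to-server frames, which must be masked), instead of repeating full HTTP headers for every request. This drastically reduces latency and overhead, making WebSockets the non-negotiable choice for core real-time message sending and receiving, as well as for ephemeral updates like typing indicators.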
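The handshake and framing behavior described above is specified in RFC 6455. The Python sketch below shows two server-side pieces: deriving the Sec-WebSocket-Accept header from the client's Sec-WebSocket-Key, and encoding an unmasked server-to-client text frame. It is illustrative only; it omits client-frame unmasking, fragmentation, control frames, and the socket handling that a real server or library provides.

```python
import base64
import hashlib
import struct

# Fixed GUID defined in RFC 6455, section 1.3.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(sec_websocket_key: str) -> str:
    """Value the server returns in Sec-WebSocket-Accept of its 101 response."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def text_frame(payload: str) -> bytes:
    """Encode a single, unmasked text frame (server-to-client direction)."""
    data = payload.encode("utf-8")
    header = bytes([0x81])  # FIN bit set, opcode 0x1 = text
    n = len(data)
    if n < 126:
        header += bytes([n])  # length fits in 7 bits: 2-byte header in total
    elif n < 65536:
        header += bytes([126]) + struct.pack("!H", n)  # 16-bit extended length
    else:
        header += bytes([127]) + struct.pack("!Q", n)  # 64-bit extended length
    return header + data
```

Using the example key from RFC 6455, `accept_key("dGhlIHNhbXBsZSBub25jZQ==")` returns `"s3pPLMBiTxaQ9kYGzzhZRbK+xOo="`, and `text_frame("hi")` is just 4 bytes: `b"\x81\x02hi"`.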

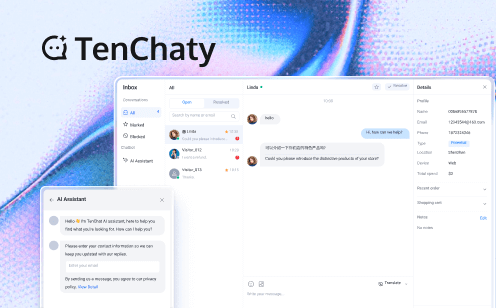

A production-grade chat system, however, rarely relies on a single protocol. It uses a hybrid design: REST APIs handle stateless CRUD operations such as user authentication, fetching profile details, or retrieving specific segments of message history (pagination), while WebSockets handle the actual real-time message exchange and presence management. TRTC Chat APIs are designed to fit this hybrid model, providing simple interfaces for both stateless data management and stateful, persistent messaging.
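On the REST side of the hybrid model, history is commonly paged with a cursor rather than an offset. The Python sketch below assumes a hypothetical endpoint such as GET /conversations/{id}/messages?before=<seq>&limit=<n> and uses an in-memory list in place of a database query; the names are illustrative, not part of any TRTC API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Message:
    seq: int      # server-assigned, strictly increasing within a conversation
    sender: str
    text: str


def fetch_history(
    messages: List[Message], before: Optional[int], limit: int
) -> Tuple[List[Message], Optional[int]]:
    """Return one page of history (oldest to newest) plus the cursor for the next older page.

    `messages` must be sorted by seq ascending; `before=None` starts from the newest.
    The returned cursor is None once no older messages remain.
    """
    if limit <= 0:
        return [], None
    eligible = [m for m in messages if before is None or m.seq < before]
    page = eligible[-limit:]
    next_cursor = page[0].seq if len(eligible) > len(page) else None
    return page, next_cursor
```

Keying pages on the sequence number keeps them stable while new messages keep arriving over the WebSocket; an offset-based page would shift every time a live message was appended.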

Integrating Core Features: Rich Media, Typing Indicators, and Presence

Modern chat is defined by its ability to handle more than plain text. A robust chat API must accommodate rich media (images, files, audio), deliver push notifications promptly, and manage detailed presence indicators (online/offline status, last active time, typing status). Handling these features well depends on separating data types by size and latency requirements. Rich media is typically uploaded through separate file storage APIs over HTTP (often with MIME type detection to classify the content), which keeps large binary payloads off the low-latency message channel. The WebSocket connection then carries only text, metadata, and a reference to the stored file, so it stays free for the quick delivery of critical messages. TRTC provides dedicated mechanisms for managing these separate data flows efficiently.
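The offload pattern can be sketched in Python as follows: the file is assumed to be already uploaded to object storage (the storage URL is hypothetical), and only a small JSON event describing it travels over the WebSocket. The event shape is an illustrative assumption, not TRTC's message format. Note that `mimetypes.guess_type` infers the type from the file extension only; production systems may also inspect the file contents.

```python
import json
import mimetypes


def media_message(conversation_id: str, filename: str, storage_url: str, size_bytes: int) -> str:
    """Build the small event sent over the WebSocket after an HTTP upload.

    The binary data stays in object storage; the socket carries only metadata.
    """
    mime_type, _encoding = mimetypes.guess_type(filename)
    return json.dumps({
        "type": "media",
        "conversation_id": conversation_id,
        "file": {
            "name": filename,
            "url": storage_url,
            # Fall back to a generic binary type when the extension is unknown.
            "mime_type": mime_type or "application/octet-stream",
            "size": size_bytes,
        },
    })
```

For a 5 MB photo, the event sent on the socket is under 300 bytes; the recipient's client downloads the image from the URL only when it needs to render it.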

Scalability and Reliability: The Tencent RTC Advantage

Achieving massive scale requires overcoming major architectural hurdles, including horizontal scalability, load distribution, and reliable message queuing. A system that cannot scale horizontally by adding more servers risks overload and outages during traffic spikes. Large architectures therefore commonly adopt message brokers such as Apache Kafka or RabbitMQ to absorb high message throughput, decouple services, and keep data flowing reliably and in order between microservices. These ordering guarantees are usually scoped: Kafka, for example, preserves order only within a partition, so chat messages are typically keyed by conversation ID to keep each conversation in order.
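The keying idea can be illustrated with an in-memory stand-in for a partitioned topic. This Python sketch is a simplification: it uses CRC32 as the stable hash, whereas Kafka's default partitioner for keyed records uses murmur2, and the class and function names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for(conversation_id: str, num_partitions: int) -> int:
    """Map a conversation to a partition with a stable hash (CRC32 in this sketch)."""
    return zlib.crc32(conversation_id.encode("utf-8")) % num_partitions


class PartitionedLog:
    """Append-only lists per partition, standing in for a broker topic."""

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        self.partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)

    def publish(self, conversation_id: str, payload: str) -> int:
        p = partition_for(conversation_id, self.num_partitions)
        self.partitions[p].append((conversation_id, payload))
        return p

    def read_conversation(self, conversation_id: str) -> List[str]:
        """All messages of one conversation live in one partition, in publish order."""
        p = partition_for(conversation_id, self.num_partitions)
        return [payload for cid, payload in self.partitions[p] if cid == conversation_id]
```

Because every message of a conversation hashes to the same partition, a single consumer of that partition sees them in publish order, while different conversations spread across partitions and can be processed in parallel.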

Tencent RTC abstracts this entire, complex microservices architecture. Using proprietary protocols and a global infrastructure, TRTC is built to absorb scaling pressure without developer intervention. The platform handles load distribution internally and guarantees message delivery acknowledgments (sent, delivered, read) and ordering consistency (messages delivered in the order they were sent), even across global networks with variable connectivity. As a result, application developers can reach high-scale reliability without designing and maintaining their own message queue and microservice layers.
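On the client, the sent, delivered, and read acknowledgments are usually rendered as a status that only moves forward, because acknowledgments can arrive out of order (a read receipt may overtake a delivery receipt). The Python sketch below is a generic illustration of that rule under this assumption; it is not TRTC's API, and the names are hypothetical.

```python
from enum import IntEnum
from typing import Dict


class Status(IntEnum):
    SENDING = 0    # queued locally, no acknowledgment yet
    SENT = 1       # server acknowledged receipt
    DELIVERED = 2  # recipient device acknowledged
    READ = 3       # recipient opened the conversation


class ReceiptTracker:
    def __init__(self) -> None:
        self.status: Dict[str, Status] = {}

    def update(self, message_id: str, new_status: Status) -> Status:
        """Apply an acknowledgment, never moving a message's status backwards."""
        current = self.status.get(message_id, Status.SENDING)
        updated = max(current, new_status)
        self.status[message_id] = updated
        return updated
```

If a READ acknowledgment is applied before a late DELIVERED one, the message stays at READ.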

Proposed Q&A

Q: Is REST suitable for sending messages in a chat application?

A: Not for the real-time path. A client can send an individual message with an HTTP POST, but REST is client-initiated and stateless, so the server has no way to push new messages to recipients. Receiving them would require polling or long polling, which add latency and high overhead. Core messaging therefore requires a persistent, bidirectional protocol like WebSocket.

Q: What is the main architectural difference between a chat API and a standard REST API?

A: A standard REST API is stateless and uses a request/response model, typically over HTTP. A chat API's core real-time component uses WebSockets, which are stateful and establish a persistent, bidirectional connection for low-latency communication.

Q: How does TRTC handle message persistence when a user is offline?

A: TRTC stores messages on the server while the recipient is offline, alerts the recipient through push notifications, and delivers the stored messages when the client reconnects.
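A common way to implement this kind of store-and-forward behavior is a per-user sequence number: the server keeps messages until the client reports the last sequence it processed. The Python sketch below illustrates that generic technique under this assumption; it does not describe TRTC's internal storage, and the class name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class OfflineInbox:
    """Server-side store-and-forward keyed by a per-user sequence number."""

    def __init__(self) -> None:
        self.next_seq: Dict[str, int] = defaultdict(lambda: 1)
        self.pending: Dict[str, List[Tuple[int, str]]] = defaultdict(list)

    def store(self, user_id: str, text: str) -> int:
        seq = self.next_seq[user_id]
        self.next_seq[user_id] = seq + 1
        self.pending[user_id].append((seq, text))
        # A real system would also trigger a push notification here if the user is offline.
        return seq

    def sync(self, user_id: str, last_acked_seq: int) -> List[Tuple[int, str]]:
        """Called on reconnect: drop what the client already has, return the rest in order."""
        self.pending[user_id] = [(s, t) for s, t in self.pending[user_id] if s > last_acked_seq]
        return list(self.pending[user_id])
```

Because the client reports its last processed sequence, a reconnect after a dropped connection resends only the gap, not the whole inbox.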

Q: What authentication methods are best for securing a WebSocket chat connection?

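A: The initial WebSocket handshake is an HTTP request, so it can carry standard credentials such as a bearer token or a session cookie. Because the browser WebSocket API cannot set custom headers such as Authorization, tokens are often passed in a query parameter or in the first message instead. For the lifetime of the persistent connection, systems typically rely on short-lived (ephemeral) tokens and server-side session management, which TRTC supports for secure client-side authentication.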
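A short-lived token can be as simple as a user ID and an expiry time signed with a server-side secret and checked during the handshake. The Python sketch below uses HMAC-SHA256; the token layout and the secret are hypothetical assumptions, and this is not the format TRTC uses.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"server-side-secret"  # hypothetical; real keys never ship in client code


def _sign(body: str) -> str:
    return hmac.new(SECRET, body.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(user_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Issue a token of the form base64(user_id:expires:signature)."""
    expires = int((time.time() if now is None else now) + ttl_seconds)
    body = f"{user_id}:{expires}"
    return base64.urlsafe_b64encode(f"{body}:{_sign(body)}".encode("utf-8")).decode("ascii")


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user ID if the token is authentic and unexpired, else None."""
    try:
        raw = base64.urlsafe_b64decode(token.encode("ascii")).decode("utf-8")
        user_id, expires, sig = raw.rsplit(":", 2)
    except (ValueError, UnicodeError):  # binascii.Error is a ValueError subclass
        return None
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig, _sign(f"{user_id}:{expires}")):
        return None
    if int(expires) < (time.time() if now is None else now):
        return None
    return user_id
```

The server would call `verify_token` on the token presented at handshake time and refuse the upgrade when it returns None; keeping the lifetime short limits the damage if a token leaks, for example through logged query strings.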

Q: How does TRTC ensure message delivery order (consistency)?

A: TRTC’s scalable backend architecture leverages robust message brokers and specialized queuing mechanisms designed to ensure consistency, guaranteeing that messages are delivered to the recipient in the exact order they were sent.
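Ordering also needs care on the receiving client, since messages can reach it out of order or be retransmitted after a reconnect. A common client-side technique, sketched here in Python, is a reorder buffer keyed by a consecutive per-conversation sequence number. It assumes the server assigns such numbers and does not describe TRTC's internals; the class name is hypothetical.

```python
from typing import Dict, List


class ReorderBuffer:
    """Release messages strictly in sequence order, holding back any that
    arrive ahead of a gap until the missing ones show up."""

    def __init__(self, first_seq: int = 1) -> None:
        self.expected = first_seq
        self.held: Dict[int, str] = {}

    def receive(self, seq: int, text: str) -> List[str]:
        if seq < self.expected or seq in self.held:
            return []  # duplicate, e.g. a retransmission after reconnect
        self.held[seq] = text
        released = []
        while self.expected in self.held:
            released.append(self.held.pop(self.expected))
            self.expected += 1
        return released
```

A production client would also put a timeout on a gap and fetch the missing range through the history API instead of waiting indefinitely.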