Introduction: Why Message History is a Must-Have Feature

Message history is a core pillar of user experience and data integrity in any modern chat application. It gives users a persistent, searchable record of their conversations, letting them retrieve important information, keep context, and sync conversations across multiple devices. Without reliable message history, an application feels incomplete and quickly frustrates users, which drives churn. For developers, implementing this feature is not trivial: it requires a robust backend architecture that can persist, retrieve, and synchronize massive volumes of data while remaining fast and reliable.

Behind the Curtain: Architectural Best Practices for Message Persistence

A scalable real-time messaging system needs a carefully designed architecture for message persistence and retrieval. Its foundational building blocks typically include message brokers and the publish/subscribe (pub/sub) pattern. Message brokers act as intermediaries that queue and route messages, ensuring reliable delivery even during high-traffic periods. The pub/sub pattern is particularly effective for group chats, because a single published message can be broadcast to every subscribed recipient at once.
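To make the pub/sub idea concrete, here is a minimal in-process sketch. The `InMemoryBroker` class and its `subscribe`/`publish` methods are hypothetical names used for illustration only; a production broker would add persistence, acknowledgements, and network transport, none of which are modeled here.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A handler receives the topic name and the message payload.
Handler = Callable[[str, dict], None]


class InMemoryBroker:
    """Hypothetical in-process pub/sub broker (illustration only, not a real library).

    Every handler subscribed to a topic receives each message published to it,
    which is how one group-chat message reaches all members.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Fan the message out to current subscribers; return how many received it."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(topic, message)
        return len(handlers)


if __name__ == "__main__":
    broker = InMemoryBroker()
    inboxes = {"alice": [], "bob": [], "carol": []}
    for inbox in inboxes.values():
        # Each group member subscribes to the group's topic.
        broker.subscribe("group:team", lambda topic, msg, box=inbox: box.append(msg))
    delivered = broker.publish("group:team", {"from": "alice", "text": "hi"})
    print(delivered)  # 3
```

The sender publishes once and the broker handles the fan-out, which is what keeps group delivery cheap for the sending client.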

Beyond real-time delivery, a persistent database is essential for durably storing messages. To handle the high read/write volumes typical of chat applications, the system needs scaling strategies such as database sharding, where data is partitioned across multiple servers, and caching, which serves recent conversations quickly. Service redundancy and disaster recovery mechanisms are also critical to keep the system available and ensure messages are not lost.
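The sketch below illustrates both scaling ideas under simplifying assumptions: conversations are routed to shards by a stable hash of a conversation ID, and a small LRU cache keeps the newest messages of recently active conversations in memory. The names `shard_for` and `RecentMessageCache` are hypothetical, and plain modulo hashing is a simplification; real deployments often prefer consistent hashing so that adding a shard moves less data.

```python
import hashlib
from collections import OrderedDict
from typing import List, Optional


def shard_for(conversation_id: str, num_shards: int) -> int:
    """Route a conversation to a shard with a stable hash.

    Python's built-in hash() is salted per process, so a cryptographic digest
    is used to get the same shard on every server.
    """
    digest = hashlib.sha256(conversation_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class RecentMessageCache:
    """Hypothetical LRU cache of the newest messages per recently active conversation."""

    def __init__(self, max_conversations: int, per_conversation: int = 20) -> None:
        self.max_conversations = max_conversations
        self.per_conversation = per_conversation
        self._data: "OrderedDict[str, List[str]]" = OrderedDict()

    def append(self, conversation_id: str, message: str) -> None:
        # Re-inserting moves the conversation to the most-recently-used end.
        msgs = self._data.pop(conversation_id, [])
        msgs.append(message)
        self._data[conversation_id] = msgs[-self.per_conversation:]  # keep only the newest
        if len(self._data) > self.max_conversations:
            self._data.popitem(last=False)  # evict the least recently used conversation

    def get(self, conversation_id: str) -> Optional[List[str]]:
        """Return cached messages (oldest first), or None on a cache miss."""
        if conversation_id not in self._data:
            return None  # caller falls back to the sharded database
        self._data.move_to_end(conversation_id)
        return list(self._data[conversation_id])


if __name__ == "__main__":
    cache = RecentMessageCache(max_conversations=2, per_conversation=3)
    for i in range(5):
        cache.append("c1", f"m{i}")
    print(cache.get("c1"))  # ['m2', 'm3', 'm4']
    print(shard_for("c1", 8) == shard_for("c1", 8))  # True
```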

Offline message handling is a distinct challenge that calls for a dedicated solution. When a user is offline, messages must be queued and delivered automatically once the user reconnects. Clients must also track the last message they received and, upon reconnection, request all subsequent messages so that synchronization is seamless.
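A common way to implement the "last message received" cursor is with per-conversation sequence numbers. The sketch below assumes the server assigns each message a contiguous, 1-based sequence number and that the client stores the highest number it has seen; on reconnect it asks for everything after that number. Instead of a separate per-user offline queue, it uses one append-only log per conversation plus a client cursor, which is a swapped-in simplification. All class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    seq: int
    sender: str
    text: str


@dataclass
class ConversationLog:
    """Hypothetical server-side append-only log; each message gets the next sequence number."""
    messages: List[Message] = field(default_factory=list)

    def append(self, sender: str, text: str) -> Message:
        msg = Message(seq=len(self.messages) + 1, sender=sender, text=text)
        self.messages.append(msg)
        return msg

    def since(self, last_seq: int) -> List[Message]:
        """Everything the client has not seen yet (seq > last_seq)."""
        # Sequence numbers are 1-based and contiguous, so seq == list index + 1.
        return self.messages[last_seq:]


@dataclass
class Client:
    last_seq: int = 0  # persisted cursor: highest sequence number received
    inbox: List[Message] = field(default_factory=list)

    def sync(self, log: ConversationLog) -> int:
        """On (re)connect: pull missed messages and advance the cursor."""
        missed = log.since(self.last_seq)
        self.inbox.extend(missed)
        if missed:
            self.last_seq = missed[-1].seq
        return len(missed)


if __name__ == "__main__":
    log = ConversationLog()
    phone = Client()
    log.append("alice", "hello")
    phone.sync(log)                        # online: receives message 1
    log.append("alice", "are you there?")  # phone is offline now
    log.append("bob", "ping")
    print(phone.sync(log))  # 2
    print(phone.last_seq)   # 3
```

Because the cursor lives on the client, each device can sync independently against the same server-side log.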

The Latency vs. Feature Trade-off

A significant architectural challenge in building a chat application is the inherent tension between ultra-low latency, the defining characteristic of real-time communication, and a rich, feature-complete user experience that includes message history and search. A raw RTC setup, such as a simple WebRTC peer-to-peer connection, is optimized for speed but lacks core features like message persistence. A developer would then have to integrate a separate database, such as Firebase Cloud Firestore, and build all the search and retrieval logic by hand. This multi-vendor approach adds significant complexity, potential performance bottlenecks, and a high risk of integration errors.

A unified platform like Tencent RTC offers a clear strategic advantage by resolving this trade-off out of the box. The platform's Chat API and RTC Engine are not disparate services but parts of an integrated solution, so the platform handles both low-latency message delivery (often over WebSockets) and durable storage and retrieval in a cohesive manner. This integrated architecture spares developers the headache of stitching together a patchwork of tools, leading to a faster path to market and a more performant, reliable final product.

Tencent RTC's Comprehensive History Management

Tencent RTC provides a comprehensive set of APIs and services for managing message history. The primary retrieval APIs are getC2CHistoryMessageList for one-on-one chats and getGroupHistoryMessageList for group chats. Both support efficient pagination: developers pass the lastMsg parameter to retrieve messages page by page. The documentation recommends pulling 20 messages per page to improve loading efficiency, a detail that reflects a focus on practical performance.
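The page-by-page pattern can be sketched as a loop that feeds the last message of each page back in as the anchor for the next request. `fetch_history` below is a hypothetical in-memory stand-in, not the Tencent SDK: its signature, the integer `msg_id` ordering, and the newest-first ordering are assumptions made so the example runs on its own. Only the page size of 20 comes from the documentation cited above.

```python
from dataclasses import dataclass
from typing import Iterator, List, Optional

PAGE_SIZE = 20  # page size recommended by the documentation


@dataclass(frozen=True)
class Msg:
    msg_id: int  # assumption: a larger id means a newer message
    text: str


def fetch_history(store: List[Msg], last_msg: Optional[Msg], count: int) -> List[Msg]:
    """Hypothetical stand-in for a history call such as getC2CHistoryMessageList.

    Returns up to `count` messages older than `last_msg` (or the newest ones
    when last_msg is None), ordered newest first.
    """
    older = [m for m in store if last_msg is None or m.msg_id < last_msg.msg_id]
    older.sort(key=lambda m: m.msg_id, reverse=True)
    return older[:count]


def iter_history(store: List[Msg], page_size: int = PAGE_SIZE) -> Iterator[List[Msg]]:
    """Yield pages newest to oldest, anchoring each request on the previous page's last message."""
    last_msg: Optional[Msg] = None
    while True:
        page = fetch_history(store, last_msg, page_size)
        if not page:
            return
        yield page
        if len(page) < page_size:
            return  # a short page means the beginning of the history was reached
        last_msg = page[-1]  # oldest message on this page becomes the next anchor


if __name__ == "__main__":
    store = [Msg(i, f"m{i}") for i in range(1, 46)]  # 45 messages
    print([len(p) for p in iter_history(store)])  # [20, 20, 5]
```

Stopping on a short page saves one empty round trip. A real client would usually request the next page lazily, when the user scrolls to the top of the conversation, rather than draining the whole history eagerly.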

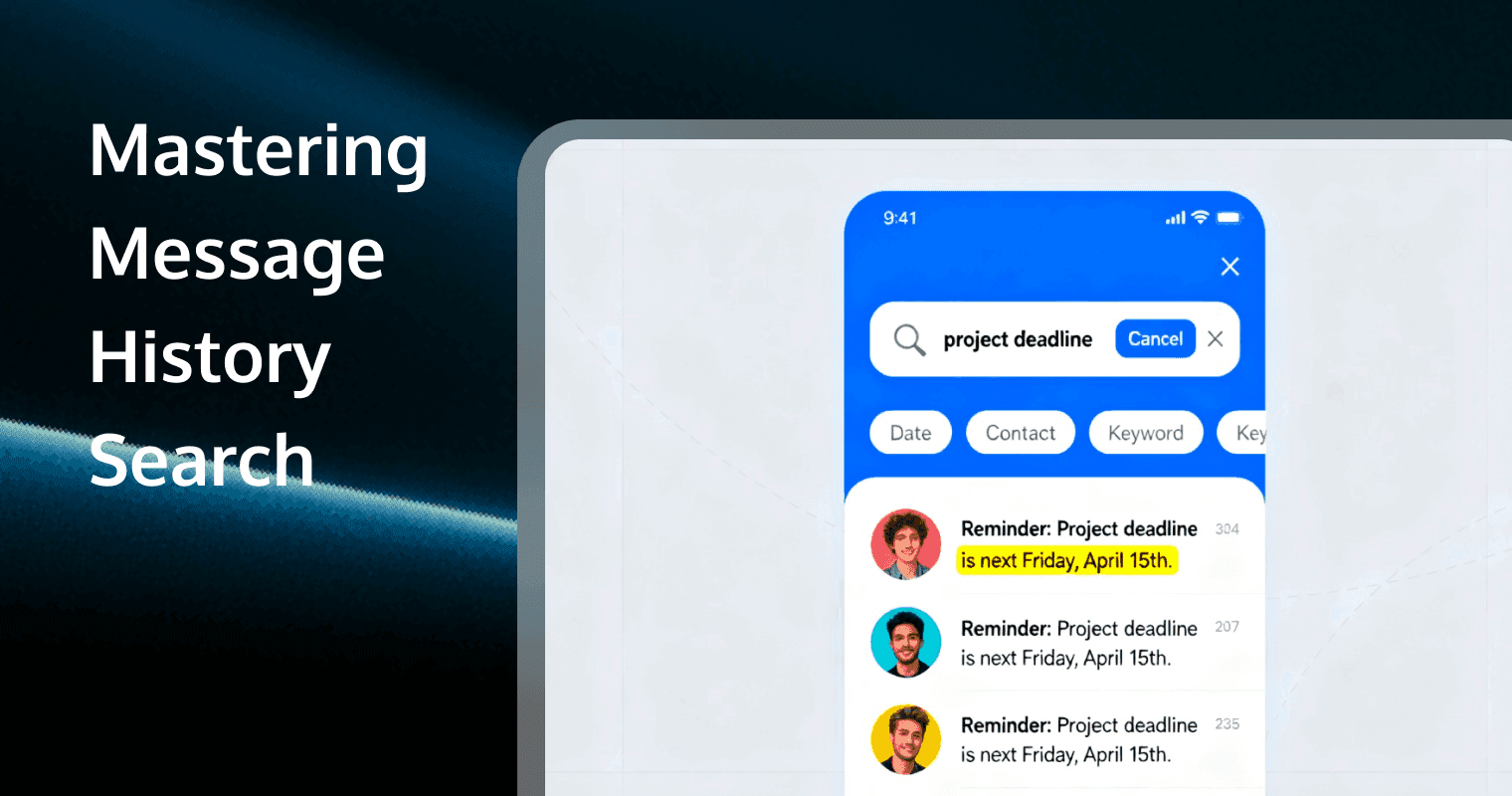

The platform also provides nuanced control over message storage and retrieval. Historical messages can be pulled from both the cloud and the local database, with the SDK automatically returning locally stored messages in the event of a network exception. For group chats, the platform supports both local and cloud search, but developers should note that messages from certain group types, such as AVChatRoom, are not saved locally. A key feature is the ability to search for cloud messages using the searchCloudMessages API, which is available as a value-added service for certain editions of the platform.
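The SDK performs the cloud-to-local fallback itself, but the same logic is easy to picture at the application level. In the sketch below, `fetch_cloud`, `NetworkError`, and the dictionary standing in for the local database are all hypothetical; the only detail taken from the text is that AVChatRoom messages are not stored locally, so an offline read for such a group comes back empty.

```python
from typing import Callable, Dict, List


class NetworkError(Exception):
    """Raised by the hypothetical cloud fetcher when the network is unavailable."""


# Group types whose messages are never written to the local database (per the text).
NOT_STORED_LOCALLY = {"AVChatRoom"}


def load_history(
    conversation_id: str,
    group_type: str,
    fetch_cloud: Callable[[str], List[str]],
    local_db: Dict[str, List[str]],
) -> List[str]:
    """Prefer cloud history; fall back to the local copy on a network error."""
    try:
        messages = fetch_cloud(conversation_id)
    except NetworkError:
        # Offline: serve whatever was stored earlier (empty for uncached group types).
        return list(local_db.get(conversation_id, []))
    if group_type not in NOT_STORED_LOCALLY:
        local_db[conversation_id] = list(messages)  # refresh the local copy
    return messages


if __name__ == "__main__":
    local: Dict[str, List[str]] = {}

    def online(cid: str) -> List[str]:
        return ["hi", "there"]

    def offline(cid: str) -> List[str]:
        raise NetworkError()

    load_history("g1", "Work", online, local)
    print(load_history("g1", "Work", offline, local))  # ['hi', 'there']
    load_history("live", "AVChatRoom", online, local)
    print(load_history("live", "AVChatRoom", offline, local))  # []
```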

Crucially, the service provides different message retention policies based on the service edition, allowing businesses to choose a plan that aligns with their needs. The storage periods vary from 7 days for the Free Trial and Standard editions to 30 days for the Pro edition, and up to 90 days for the Pro Plus and Enterprise editions. This tiered approach provides flexibility and a clear upgrade path for businesses as their data retention needs evolve.
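The retention tiers amount to a simple date calculation. The sketch below encodes the periods listed above and checks whether a message is still inside its edition's window. The function names are hypothetical, and treating the boundary as inclusive is an assumption; the service's documentation is the authority on exact cut-off semantics.

```python
from datetime import datetime, timedelta, timezone

# Cloud retention periods per edition, in days, as listed in the text.
RETENTION_DAYS = {
    "Free Trial": 7,
    "Standard": 7,
    "Pro": 30,
    "Pro Plus": 90,
    "Enterprise": 90,
}


def oldest_retrievable(edition: str, now: datetime) -> datetime:
    """Earliest send time whose message can still be pulled from the cloud."""
    return now - timedelta(days=RETENTION_DAYS[edition])


def is_retrievable(sent_at: datetime, edition: str, now: datetime) -> bool:
    """True if the message is inside the edition's window (inclusive boundary assumed)."""
    return sent_at >= oldest_retrievable(edition, now)


if __name__ == "__main__":
    now = datetime(2024, 6, 30, tzinfo=timezone.utc)
    sent = now - timedelta(days=10)
    print(is_retrievable(sent, "Standard", now))  # False
    print(is_retrievable(sent, "Pro", now))       # True
```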

A Competitive Edge in Message Management

When comparing the message history capabilities of different platforms, the maturity and flexibility of the service are key differentiators. For example, Agora, a competing solution, offers a Signaling message history feature that is still in beta and requires developers to contact support to enable it and to request a custom storage duration. Furthermore, Agora's getMessages() method retrieves at most 100 historical messages per call.

Tencent RTC's approach is fully documented and provides a seamless, self-service experience with its tiered, predefined retention policies. The well-established APIs for both one-on-one and group messages, combined with the option for cloud search, demonstrate a more mature and enterprise-ready solution. This level of comprehensive, out-of-the-box functionality simplifies the developer's work and provides a more predictable and scalable foundation for a production application.

Q&A

Q1: How do cloud providers like Tencent and Agora handle the storage of chat history?

A1: They typically store chat history in a persistent, scalable cloud database. For example, Tencent Cloud offers cloud message storage as a feature, allowing developers to retrieve messages for a specific duration (e.g., 7 days or longer). Agora has a similar mechanism, often with customizable retention policies. This separates the real-time message flow from long-term storage, optimizing performance for both.

Q2: What is the trade-off between storing message history on the client side versus the server side?

A2: Client-side storage (e.g., on a user's device) is fast and provides offline access. However, it's not reliable for long-term history, as it's tied to a single device and can be lost if the user switches phones or uninstalls the app. Server-side storage is the industry standard for message persistence. It ensures history is synchronized across all of a user's devices and is always available, even if they log in from a new device.

Q3: Is message history search available by default in most RTC SDKs?

A3: While most RTC SDKs provide APIs for retrieving message history, the ability to perform a complex search (e.g., by keyword, user, or time range) is often an advanced feature or an optional paid add-on. This is because complex search queries require a different type of indexing and database architecture than simple message retrieval. For instance, Tencent Cloud offers advanced search capabilities as part of its paid IM services.
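To see why keyword search needs a different structure than paged retrieval, consider an inverted index, which maps each word to the set of messages containing it, so a query touches only the matching posting lists instead of scanning every message. The `KeywordIndex` class below is a hypothetical in-memory illustration with naive tokenization and AND semantics; it does not describe how Tencent Cloud implements search.

```python
import re
from collections import defaultdict
from typing import DefaultDict, List, Set

TOKEN = re.compile(r"\w+")


class KeywordIndex:
    """Hypothetical inverted index: token -> ids of messages containing it."""

    def __init__(self) -> None:
        self._postings: DefaultDict[str, Set[int]] = defaultdict(set)

    def add(self, msg_id: int, text: str) -> None:
        # Index each lowercase token once per message.
        for token in TOKEN.findall(text.lower()):
            self._postings[token].add(msg_id)

    def search(self, query: str) -> List[int]:
        """Ids of messages containing every query token (AND semantics), ascending."""
        tokens = TOKEN.findall(query.lower())
        if not tokens:
            return []
        result = set(self._postings.get(tokens[0], set()))
        for token in tokens[1:]:
            result &= self._postings.get(token, set())  # intersect posting lists
        return sorted(result)


if __name__ == "__main__":
    idx = KeywordIndex()
    idx.add(1, "Invoice sent to the client")
    idx.add(2, "Client meeting moved to Friday")
    idx.add(3, "Friday invoice reminder")
    print(idx.search("invoice"))         # [1, 3]
    print(idx.search("friday invoice"))  # [3]
```

Maintaining these posting lists on every write is the extra cost that makes search a separate, often paid, capability rather than a by-product of simple retrieval.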

Q4: What are the key performance considerations for implementing history search in an app with millions of users?

A4: Performance is critical for a good user experience. Key considerations include database indexing to enable fast keyword searches, optimizing query performance to prevent server timeouts, and implementing pagination to load results in chunks rather than all at once. Cloud providers like Tencent Cloud handle these complexities on the backend, offering optimized APIs to developers.
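Indexing and pagination combine naturally for time-range queries. In the sketch below, a list of `(timestamp, message_id)` pairs kept in sorted order plays the role of a database index: binary search locates the first match in O(log n), `limit` bounds the work per request, and the last entry of each page serves as a keyset cursor for the next one. The function name and cursor format are hypothetical.

```python
import bisect
from typing import List, Optional, Tuple

Entry = Tuple[int, int]  # (timestamp, message_id); a sorted list of these acts as the index


def query_time_range(
    index: List[Entry],
    start: int,
    end: int,
    limit: int,
    after: Optional[Entry] = None,
) -> Tuple[List[Entry], Optional[Entry]]:
    """Return up to `limit` entries with start <= timestamp < end, plus a next-page cursor.

    `after` is the cursor returned by the previous call (keyset pagination);
    the returned cursor is None when no further matches remain.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    # (start,) sorts before every (start, id), so this finds the first timestamp >= start.
    lo = bisect.bisect_left(index, (start,))
    if after is not None:
        lo = max(lo, bisect.bisect_right(index, after))
    page: List[Entry] = []
    i = lo
    while i < len(index) and index[i][0] < end and len(page) < limit:
        page.append(index[i])
        i += 1
    has_more = i < len(index) and index[i][0] < end
    return page, (page[-1] if has_more else None)


if __name__ == "__main__":
    index = [(100, 1), (105, 2), (105, 3), (110, 4), (120, 5), (130, 6)]
    page, cursor = query_time_range(index, 100, 125, limit=2)
    print(page, cursor)  # [(100, 1), (105, 2)] (105, 2)
```

A keyset cursor stays cheap on deep pages, whereas an offset forces the server to skip over every earlier row on each request.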