Imagine that you are watching a live video and there is a noticeable lag. Frustrating, isn't it? Low latency video streaming addresses this common problem by delivering video content in near real time. In this blog, we will explore what you need to know about low latency video streaming: what it is, the main types of latency, common use cases, and how to achieve it. Read on to improve your online video experiences.

What Is Low Latency Video Streaming?

Low latency video streaming refers to the transmission of video content over the internet with minimal delay. It aims to keep the time between capturing the video and displaying it to the viewer (often called glass-to-glass latency) as short as possible, typically a few seconds or less. This type of streaming is crucial in scenarios where real-time interaction and quick decision-making are essential, such as live sports, video gaming, and interactive webinars. Reducing latency keeps the stream closely in sync with real-time events, giving viewers an experience that is almost indistinguishable from watching live.
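Because latency is measured from capture to display, it is the sum of delays added at every stage of the pipeline. The minimal Python sketch below assumes a simplified five-stage pipeline; the stage names and millisecond values are hypothetical, chosen only to show how the total adds up and how to spot the stage worth optimizing first.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical per-stage delays, in milliseconds (illustrative, not measured)."""
    capture_ms: float
    encode_ms: float
    network_ms: float
    player_buffer_ms: float
    decode_render_ms: float

    def glass_to_glass_ms(self) -> float:
        # End-to-end latency is the sum of every stage a frame passes through.
        return (
            self.capture_ms
            + self.encode_ms
            + self.network_ms
            + self.player_buffer_ms
            + self.decode_render_ms
        )

    def largest_stage(self) -> str:
        # Name of the stage contributing the most delay.
        stages = {
            "capture": self.capture_ms,
            "encode": self.encode_ms,
            "network": self.network_ms,
            "player_buffer": self.player_buffer_ms,
            "decode_render": self.decode_render_ms,
        }
        return max(stages, key=stages.get)


# Made-up example values.
budget = LatencyBudget(capture_ms=33, encode_ms=50, network_ms=80,
                       player_buffer_ms=1000, decode_render_ms=30)
print(budget.glass_to_glass_ms())  # 1193
print(budget.largest_stage())      # player_buffer
```

In this made-up example the player buffer dominates, so shrinking it would matter far more than shaving a few milliseconds off decoding.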

Why Is Low Latency Video Streaming Important?

Low latency video streaming is important because it significantly enhances the interactivity and responsiveness of online experiences. In environments where delay can disrupt the flow of information or interaction, such as telemedicine or remote education, low latency ensures that communications are immediate and more natural.

This immediacy keeps communication effective, letting participants interact almost as if they were face-to-face, which minimizes misunderstandings and makes exchanges more efficient. In essence, low latency streaming helps bridge the gap between virtual and real-world interactions, providing a smoother and more engaging user experience.

What Are the Main Types of Latency?

In video streaming, latency is commonly grouped into three tiers based on how long the delay is. Understanding these tiers can help you choose the right technology for your use case and diagnose performance issues:

Standard Latency

Standard latency, sometimes called "regular" latency, is the delay typical of conventional live streaming over segment-based HTTP protocols such as HLS and DASH. It is usually longer than about five seconds and often reaches 10 to 30 seconds or more. This level of latency is common in standard live broadcasting, where a short delay is acceptable. It leaves room for buffering and processing, which keeps the video stream stable and continuous. Standard latency is adequate for most traditional broadcast content where real-time interaction with the audience is not critical.
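Much of standard latency comes from segment-based delivery such as HLS: the player downloads video in multi-second segments and starts playback several segments behind the live edge. The HLS specification (RFC 8216) advises clients not to start less than three target durations from the end of a live playlist, so the sketch below assumes three segments by default. The segment lengths and the fixed overhead figure are hypothetical, and real players may buffer more or less.

```python
def segmented_live_latency_s(segment_duration_s: float,
                             segments_behind_live: int = 3,
                             pipeline_overhead_s: float = 0.0) -> float:
    """Rough viewer delay for segment-based HTTP live streaming.

    Assumes the player starts `segments_behind_live` whole segments back from
    the live edge, plus a fixed (hypothetical) encode/package/network overhead.
    """
    if segment_duration_s <= 0 or segments_behind_live < 1:
        raise ValueError("need a positive segment duration and at least one segment")
    return segment_duration_s * segments_behind_live + pipeline_overhead_s


# Hypothetical settings: 3 segments behind live, 2 s of other overhead.
print(segmented_live_latency_s(6, 3, 2))  # 20 (6-second segments)
print(segmented_live_latency_s(2, 3, 2))  # 8  (2-second segments)
```

The arithmetic shows why shortening segments is one of the main levers for moving from standard toward low latency with HTTP-based delivery.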

Low Latency

Low latency video streaming protocols reduce the delay to just a few seconds, making live broadcasts feel more immediate. This type of latency is crucial for interactive applications such as live auctions or online gaming, where near-real-time reactions are needed. Low latency improves the user experience by narrowing the gap between the actual live event and what the viewer sees, which boosts viewer engagement.

Ultra-low Latency

Ultra-low latency pushes the boundaries by reducing the delay to less than a second. This level of immediacy is required in highly interactive environments like financial trading platforms, sports broadcasting, or real-time communication tools like video conferencing, where every millisecond counts. Ultra-low latency ensures that actions and communications are almost instantaneous, providing an experience as close to real-time as possible.
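To tie the three tiers together, here is a small Python sketch that classifies a measured end-to-end delay. The thresholds come from this article (under one second for ultra-low latency, and under five seconds for low latency as stated in the FAQ); other sources draw the lines differently, so treat them as assumptions.

```python
def latency_tier(latency_s: float) -> str:
    """Map an end-to-end delay in seconds to a latency tier.

    Assumed thresholds: < 1 s is ultra-low, < 5 s is low, otherwise standard.
    """
    if latency_s < 0:
        raise ValueError("latency cannot be negative")
    if latency_s < 1.0:
        return "ultra-low"
    if latency_s < 5.0:
        return "low"
    return "standard"


for delay in (0.4, 3.0, 20.0):
    print(delay, latency_tier(delay))
# 0.4 ultra-low
# 3.0 low
# 20.0 standard
```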

What Are the Use Cases for Low Latency Video Streaming?

Low latency video streaming is crucial in many scenarios where real-time or near-real-time interaction is essential. Here are some key use cases:

- Live Sports Broadcasting: Allows fans to watch games in almost real-time, reducing the gap between live action and broadcast, which is critical for keeping the viewer experience as immediate and exciting as watching the game in the stadium.

- Online Gaming and Esports: Ensures that player actions and reactions are synchronized in real-time, because every millisecond can affect the outcome in competitive gameplay.

- Financial Trading: Traders rely on real-time data to make quick decisions. Low latency streaming ensures that financial broadcasts and updates are received as quickly as possible to maintain a competitive edge.

- Live Auctions: Enables bidders to see updates seamlessly, enhancing the fairness and efficacy of the bidding process. Immediate data transmission allows participants to react swiftly and adjust their bids based on real-time information, maintaining an equitable and dynamic auction environment.

- Video Conferencing: Improves communication in business settings by reducing delays, ensuring that conversations happen smoothly and naturally, almost as if all participants are in the same room.

How to Achieve Low Latency Video Streaming?

If you are building a video platform, you may wonder how to achieve low latency live video streaming. Here are some effective ways:

Optimize the Encoding Process: Use hardware acceleration to speed up video encoding. Hardware encoders are generally faster than software encoders and can noticeably reduce encoding delay, although the gain depends on the encoder and the settings used.

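Reduce Keyframe Interval: Lowering the keyframe interval means keyframes (full, independently decodable frames) are inserted more often. A player joining the stream must wait for the next keyframe before it can start decoding, so a shorter interval means a shorter wait, and segment-based protocols can cut shorter segments. The trade-off is a higher bitrate for the same quality, because keyframes are larger than the frames predicted from them.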
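To make the keyframe trade-off concrete, the Python sketch below computes the GOP length (frames between keyframes) for a target keyframe interval and estimates how long a newly joining viewer waits for the next keyframe. It assumes a constant frame rate and a viewer joining at a random moment. The helper names are hypothetical; `-c:v` (select the encoder) and `-g` (GOP size in frames) are standard ffmpeg options, and `h264_nvenc` is only an example of a hardware encoder that may or may not be available in your ffmpeg build.

```python
def gop_size_frames(fps: float, keyframe_interval_s: float) -> int:
    """Frames between consecutive keyframes (the GOP length)."""
    return max(1, round(fps * keyframe_interval_s))


def keyframe_join_wait_s(fps: float, gop_frames: int) -> tuple[float, float]:
    """Worst-case and average wait (seconds) for the next keyframe.

    Assumes a viewer joins at a uniformly random point inside a GOP.
    """
    interval_s = gop_frames / fps
    return interval_s, interval_s / 2


def ffmpeg_video_args(encoder: str, fps: float, keyframe_interval_s: float) -> list[str]:
    """Build (but do not run) ffmpeg video options for a given keyframe interval.

    `encoder` might be a software encoder such as "libx264" or a hardware one
    such as "h264_nvenc" (NVIDIA GPUs); availability depends on the build.
    """
    return ["-c:v", encoder, "-g", str(gop_size_frames(fps, keyframe_interval_s))]


for interval in (4, 1):
    gop = gop_size_frames(30, interval)
    print(interval, gop, keyframe_join_wait_s(30, gop))
# 4 120 (4.0, 2.0)
# 1 30 (1.0, 0.5)
print(ffmpeg_video_args("h264_nvenc", 30, 1))
# ['-c:v', 'h264_nvenc', '-g', '30']
```

Dropping the interval from four seconds to one cuts the worst-case join wait from 4 s to 1 s at 30 fps, at the cost of four times as many keyframes.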

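Use Efficient Video Codecs: Codecs such as H.264 and HEVC (H.265) compress video efficiently, which reduces the amount of data that needs to be sent and therefore the time it takes to deliver and begin playing the video. HEVC typically compresses better than H.264 at the same quality, but it is more computationally demanding to encode, so hardware encoding support is an important factor when choosing between them.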
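The link between compression and delay is simple arithmetic: the fewer bits a chunk of video needs, the sooner it crosses the network. The rough Python sketch below ignores protocol overhead, packet loss, and congestion. The bitrates are hypothetical; the 3 Mbps case simply assumes a more efficient codec reaching similar quality at half the bitrate of the 6 Mbps case, which is an illustrative figure, not a benchmark.

```python
def seconds_to_deliver(bitrate_mbps: float, video_seconds: float, link_mbps: float) -> float:
    """Time to send `video_seconds` of video encoded at `bitrate_mbps` over a link.

    Purely illustrative: ignores protocol overhead, loss and congestion.
    """
    if link_mbps <= 0:
        raise ValueError("link bandwidth must be positive")
    return bitrate_mbps * video_seconds / link_mbps


# Hypothetical: a 2-second chunk over a 10 Mbps connection.
print(seconds_to_deliver(6.0, 2.0, 10.0))  # 1.2
print(seconds_to_deliver(3.0, 2.0, 10.0))  # 0.6
```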

Leverage Content Delivery Networks (CDNs): Deploying your video streams through a CDN can reduce latency by caching the video closer to the viewer’s location. This reduces the travel time of the data across the network.
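One reason proximity matters is propagation delay: signals in optical fiber travel at roughly two-thirds of the speed of light in a vacuum, about 200 km per millisecond, and every request/response round trip pays that cost twice. The back-of-the-envelope Python sketch below uses hypothetical distances; real network routes are longer than straight-line distance and add queuing and processing delays on top.

```python
# Approximate signal speed in optical fiber (about two-thirds of c).
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring detours and queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS


print(propagation_rtt_ms(8000))  # 80.0 (viewer far from the origin server)
print(propagation_rtt_ms(100))   # 1.0  (viewer near a CDN edge server)
```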

Choose a Real-Time Protocol: The transport protocol you use has a large impact on latency. Protocols like WebRTC, which typically carries media over UDP, are designed for real-time communication and generally achieve lower latency than segment-based HTTP streaming.

Limit Network Hops: Minimize the number of hops between the video source and the viewer. Each hop can introduce additional delays, so direct paths are preferable.

Conclusion

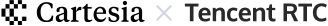

By now, you should know what low latency video streaming is, where it is used, and how to achieve it. Tencent's RTC (Real-Time Communication) platform has emerged as a powerful tool for low latency streaming.

Moreover, Tencent RTC reduces stuttering through intelligent QoS control and optimized encoding, ensuring high-quality, smooth, and stable audio/video communication.

If you have any questions or need assistance, our support team is always ready to help. Please feel free to contact us or join us on Telegram.

FAQs

What Is Considered Low Latency in Video Streaming?

Low latency in video streaming is generally defined as a delay of less than five seconds from the source to the viewer. This ensures that viewers experience content with minimal disruption, making it almost real-time.

How Does Low Latency Video Streaming Benefit Users and Content Providers?

Low latency video streaming protocols enhance the user experience by providing real-time or near-real-time interaction. For content providers, it means more engagement, as audiences are more likely to stay connected if there is no perceptible delay. This immediacy can also increase the accuracy and effectiveness of content delivery, particularly in live auctions, sports, or any interactive media, ensuring that all participants have a synchronized viewing experience.