The Technical Challenge of Real-Time Stylization

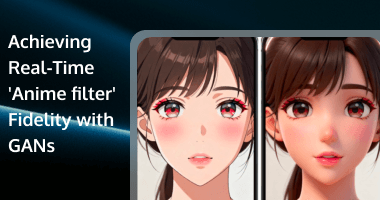

The global popularity of Japanese animation has driven immense search interest in the "Anime filter": the ability to transform oneself into a high-fidelity manga character. While numerous online tools offer static "photo to anime" conversion, the true technical frontier, and the major opportunity for live streaming and video platforms, lies in real-time video stylization.

Converting a live video stream into a consistent, high-quality anime aesthetic demands heavy computation at very low latency. The challenge is severe enough that some developers joke about needing a quantum computer to reach 60 frames per second (FPS) [29]. Tencent Beauty AR addresses this barrier with optimized GAN architectures and AI reinforcement, aiming to deliver the fidelity and speed that a professional-grade live anime filter requires.

The Quality Imperative: Why StyleGAN2 Dominates Anime Synthesis

The visual quality of a stylized filter depends directly on the underlying Generative Adversarial Network (GAN) architecture. Low-quality models produce noise, artifacts, and a loss of artistic fidelity. To achieve a professional, aesthetically pleasing anime style, the SDK must be able to run state-of-the-art generative models.

Research indicates that advanced GAN architectures such as StyleGAN2 outperform earlier models at generating realistic, high-fidelity anime faces [30]. The comparison is quantified with the Fréchet Inception Distance (FID), which measures how closely the distribution of generated images matches that of real images; a lower score is better. StyleGAN2 achieves an FID of 31, compared with 218.3 for ProGAN and 625 for DCGAN [30].
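For readers unfamiliar with the metric, the standard definition of FID is reproduced here. The symbols are notation introduced for this note and are not taken from the cited study: $\mu_r, \Sigma_r$ are the mean and covariance of Inception features extracted from real images, and $\mu_g, \Sigma_g$ are the same statistics for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

The score is zero when the two feature distributions have identical means and covariances, which is why a lower value indicates generated faces that are statistically closer to the reference set.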

Integrating GAN technology of this quality allows Tencent Beauty AR to deliver visuals that meet the artistic standards of the anime aesthetic.

Overcoming the Latency Barrier for Live Animation

The most significant technical hurdle for a live anime filter is latency. Static conversion tools (like some "Toongineer" or Canva features) can take their time processing a single high-resolution image. A real-time filter, however, must process continuous video input at 30 FPS or higher. At 30 FPS, each frame has to be captured, stylized, and rendered in roughly 33 ms; at 60 FPS the budget shrinks to about 17 ms, which is far tighter than what a typical offline image pipeline is designed for.
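The per-frame time budget implied by a target frame rate is simple arithmetic, sketched below. The function names are illustrative only and are not part of any SDK.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to process one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def sustains_rate(per_frame_ms: float, fps: float) -> bool:
    """True if a stage that takes per_frame_ms per frame can keep up with fps."""
    return per_frame_ms <= frame_budget_ms(fps)


for target in (30, 60):
    print(f"{target} FPS -> {frame_budget_ms(target):.1f} ms per frame")
# 30 FPS -> 33.3 ms per frame
# 60 FPS -> 16.7 ms per frame
```

A model that needs 20 ms per frame therefore fits a 30 FPS stream but not a 60 FPS one.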

Tencent Beauty AR 4.0's core innovation is its focus on performance optimization specifically for complex AI models. This optimization is delivered through:

- Algorithm Optimization: Re-engineered rendering pipelines ensure streamlined processing.

- AI Reinforcement: Targeted AI enhancement allows complex GAN models to run stably and efficiently on standard mobile hardware, mitigating the common issue of lag and ensuring a smooth, continuous animation experience.

This capability to bridge the gap between StyleGAN2-level visual fidelity and low-latency, real-time performance is the key differentiator for enterprise developers seeking to dominate the live stylization market.
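One generic way to keep a live filter responsive when inference exceeds the frame budget is to always stylize the newest captured frame and drop the stale ones. The simulation below is a minimal sketch of that general policy under assumed timings. It does not describe Tencent Beauty AR's internals, and the function name and parameters are hypothetical.

```python
def simulate_latest_frame_policy(num_frames: int, fps: float, inference_ms: float) -> list[int]:
    """Return indices of the frames stylized under a 'newest frame wins' policy.

    Frames are assumed to arrive at a fixed interval, and the model is assumed
    to take a constant inference_ms per frame (both are simplifications).
    """
    # Integer microseconds avoid floating-point edge cases at frame boundaries.
    interval_us = round(1_000_000 / fps)
    inference_us = round(inference_ms * 1000)

    processed = []
    clock_us = 0      # time at which the model is next free
    next_index = 0    # oldest frame that has not been handled yet
    while next_index < num_frames:
        arrival_us = next_index * interval_us
        if clock_us < arrival_us:
            clock_us = arrival_us  # model idles until the frame arrives
        # Skip ahead to the most recent frame captured by now.
        newest = min(clock_us // interval_us, num_frames - 1)
        processed.append(newest)
        clock_us += inference_us
        next_index = newest + 1
    return processed


print(simulate_latest_frame_policy(10, 30, 10))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(simulate_latest_frame_policy(10, 30, 70))  # [0, 2, 4, 6, 8, 9]
```

With a 10 ms model every frame of a 30 FPS stream is stylized. With a 70 ms model roughly every other frame is dropped, so the output stays current but updates at only about 14 FPS, which is why reducing inference time matters more than any scheduling trick.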

Creative Control: Training Custom Anime Styles

The market is driven by novelty, meaning developers need the flexibility to create unique, proprietary anime styles. This requires the ability to train the AI model on a specific, stylized dataset.

Training requirements: creating a brand-new style typically requires 60 to 100 specialized style frames so that the model learns the style consistently while still covering enough variation. However, modern techniques such as DreamBooth can sometimes achieve good results with as few as 12 specialized images.

The robust and simplified developer architecture of Tencent Beauty AR 4.0 ensures that platforms can easily integrate these custom, computationally heavy GAN models. This ongoing AI capability supports the rapid development of new stylized content, enabling developers to pursue new artistic expressions that attract overseas traffic and generate viral, novel effects.

The Future is Real-Time and High-Fidelity

The demand for the "Anime filter" is not going away, but the technical requirement has shifted from mere novelty to high-fidelity, real-time performance. Tencent Beauty AR addresses this by running advanced GAN architectures like StyleGAN2 at low latency, giving developers the speed, quality, and creative flexibility needed to lead the live video stylization market.

Q&A

Q: What is the main technical difference between a real-time Anime Filter and a static photo converter?

A: A real-time filter processes live video input and must sustain 30-60 FPS, which leaves a budget of roughly 17-33 ms per frame, so that the stylized result appears while the user is shooting. Static converters (like some image-to-anime apps) are optimized for high-quality, non-real-time processing of single images and cannot handle continuous video seamlessly.

Q: How difficult is it to train an AI model to replicate a completely new anime style?

A: Creating a novel style requires training the model on a style-specific dataset. Some techniques, such as DreamBooth, can work with as few as 12 specialized images, but most new styles require around 60–100 frames to ensure aesthetic consistency and variability.

Q: Why does the Anime filter sometimes take time to "set on the face" or require searching?

A: Complex real-time filters need time for the model to establish accurate face tracking and projection. Lag can also result from unoptimized processing or high resource consumption. Tencent Beauty AR aims to improve overall algorithm performance so that the filter engages quickly and stays stable.