Throughput is the volume of data actually delivered over a network per unit of time, generally measured in bits per second. It measures how much data a network can carry in practice. Latency, in contrast, is the delay data experiences in transit: the time a data packet takes to travel from source to destination.

In real-time communication, maintaining an optimal balance between throughput and latency is critical for ensuring efficient data transfer and minimizing network congestion.

To understand the two terms more deeply: in real-time communication, throughput and latency must be kept in balance. Pushing traffic close to the limit of a link's capacity causes congestion, so packets wait in queues, latency rises, and the real-time data flow is disrupted. On the other hand, low latency requires data to move quickly and efficiently, which in turn needs enough throughput to carry every stream without a backlog. Balancing the two keeps communication efficient.
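The claim that heavy traffic raises latency can be illustrated with the textbook M/M/1 queue (Poisson arrivals, exponentially distributed service times, a single server), in which the mean time a packet spends in the system is 1 / (mu - lambda), where mu is the service rate and lambda the arrival rate. This is an idealized model, not a description of any real router, and the link capacity below is a hypothetical number chosen only for illustration.

```python
def mm1_mean_delay_ms(arrival_pps: float, service_pps: float) -> float:
    """Mean time a packet spends in an M/M/1 queue (waiting plus service), in ms.

    Textbook result T = 1 / (mu - lambda); only defined while lambda < mu.
    """
    if arrival_pps >= service_pps:
        raise ValueError("offered load must stay below link capacity")
    return 1000.0 / (service_pps - arrival_pps)


# Hypothetical link that can forward 10,000 packets per second.
LINK_CAPACITY_PPS = 10_000.0

for utilization in (0.5, 0.8, 0.9, 0.99):
    delay_ms = mm1_mean_delay_ms(utilization * LINK_CAPACITY_PPS, LINK_CAPACITY_PPS)
    print(f"utilization {utilization:.0%}: mean delay {delay_ms:.2f} ms")
```

In this model, delay grows slowly at moderate load and sharply as utilization approaches 100%, which is why real-time systems usually leave headroom instead of running links at full capacity.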

What is Throughput?

In essence, throughput is the amount of data that a network actually delivers in a specific time frame. It is usually measured in bits per second (bps) and describes the volume of information successfully moved from source to destination. Throughput is bounded by, and often lower than, the network's bandwidth, which is its theoretical maximum capacity.
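As a minimal sketch of the arithmetic, average throughput over a measurement window is the number of bits delivered divided by the window length. The sample figures below are hypothetical, and network rates are formatted with decimal (SI) prefixes, as is conventional for bps.

```python
def throughput_bps(bytes_delivered: int, seconds: float) -> float:
    """Average throughput in bits per second over a measurement window."""
    if seconds <= 0:
        raise ValueError("measurement window must be positive")
    return bytes_delivered * 8 / seconds


def format_rate(bps: float) -> str:
    """Render a rate with decimal (SI) prefixes: 1 kbps = 1,000 bps."""
    for unit, scale in (("Gbps", 1e9), ("Mbps", 1e6), ("kbps", 1e3)):
        if bps >= scale:
            return f"{bps / scale:.2f} {unit}"
    return f"{bps:.0f} bps"


# Hypothetical sample: 1,875,000 bytes of media received in 10 seconds.
rate = throughput_bps(1_875_000, 10.0)
print(format_rate(rate))  # 1.50 Mbps
```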

In real-time communication, throughput is a critical factor. When the network sustains enough throughput to carry the combined bitrate of the audio and video streams, media arrives without gaps, keeping voice and video calls smooth and effective.

Beyond delivering clear and uninterrupted communication, throughput also influences other key aspects, including network performance, bandwidth utilization, and overall user experience.

What is Latency?

Latency is the delay that data packets experience as they travel from the source to the destination. In real-time audio and video communication, it is a critical element because it determines how quickly participants hear and see each other's responses.

Typically, a lower latency indicates a faster transmission. This is critical as high latency levels could lead to disjointed conversations, creating a poor user experience in real-time communication.

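Looking more closely, latency is often described as end-to-end delay. It is broader than "transmission delay," which strictly refers to only one of its components, the serialization delay. End-to-end latency is the total time a packet takes to travel from the sender to the receiver, including propagation, serialization, and queuing delays, as well as processing time in network devices.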
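The components can be added up for a single hop with the standard formulas: serialization delay is packet size divided by link rate, and propagation delay is distance divided by signal speed. The sketch below assumes signals travel through fiber at roughly 2 × 10^8 m/s (about two-thirds of the speed of light in vacuum); the queuing and processing values are hypothetical inputs, since in practice they depend on load and equipment. Over a multi-hop path, the per-hop terms accumulate.

```python
# Approximate signal speed in optical fiber (about two-thirds of c).
SPEED_IN_FIBER_M_PER_S = 2e8


def latency_breakdown_ms(packet_bytes: int, link_bps: float, distance_m: float,
                         queuing_ms: float = 0.0, processing_ms: float = 0.0) -> dict:
    """Split one-way latency over a single hop into its usual components, in ms."""
    parts = {
        # Serialization (transmission) delay: time to clock the packet's bits onto the link.
        "serialization": packet_bytes * 8 / link_bps * 1000,
        # Propagation delay: time for the signal to cover the physical distance.
        "propagation": distance_m / SPEED_IN_FIBER_M_PER_S * 1000,
        # Queuing and processing delays depend on load and hardware; supplied by the caller.
        "queuing": queuing_ms,
        "processing": processing_ms,
    }
    parts["total"] = sum(parts.values())
    return parts


# Hypothetical hop: 1,200-byte packet, 10 Mbps link, 2,000 km of fiber, 5 ms spent in queues.
for name, ms in latency_breakdown_ms(1200, 10e6, 2_000_000, queuing_ms=5.0).items():
    print(f"{name:>13}: {ms:.2f} ms")
```

With these assumptions, propagation (about 10 ms) and queuing dominate, while serialization of a single packet on a 10 Mbps link takes under 1 ms.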

The Importance of Balancing Throughput and Latency

Both high throughput and low latency are needed for good real-time communication performance. Keeping them in balance lets data flow smoothly with minimal delay, improves network efficiency, and gives users a smoother, more responsive interaction.

What are the differences between Throughput and Latency in Real-Time Communication?

Real-time communication, such as audio and video calls, relies on efficient and reliable data transmission. When discussing the performance of real-time communication systems, two important metrics to consider are throughput and latency. While both are crucial in evaluating the quality of communication, they represent different aspects of the system's performance. Let's delve into the differences between throughput and latency in real-time communication.

Throughput refers to the amount of data that can be transmitted over a network in a given amount of time. It is measured in bits per second (bps) or multiples such as kilobits per second (kbps) or megabits per second (Mbps). In the context of real-time communication, throughput determines the capacity of the network to handle the data required for smooth and uninterrupted communication. It reflects the system's ability to carry audio and video streams without stalls or packet loss caused by insufficient capacity.

On the other hand, latency refers to the delay experienced by data packets as they travel across a network. It is measured in milliseconds (ms) and represents the time taken for a packet to travel from the sender to the receiver. In real-time communication, latency directly affects the responsiveness and quality of the communication experience. Lower latency means less delay between sending a packet and receiving it, so interaction feels more immediate and conversation flows more naturally.

While throughput and latency are related, they are distinct metrics. Throughput measures the quantity of data delivered per unit of time, while latency measures how long each piece of data takes to arrive. High throughput indicates a network's capacity to handle large amounts of data, while low latency means each packet arrives quickly. A link can have high throughput and still high latency (a geostationary satellite link, for example), or low latency but limited throughput.
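A simplified model makes the distinction concrete: the time to deliver a payload is roughly the one-way latency plus the time needed to push its bits at the available throughput. The sketch ignores protocol effects such as windowing, slow start, and retransmissions, and both links are hypothetical. The 160-byte payload corresponds to 20 ms of 64 kbps G.711 audio.

```python
def delivery_time_ms(payload_bytes: int, latency_ms: float, throughput_bps: float) -> float:
    """Simplified time to deliver a payload: one-way latency plus time to push its bits.

    Ignores protocol effects such as windowing, slow start, and retransmissions.
    """
    return latency_ms + payload_bytes * 8 / throughput_bps * 1000


# Two hypothetical links: (one-way latency in ms, throughput in bps).
links = {
    "high-throughput, high-latency": (600.0, 100e6),
    "low-throughput, low-latency": (20.0, 5e6),
}
payloads = {
    "160-byte audio frame": 160,
    "50 MB file": 50_000_000,
}

for link_name, (latency_ms, rate_bps) in links.items():
    for payload_name, size in payloads.items():
        t = delivery_time_ms(size, latency_ms, rate_bps)
        print(f"{link_name:30} {payload_name:22} {t:10.1f} ms")
```

For the small audio frame, latency dominates and the low-latency link wins by a wide margin; for the large file, throughput dominates and the high-throughput link wins. Real-time media behaves like the first case, which is why the two metrics have to be evaluated separately.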

Optimizing Throughput and Latency in Real-Time Communication

To optimize throughput and latency in real-time communication, consider the following strategies:

1. Network Bandwidth: Ensure that your network has sufficient bandwidth to handle the data requirements of real-time communication. Higher bandwidth allows higher throughput and reduces the chance of congestion and the extra latency it causes.

2. Quality of Service (QoS): Implement QoS mechanisms to prioritize real-time communication traffic over other non-real-time traffic. This ensures that voice and video data packets are given higher priority, reducing latency and improving the overall quality of communication.

3. Codec Selection: Choose efficient and optimized codecs for audio and video compression. These codecs reduce the bitrate each stream requires, leaving throughput headroom and lowering the risk of congestion-induced latency. However, be mindful of the trade-off between compression and audio/video quality.

4. Network Optimization: Optimize your network infrastructure and configuration to minimize latency. This includes reducing the number of network hops, routing traffic over shorter and less congested paths, and implementing traffic shaping and prioritization techniques.

5. Buffering and Packet Loss: Implement proper buffering mechanisms to handle temporary network congestion or fluctuations in throughput. Additionally, use error correction techniques such as forward error correction (FEC) to recover from packet loss, which can significantly impact the quality of real-time communication.

6. Real-time Transport Protocol (RTP) Optimization: RTP is a standard protocol for real-time communication. Use RTP optimization techniques, such as header compression and congestion control, to reduce latency and improve throughput. These techniques help transmit audio and video streams efficiently, ensuring a smoother communication experience.
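Bandwidth, codec settings, and header compression interact through per-packet overhead. Each RTP packet carries at least 40 bytes of headers over IPv4 (20-byte IPv4 header, 8-byte UDP header, 12-byte fixed RTP header), which is large relative to a small audio payload. The sketch below assumes one codec frame per packet, a constant codec bitrate, and no link-layer framing, RTP header extensions, or SRTP authentication tags; the 32 kbps bitrate and the 4-byte compressed header size are hypothetical values, since actual compressed sizes vary.

```python
# Header sizes in bytes: IPv4 (no options) + UDP + fixed RTP header (no CSRCs or extensions).
IPV4_UDP_RTP_HEADER_BYTES = 20 + 8 + 12


def ip_level_bitrate_bps(codec_bps: float, frame_ms: float,
                         header_bytes: int = IPV4_UDP_RTP_HEADER_BYTES) -> float:
    """Bitrate of an audio stream at the IP layer, counting per-packet header overhead.

    Assumes one codec frame per packet and a constant codec bitrate; link-layer framing
    and SRTP authentication tags are not included.
    """
    packets_per_second = 1000 / frame_ms
    payload_bytes = codec_bps * frame_ms / 1000 / 8
    return (payload_bytes + header_bytes) * 8 * packets_per_second


CODEC_BPS = 32_000  # hypothetical audio codec bitrate

for frame_ms in (10, 20, 40):
    total = ip_level_bitrate_bps(CODEC_BPS, frame_ms)
    overhead = total - CODEC_BPS
    print(f"{frame_ms} ms frames: {total / 1000:.1f} kbps at the IP layer "
          f"({overhead / 1000:.1f} kbps of headers)")

# Hypothetical ~4-byte compressed header; real header-compression results vary.
compressed = ip_level_bitrate_bps(CODEC_BPS, 20, header_bytes=4)
print(f"20 ms frames with compressed headers: {compressed / 1000:.1f} kbps")
```

Larger frames cut header overhead but make each packet wait longer to fill, adding packetization delay; this is one concrete form of the throughput/latency trade-off.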

How does Latency impact Throughput in Real-Time Communication?

Latency is a crucial aspect of real-time communication that directly affects throughput. Throughput refers to the rate at which data is transmitted and received within a given time frame. In the context of audio and video calls, latency is the delay between sending a packet of data and its reception at the destination.

High latency can significantly impact throughput in real-time communication. Here's how:

1. Increased Round-Trip Time (RTT): RTT is the time it takes for a packet to travel from the sender to the receiver and back, so higher latency means a longer RTT. For protocols that limit how much unacknowledged data can be in flight, such as TCP, a longer RTT reduces the amount of data that can be delivered within a given time frame, ultimately reducing throughput.

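2. Delayed ACK and NACK: In real-time communication, acknowledgment (ACK) and negative acknowledgment (NACK) packets are crucial for reliable data transmission. Higher latency delays these feedback packets: a sender waiting on a late ACK may time out and retransmit data that was not actually lost, and a retransmission triggered by a late NACK may arrive too late to be played out. Both waste capacity and reduce effective throughput.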

3. Packet Loss and Retransmissions: The network congestion that raises latency through queuing also increases the likelihood of packet loss when router buffers overflow. Lost packets need to be retransmitted or concealed; retransmission adds to the overall latency and consumes capacity, reducing throughput.

4. Jitter Buffer Management: To compensate for varying latency, real-time communication systems employ jitter buffers. Jitter is the variation in packet arrival times caused by variable latency. Larger jitter requires a larger buffer to absorb late packets, and that buffering adds directly to end-to-end latency. Keeping latency stable is therefore essential to minimize jitter and maintain consistent throughput in real-time communication.

The Importance of Optimizing Throughput and Latency

Optimizing throughput and latency in real-time communication is crucial for delivering a seamless and high-quality user experience.
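The round-trip-time effect from the list above can be quantified for window-based senders: if at most one window of unacknowledged data can be in flight, throughput cannot exceed window / RTT, and the window needed to fill a link is its bandwidth-delay product. This applies to TCP-like protocols; RTP media over UDP is usually rate-controlled instead, so treat this as an illustration of the mechanism rather than a model of a media stream. The 100 Mbps link is hypothetical; 65,535 bytes is the largest TCP window without the window-scale option.

```python
def window_limited_throughput_bps(window_bytes: int, rtt_ms: float, link_bps: float) -> float:
    """Throughput ceiling for a sender allowed at most `window_bytes` unacknowledged.

    At most one window can be delivered per round trip, and never faster than the link.
    """
    return min(link_bps, window_bytes * 8 * 1000 / rtt_ms)


def bandwidth_delay_product_bytes(link_bps: float, rtt_ms: float) -> float:
    """Window size needed to keep a link fully utilized at a given RTT."""
    return link_bps * rtt_ms / 1000 / 8


WINDOW_BYTES = 65_535  # largest TCP window without the window-scale option
LINK_BPS = 100e6       # hypothetical 100 Mbps link

for rtt_ms in (20, 100, 200):
    bps = window_limited_throughput_bps(WINDOW_BYTES, rtt_ms, LINK_BPS)
    print(f"RTT {rtt_ms:>3} ms: at most {bps / 1e6:.2f} Mbps")

print(f"Window needed to fill 100 Mbps at 100 ms RTT: "
      f"{bandwidth_delay_product_bytes(LINK_BPS, 100):,.0f} bytes")
```

With a fixed window, a tenfold increase in RTT cuts the throughput ceiling tenfold, even though the link itself is unchanged.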

Strategies to Optimize Throughput and Latency in Real-Time Communication

Real-Time Communication (RTC) is a critical aspect of modern audio and video call applications. To ensure a smooth user experience, it is important to reduce latency and improve throughput. Here are some techniques employed to achieve these goals:

1. Network Optimization: One of the primary factors affecting latency and throughput is the network. Implementing techniques such as Quality of Service (QoS), traffic shaping, and network congestion control can help optimize the network for real-time communication. These techniques prioritize RTC traffic and ensure a stable and reliable connection.

2. Codec Selection: The choice of audio and video codecs can significantly impact latency and throughput. It is crucial to select codecs that strike a balance between compression efficiency and computational complexity. Codecs suited to real-time use, such as Opus for audio and VP9 for video, can be preferred to reduce latency. Additionally, hardware acceleration for encoding and decoding can reduce processing delay and CPU load.

3. Packet Loss Recovery: Packet loss, a common issue in network communication, can severely impact RTC quality. Implementing techniques such as Forward Error Correction (FEC) and packet loss concealment can help recover lost packets or mitigate their impact. These techniques can improve perceived quality and reduce the need for retransmission, reducing latency.

4. Buffering and Jitter Control: Buffering can help smooth out network fluctuations and reduce the impact of jitter. By carefully managing buffer sizes and implementing adaptive playout algorithms, it is possible to reduce the latency added to absorb jitter. Additionally, jitter buffer management techniques can help deliver packets to the decoder in order and keep playout smooth.

5. Network Infrastructure: Optimizing the network infrastructure can have a significant impact on latency and throughput. Reducing the number of network hops, routing traffic over shorter and less congested paths, and ensuring a stable and reliable connection can help minimize latency. Additionally, implementing traffic shaping and prioritization techniques can optimize the flow of data and improve overall throughput.

6. Real-time Transport Protocol (RTP) Optimization: RTP is a standard protocol used for real-time communication. Employing RTP optimization techniques such as header compression and congestion control can help reduce latency and improve throughput. These techniques ensure efficient transmission of audio and video streams, resulting in a smoother communication experience.

7. Monitoring and Analysis: Regularly monitoring and analyzing network performance can help identify potential bottlenecks and areas for improvement. By using tools like network analyzers and performance monitoring software, developers can gain insights into latency and throughput issues and make necessary adjustments to optimize real-time communication.
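Jitter control (item 4) and monitoring (item 7) both rely on measuring jitter. RTP receivers report an interarrival jitter estimate defined in RFC 3550, section 6.4.1: the difference in relative transit time between consecutive packets, smoothed with a gain of 1/16. The RFC works in RTP timestamp units; this sketch uses milliseconds for both send timestamps and arrival times to keep the numbers readable, and the packet trace is hypothetical.

```python
class InterarrivalJitter:
    """Running interarrival-jitter estimate following RFC 3550, section 6.4.1.

    The RFC uses RTP timestamp units; this sketch uses milliseconds throughout.
    """

    def __init__(self) -> None:
        self.jitter = 0.0
        self._prev_transit = None  # relative transit time of the previous packet

    def update(self, send_ms: float, arrival_ms: float) -> float:
        # Relative transit time; a constant clock offset between hosts cancels out below.
        transit = arrival_ms - send_ms
        if self._prev_transit is not None:
            # D(i-1, i): change in transit time between consecutive packets.
            d = abs(transit - self._prev_transit)
            # Exponential smoothing with gain 1/16, as specified in RFC 3550.
            self.jitter += (d - self.jitter) / 16
        self._prev_transit = transit
        return self.jitter


# Hypothetical trace: packets sent every 20 ms, the third one delayed by an extra 10 ms.
send_times = [0, 20, 40, 60]
arrival_times = [50, 70, 100, 110]

estimator = InterarrivalJitter()
for s, a in zip(send_times, arrival_times):
    print(f"sent {s:>3} ms, arrived {a:>3} ms -> jitter {estimator.update(s, a):.4f} ms")
```

An adaptive playout algorithm can size its jitter buffer from an estimate like this, growing the buffer when jitter rises and shrinking it when the network is steady; the exact sizing policy varies between implementations.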

Conclusion

Reducing latency and improving throughput are critical for ensuring a smooth and high-quality real-time communication experience. By implementing network optimization techniques, selecting efficient codecs, utilizing packet loss recovery mechanisms, managing buffering and jitter, optimizing the network infrastructure, and employing RTP optimization, developers can enhance the performance of audio and video call applications. Regular monitoring and analysis of network performance also play a crucial role in identifying and addressing latency and throughput issues. By prioritizing these strategies, audio and video call developers can provide users with seamless, real-time, and reliable communication experiences.

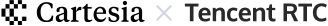

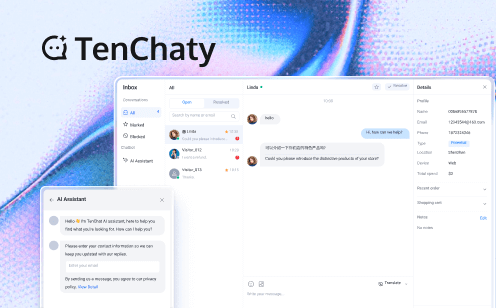

Tencent RTC builds on the advantages of low latency to provide seamless and immersive experiences, whether you're gaming, attending virtual conferences, or streaming live events. With advanced technology and algorithms, Tencent RTC ensures minimal delays, allowing for responsive interactions and enhanced engagement.

So, next time you're seeking an unparalleled audio and video experience, remember the importance of low latency and how Tencent RTC can elevate your real-time communication!

If you have any questions or need assistance, our support team is always ready to help. Please feel free to Contact Us or join us on Discord.