Glossary

ABR (Adaptive Bitrate Streaming)

Adaptive Bitrate Streaming (ABR) is a video streaming technique that dynamically adjusts the quality of a video being delivered to a user based on their network conditions and device capabilities. The primary goal of ABR is to provide the best possible viewing experience by minimizing buffering and ensuring smooth playback, even when network conditions fluctuate. ABR works by encoding the video content into multiple bitrates and resolutions, creating a range of video quality options. These options are then segmented into small chunks, typically a few seconds in length, and are made available to the user's video player.
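As a rough illustration of the selection step, the sketch below picks a rendition from measured throughput. The ladder values, the `pick_rendition` name, and the 0.8 safety factor are assumptions made for this example; real players use more elaborate heuristics that also consider buffer level and device capabilities.

```python
# Hypothetical encoding ladder: (label, bitrate in kbps), lowest quality first.
LADDER = [
    ("240p", 400),
    ("480p", 1200),
    ("720p", 2800),
    ("1080p", 5000),
]


def pick_rendition(throughput_kbps, ladder=LADDER, safety=0.8):
    """Return the highest rendition whose bitrate fits within a safety
    margin of the measured throughput; fall back to the lowest one."""
    budget = throughput_kbps * safety
    chosen = ladder[0]
    for rendition in ladder:
        if rendition[1] <= budget:
            chosen = rendition
    return chosen


# The player would repeat this decision for each chunk as conditions change.
for measured in (6500, 2000, 300):
    print(measured, "kbps ->", pick_rendition(measured)[0])
```

Because the decision is made per chunk, quality can step up or down during playback without restarting the stream.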

ASR (Automatic Speech Recognition)

Automatic Speech Recognition (ASR) is a technology that converts spoken language into written text. It's a form of artificial intelligence (AI) that uses algorithms to identify and process human speech. ASR can recognize and understand a wide range of spoken words and phrases, enabling it to transcribe spoken content into text, control devices, and execute commands.

ASR has a wide range of applications, including transcription services, voice assistants like Siri and Alexa, voice-controlled systems in cars, and accessibility tools for individuals with disabilities. It's also used in call centers to transcribe and analyze customer interactions, in healthcare for transcribing doctor-patient conversations, and in education for assisting students with learning disabilities.

Audio Codec

An audio codec is a program or a device that is used to encode or decode a digital data stream or signal for audio. The term 'codec' is a portmanteau of 'coder-decoder'. Audio codecs are used in applications that record and transmit or play back audio, such as telephony, streaming media, and in producing music. They work by compressing the data to make it easier to store or transmit, and then decompressing it for playback or editing.

There are two types of audio codecs - lossy and lossless. Lossy codecs, such as MP3, AAC, and Ogg Vorbis, reduce the size of the audio file by removing some of the audio information that is less important to the overall sound quality. This results in a much smaller file size, but some loss of quality. On the other hand, lossless codecs, such as FLAC, ALAC, and WMA Lossless, compress the audio data without losing any information, resulting in high-quality audio playback but larger file sizes.

AVC (Advanced Video Coding)

Advanced Video Coding (AVC), also known as H.264 or MPEG-4 Part 10, is a widely used video compression standard that enables efficient encoding and delivery of high-quality video content over the internet. Developed jointly by the Video Coding Experts Group of the International Telecommunication Union (ITU-T) and the Moving Picture Experts Group of the International Organization for Standardization (ISO) / International Electrotechnical Commission (IEC), AVC was designed to provide significant improvements in video compression performance compared to its predecessors, such as MPEG-2 and MPEG-4 Part 2.

AVC achieves its high compression efficiency through the use of various advanced coding techniques, such as motion estimation, intra-prediction, and transform coding. Motion estimation is a key feature of AVC, which involves predicting the movement of objects between frames and using this information to reduce redundancy in the video stream. Intra-prediction allows the encoder to predict and eliminate spatial redundancy within a single frame, while transform coding and quantization help further compress the data by representing the video information more compactly.

Balanced Audio

Balanced audio is a method of transmitting audio signals over cables using three conductors: two for carrying the audio signal and one for the ground. This approach is commonly used in professional audio applications where high-quality audio transmission is crucial, such as in recording studios, live sound setups, and professional performances.

The purpose of using balanced audio is to minimize interference and noise that can be introduced into the audio signal during transmission. The audio signal is split into two identical copies, one of which is inverted in polarity. These two signals are then sent through separate conductors, known as the "hot" and "cold" signals, while the ground conductor serves as a shield and common reference.

At the receiving end, the cold signal is subtracted from the hot signal. Because any noise or interference picked up along the cable appears almost equally on both conductors, the subtraction cancels it, while the two opposite-polarity copies of the audio reinforce each other. This results in a much cleaner audio signal than an unbalanced connection, which uses only a single conductor for the audio signal plus a ground.
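The cancellation can be checked numerically. The following is a minimal sketch, assuming idealized conditions in which exactly the same noise reaches both conductors; the function names and the test signals are made up for illustration.

```python
import math


def transmit_balanced(signal, noise):
    """Send the signal on 'hot' and its inverse on 'cold'.
    Both conductors are assumed to pick up identical noise."""
    hot = [s + n for s, n in zip(signal, noise)]
    cold = [-s + n for s, n in zip(signal, noise)]
    return hot, cold


def receive_balanced(hot, cold):
    """Differential receiver: (hot - cold) / 2 removes whatever is
    common to both conductors and keeps the audio."""
    return [(h - c) / 2 for h, c in zip(hot, cold)]


# A 5-cycle tone as the audio and a 20-cycle 'hum' as the interference.
signal = [math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]
noise = [0.3 * math.sin(2 * math.pi * 20 * t / 100 + 1.0) for t in range(100)]

hot, cold = transmit_balanced(signal, noise)
recovered = receive_balanced(hot, cold)
max_error = max(abs(r - s) for r, s in zip(recovered, signal))
print(f"max error after recombination: {max_error:.2e}")
```

In real cables the noise on the two conductors is never perfectly identical, so the cancellation is strong but not complete.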

Binaural Audio

Binaural audio is a recording technique that aims to create a realistic and immersive 3D sound experience for the listener. It involves capturing audio with two microphones, often placed inside a dummy head that simulates human ears. The resulting recordings are then played back through headphones, giving the listener a sense of audio perception similar to how they would hear sounds in the real world.

The key concept behind binaural audio is the use of two separate signals, one for each ear, to replicate the way sound waves interact with our head and ears. As sound travels from a source to our ears, it gets filtered and modified by various factors, including the shape of our ears, the distance between them, and the reflections off our head and torso. Binaural recording and playback aim to recreate these subtle cues, enabling a realistic audio experience with a sense of direction, distance, and spatial awareness.

Binaural audio finds applications in fields like virtual reality (VR), gaming, and audio entertainment. It can enhance the immersion and realism of virtual experiences, making users feel as if they are truly present in a simulated environment. It can also be used in audio storytelling, creating an engaging and lifelike auditory experience where sounds seem to be coming from all around the listener.

Bitrate

Bitrate refers to the amount of data that is transmitted or processed per unit of time, typically measured in bits per second (bps) or kilobits per second (kbps). In the context of digital media, such as audio and video files, bitrate is an important factor that determines the quality and size of the file. A higher bitrate generally results in better quality, as more data is used to represent the audio or video information, but it also leads to larger file sizes and increased bandwidth requirements for streaming.
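The relationship between bitrate, duration, and file size is simple arithmetic. The sketch below assumes a constant bitrate and decimal megabytes (1 MB = 1,000,000 bytes); the function name is hypothetical, and container overhead is ignored.

```python
def file_size_megabytes(bitrate_kbps, duration_seconds):
    """Approximate size of a constant-bitrate stream.

    bits = bitrate * duration, 8 bits per byte, 1 MB = 10**6 bytes.
    """
    total_bits = bitrate_kbps * 1000 * duration_seconds
    return total_bits / 8 / 1_000_000


# A 4-minute audio track at 128 kbps.
print(file_size_megabytes(128, 240))

# A 10-minute video at 5000 kbps (5 Mbps).
print(file_size_megabytes(5000, 600))
```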

Broadcast

Broadcasting is the transmission of audio, video, or data to a wide audience through electronic media channels like radio, television, and the internet. It enables mass communication by rapidly disseminating information to many people simultaneously. Traditional broadcasting uses airwaves, cable, and satellite systems, while modern broadcasting includes internet-based platforms like podcasts and video streaming services. Broadcasting plays a crucial role in delivering news, entertainment, and educational content to the public.

Call Transcription

Call transcription refers to the process of converting spoken words from a recorded phone call into written text. It involves using advanced technologies, such as speech recognition software, to automatically transcribe the audio content of the conversation.

Call transcription can be useful in various industries, including customer support, sales, legal, and healthcare. It allows organizations to monitor and evaluate the quality of interactions between agents and customers, identify areas for improvement, and ensure compliance with regulations and industry standards.

Chatbot

A chatbot is an artificial intelligence (AI) program designed to simulate human conversation and interaction. It uses natural language processing and machine learning techniques to understand and respond to user input in the form of text or voice messages.

The purpose of a chatbot is to engage in natural and fluid conversations with users. They can be employed for various tasks, such as answering common questions, providing product or service information, handling customer support requests, or even offering entertainment and conversation.

Echo Cancellation

Echo cancellation is a technology used to eliminate or reduce echo in telecommunication systems. Echo is a delayed, and often distorted, copy of the original audio signal that is returned to the person who produced it. It commonly occurs during phone calls or voice/video conferencing when audio played by a participant's loudspeaker is picked up by that participant's microphone and sent back to the other participants.

Echo cancellation algorithms analyze and filter out the echo so that the transmitted audio is clear. The process involves dynamically estimating the echo from the signal that caused it and then subtracting that estimate from the captured signal.

There are two main types of echo cancellation: acoustic echo cancellation (AEC) and line echo cancellation (LEC). AEC is used when the echo is caused by sound travelling from a loudspeaker to a microphone, either directly or after reflecting off walls, furniture, and other objects in the environment. LEC, on the other hand, is employed when the echo is caused by impedance mismatches in the telephone network.
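The "estimate, then subtract" idea can be sketched with a normalized least-mean-squares (NLMS) adaptive filter, one common choice for this task. This is a toy example: the echo path, tap count, and step size are assumptions, and there is no near-end speech or noise, which real cancellers must handle.

```python
import random


def nlms_echo_canceller(far_end, mic, taps=4, mu=0.5, eps=1e-8):
    """Adaptively estimate the echo path and subtract the estimated echo.

    far_end: samples played by the loudspeaker (the source of the echo)
    mic:     samples captured by the microphone (containing the echo)
    Returns (residual signal, final filter weights).
    """
    weights = [0.0] * taps
    history = [0.0] * taps  # most recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        estimate = sum(w * h for w, h in zip(weights, history))
        error = d - estimate  # what remains after removing the estimated echo
        power = sum(h * h for h in history) + eps
        weights = [w + mu * error * h / power for w, h in zip(weights, history)]
        residual.append(error)
    return residual, weights


rng = random.Random(0)
far_end = [rng.uniform(-1, 1) for _ in range(3000)]
echo_path = [0.0, 0.5, 0.3, 0.1]  # hypothetical echo response

# Microphone signal: the far-end audio convolved with the echo path.
mic = [
    sum(echo_path[k] * far_end[n - k] for k in range(len(echo_path)) if n - k >= 0)
    for n in range(len(far_end))
]

residual, weights = nlms_echo_canceller(far_end, mic)
print("estimated echo path:", [round(w, 3) for w in weights])
```

As the filter adapts, its weights approach the echo path and the residual shrinks toward zero.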

Encoding

In the context of video and audio, encoding refers to the process of converting analog signals or raw data into a digital format that can be stored, transmitted, or processed by electronic devices.

During encoding, video and audio data may undergo various processing stages. These can include color space conversion, resolution adjustment, bitrate optimization, and compression. The encoded video and audio data can then be stored in a digital file format such as AVI, MP4, or MKV, or transmitted over networks or other media for playback on devices such as televisions, computers, or mobile devices. Upon receiving the encoded data, a decoder reconstructs the video and audio for display or playback. With lossy compression, the result is an approximation of the original rather than an exact copy.

Encoding ladder

The encoding ladder is a collection of video files encoded at different quality levels and bitrates. It is used in adaptive streaming technologies to deliver videos seamlessly based on the viewer's internet speed and device capabilities. The ladder consists of multiple steps, each representing a different video quality, from low to high. By switching between these versions during playback, the video player helps keep streaming smooth and minimizes buffering.

The purpose of the encoding ladder is to optimize video delivery, balancing file size and visual quality. It allows streaming platforms to provide the best viewing experience by dynamically selecting the appropriate video quality based on the viewer's available bandwidth. This adaptive approach ensures uninterrupted playback and efficient bandwidth utilization.

Equalization (EQ)

Equalization, or EQ, is the process of adjusting the frequency balance of an audio signal. It involves boosting or cutting certain frequencies to enhance or reduce their prominence in the sound. EQ can be used to correct audio issues, shape the tonal balance, and improve the overall clarity and quality of the audio. Different types of EQ filters, such as graphic, parametric, shelving, and notch EQ, are available for specific adjustments. EQ is commonly used in music production, live sound, and other audio applications.

FFmpeg

FFmpeg is a free, open-source multimedia framework for recording, converting, and streaming audio and video. It includes the ffmpeg command-line tool along with libraries such as libavcodec and libavformat, which many media players and streaming applications build on.

FFmpeg can decode, encode, transcode, mux, demux, filter, and stream media in a very wide range of formats and codecs. It is commonly used to convert files between formats, change resolution or bitrate, extract audio tracks, and prepare renditions for adaptive streaming.

FPS (Frames per Second)

FPS, or frames per second, is a measure of the number of individual frames or images displayed per second in a video or animation. It indicates the smoothness and fluidity of motion in a visual sequence. In video production or gaming, FPS is an essential metric that determines the quality and real-time rendering capability of a system.

The frame rate of a video is determined during the recording or rendering process. Higher frame rates require more computational power and storage capacity. On the other hand, lower frame rates can result in a choppy or stuttering visual experience. The appropriate frame rate to use depends on the specific application and the desired visual effect.
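Two quantities follow directly from the frame rate: how long each frame stays on screen, and how many frames a clip contains. The helper names below are hypothetical, and the sketch assumes a constant frame rate.

```python
def frame_interval_ms(fps):
    """Time each frame is displayed, in milliseconds."""
    return 1000.0 / fps


def frames_in_clip(fps, duration_seconds):
    """Total number of frames in a clip of the given length."""
    return round(fps * duration_seconds)


for fps in (24, 30, 60):
    print(f"{fps} fps: {frame_interval_ms(fps):.2f} ms per frame, "
          f"{frames_in_clip(fps, 10)} frames in 10 s")
```

Doubling the frame rate halves the time available to render or encode each frame, which is why higher frame rates demand more processing power.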

H.264

H.264, also known as MPEG-4 Part 10 or AVC (Advanced Video Coding), is a widely used video compression standard. It is designed to efficiently compress and store or transmit video content while maintaining high visual quality. H.264 is known for its ability to deliver excellent video compression ratios, resulting in smaller file sizes without significant loss in image quality.

H.264 is widely supported by a range of devices, software, and platforms, making it a popular choice for various applications, including video streaming and distribution, video conferencing, video surveillance, and digital television broadcasting. It offers a good balance between video quality and file size, making it ideal for efficient delivery and playback on a wide range of devices, from smartphones and tablets to smart TVs and streaming platforms.

H.265

H.265, also known as High Efficiency Video Coding (HEVC), is the successor to the H.264 video compression standard. Like its predecessor, H.265 is designed to efficiently encode video content while maintaining high visual quality. However, H.265 offers better compression performance, meaning it can deliver comparable visual quality at a substantially lower bitrate, or better quality at the same bitrate, compared to H.264.

The benefits of H.265 are particularly valuable in scenarios where bandwidth or storage capacity is limited, such as video streaming, video conferencing, and 4K or ultra-high-definition (UHD) video content. It enables smoother streaming experiences, reduces data usage, and provides better visual fidelity on compatible devices. However, due to its more complex encoding process, decoding and encoding H.265 video may require more computational resources compared to H.264. Nonetheless, H.265 has gained widespread adoption and is supported by many video playback devices, software, and platforms.

HD

HD stands for High Definition and refers to the quality of video or image display. HD offers a higher level of visual clarity and resolution compared to standard definition (SD) video or images. In the context of video, HD generally refers to a resolution of 1280x720 pixels (720p) or 1920x1080 pixels (1080p).

HD video provides sharper and more detailed images with greater depth and color accuracy. The increased resolution allows for more pixels to be displayed, resulting in clearer and crisper visuals. HD is commonly used in various applications, including television broadcasts, Blu-ray discs, streaming platforms, and digital cameras.

Latency

Latency refers to the delay between sending and receiving data in a network. It is the time it takes for data to travel from its source to its destination. Low latency is desirable as it provides faster and more immediate communication. High latency can cause delays and impact real-time applications like gaming or video streaming. Latency is measured in milliseconds and is influenced by factors such as network congestion and distance. Optimizing network infrastructure and using faster technologies can help reduce latency and improve communication efficiency.

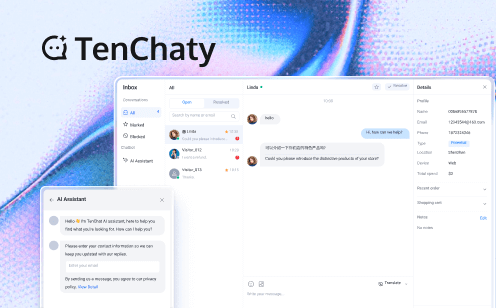

Live Chat

Live chat is a real-time communication feature that enables users to engage in text-based conversations over the internet. It allows individuals or businesses to provide instant support, interact with website visitors, or facilitate customer service interactions in real time. Live chat typically involves a chat widget or application embedded on a website or platform, which enables users to initiate chat sessions with customer service representatives or support agents.

With live chat, users can ask questions, seek assistance, or provide feedback to a representative who responds promptly. It provides a convenient and efficient method of communication, allowing users to receive immediate responses and resolve issues or inquiries in real-time. Live chat is often seen as an alternative or complement to other communication channels, such as phone calls or email.

Live Streaming

Live streaming is the process of broadcasting real-time audio or video content over the internet. It enables viewers to watch or listen to the content as it happens without having to download the entire file. Live streaming is used for a wide range of purposes, such as live events, gaming, sports, webinars, and more. The content is captured, encoded, and transmitted to a streaming server, which then distributes it to viewers in real-time. Viewers can access the stream through web browsers, mobile apps, or dedicated streaming platforms, allowing for interactive engagement and real-time interaction with the content creators.

Live streaming has become increasingly popular with advancements in technology and wider access to high-speed internet. It provides a dynamic and immersive experience for content creators and viewers, with features like live chat and audience participation. It has revolutionized the way events are shared, expanding the reach of content to a global audience in real-time.

Microphone

A microphone is a device used to capture and convert sound waves into electrical signals. It is an essential tool in audio recording, communication, and broadcasting. Microphones come in various types and designs, but they all serve the same basic purpose of converting sound into an electrical signal that can be amplified, recorded, or transmitted.

MPEG-DASH (Dynamic Adaptive Streaming over HTTP)

MPEG-DASH (Dynamic Adaptive Streaming over HTTP) is a streaming protocol that enables the adaptive delivery of multimedia content, such as video and audio, over the internet. It allows for the dynamic selection and streaming of different segments or chunks of the content, adjusting the quality and bit rate based on the viewer's network conditions.

With MPEG-DASH, multimedia content is encoded at several bit rates and resolutions, and each version is divided into smaller segments or chunks. These segments are made available on a server, described by a manifest file called the Media Presentation Description (MPD), and can be requested and downloaded by a client device using HTTP. The client device then dynamically selects and fetches the segments based on the available network bandwidth and device capabilities, ensuring a smooth playback experience.

One of the advantages of MPEG-DASH is its compatibility with various devices, platforms, and browsers, as it utilizes the widely supported HTTP protocol. It allows for seamless streaming across different devices, from smartphones and tablets to smart TVs and computers. Additionally, MPEG-DASH supports adaptive streaming, meaning it can adjust the quality of the content on the fly to match the viewer's network conditions, providing a consistent viewing experience.

Multi CDN

Multi CDN refers to the practice of delivering content through two or more content delivery networks (CDNs) rather than relying on a single provider. Traffic is distributed or switched between the CDNs based on factors such as viewer location, measured performance, availability, and cost.

By leveraging multi CDN, a streaming service can route each viewer to the CDN that currently performs best for them and fail over to another CDN during an outage or congestion. This approach helps reduce latency and buffering and improves reliability, resulting in a better viewing experience.

Noise

Noise refers to unwanted or excessive sound that disrupts the desired audio or signal. It can originate from various factors such as electrical interference, environmental factors, or human activities. Noise can have negative effects on communication, productivity, and health.

To mitigate its impact, noise reduction techniques and technologies, like soundproofing, noise-canceling headphones, or signal processing algorithms, are employed. Additionally, regulations and measures are implemented to control noise pollution and create quieter environments, ensuring a peaceful and healthy living and working environment for individuals.

PSTN (Public Switched Telephone Network)

PSTN stands for Public Switched Telephone Network. It is the traditional system of wired telephone lines and infrastructure used for voice communication over long distances. PSTN is a circuit-switched network that relies on physical copper wires to transmit analog voice signals.

PSTN is a widely used telecommunications network that connects homes, businesses, and public institutions, allowing them to make voice calls to each other both locally and globally. It provides services such as call routing, call setup, and call termination through a series of telephone exchanges and switches.

Although PSTN has been the backbone of voice communication for many years, it is gradually being replaced by digital and Internet-based communication technologies, such as Voice over IP (VoIP). However, PSTN still plays a significant role in areas where traditional phone lines are essential or where reliable voice communication is required, such as emergency services or rural areas with limited internet access.

Push Notification

Push notifications are real-time messages delivered directly to a user's device by mobile apps or web services. They serve as alerts or reminders, appearing even when the associated app is not actively being used. Users can opt-in to receive push notifications, granting apps permission to send them.

These notifications provide a valuable means for apps and businesses to engage with users by delivering timely and relevant information. They can be used to inform users about new messages, updates, promotions, or important events. Push notifications are facilitated by push notification services provided by operating systems, like APNs for iOS and FCM for Android. By leveraging push notifications, app developers and businesses can effectively communicate with users, enhancing user engagement and retention.

QoE

QoE stands for Quality of Experience. It refers to the subjective measure of an individual user's perception of the overall quality and satisfaction they derive from using a specific product or service, typically in the context of digital technology.

In the digital realm, QoE is particularly relevant in areas like video streaming, gaming, mobile apps, and web browsing. Organizations strive to optimize QoE by focusing on areas like fast loading times, smooth and uninterrupted playback, intuitive user interfaces, high-quality visuals and audio, and responsive interactions. By enhancing the QoE, businesses aim to provide users with a satisfying and enjoyable experience, leading to greater engagement, customer loyalty, and positive brand perception.

QoS

QoS, which stands for Quality of Service, refers to the techniques and mechanisms used to manage and prioritize network resources for different applications or services. It is implemented to ensure optimal performance and reliability by controlling factors like bandwidth, latency, and packet loss.

By utilizing QoS, network administrators can assign priorities to specific types of traffic and allocate resources accordingly. This prioritization allows for the smooth delivery of critical or time-sensitive applications, such as voice and video communication, by minimizing delays, jitter, and disruptions. QoS mechanisms include traffic prioritization, traffic shaping, congestion management, and resource reservation.

Real-time communication (RTC)

Real-time communication (RTC) refers to the ability to exchange information or engage in communication instantaneously or with very low latency. It enables individuals or systems to interact and communicate in real-time without any noticeable delays. RTC involves technologies and protocols that facilitate immediate data transmission and delivery.

RTC is utilized in various applications and platforms, including voice and video calls, instant messaging, live streaming, online gaming, and collaborative workspaces. These applications leverage RTC to provide seamless and interactive communication experiences, allowing users to connect and communicate effectively regardless of their physical locations. Real-time feedback and responsiveness are crucial in supporting engaging and efficient communication.

Resolution

Resolution refers to the level of detail in an image or display. It is measured in pixels and expressed as the number of pixels across the width and height of the image or display. Higher resolutions, such as Full HD (1920x1080) or 4K (3840x2160), result in sharper and more detailed visuals.

RTMP (Real Time Messaging Protocol)

RTMP, or Real-Time Messaging Protocol, is a network protocol primarily used for streaming audio, video, and data in real-time over the internet. It was developed by Adobe Systems and gained popularity for its ability to handle low-latency streaming and support interactive multimedia applications. RTMP establishes a persistent connection between a client and a server, allowing for real-time bidirectional communication and data exchange during streaming sessions.

Although RTMP was widely adopted for live streaming and on-demand video services in the past, its usage for playback has decreased in recent years. This decline is partly due to the end of support for Flash-based players and the rise of HTTP-based streaming protocols like HLS and DASH, which offer greater device and platform compatibility. Despite this shift, RTMP still finds utility in certain scenarios, such as legacy systems and sending a live feed from an encoder to a streaming server.

RTSP (Real Time Streaming Protocol)

RTSP, or Real-Time Streaming Protocol, is a network control protocol designed for controlling streaming media servers. It lets a client establish a media session and issue commands such as PLAY, PAUSE, and TEARDOWN, much like a remote control. RTSP itself does not usually carry the media. The audio and video are typically delivered separately using RTP (Real-time Transport Protocol).

RTSP is commonly used with IP cameras and video surveillance systems. It is not natively supported by web browsers, so RTSP streams are often converted to HTTP-based protocols like HLS and DASH for playback on the web.

Sample Rate

Sample rate, in the context of digital audio, refers to the number of samples taken per second during the process of converting analog sound waves into a digital format. It is measured in hertz (Hz) and determines the level of detail and accuracy of the audio reproduction. A higher sample rate means more samples are taken per second, resulting in a more precise representation of the original analog audio signal.

Different sample rates are used in various audio applications. One of the most commonly used sample rates in digital audio is 44.1 kHz, which is the standard for CD-quality audio. This sample rate is capable of capturing frequencies up to about 22 kHz (half the sample rate), covering most of the audible range for humans. Higher sample rates, such as 48 kHz, are often used in professional audio production and digital formats like DVD and Blu-ray. Sample rates above 48 kHz, such as 96 kHz or 192 kHz, are typically employed for high-resolution audio, where the goal is to capture even more detailed and accurate sound.
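The highest frequency a given sample rate can represent is half that rate (the Nyquist frequency), and the sample rate also sets the data rate of uncompressed audio. The sketch below works through both. The function names are hypothetical, and the 16-bit stereo figures are the usual CD parameters.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency representable at this sample rate."""
    return sample_rate_hz / 2


def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Data rate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


for rate in (44_100, 48_000, 96_000):
    print(f"{rate} Hz: up to {nyquist_hz(rate):.0f} Hz, "
          f"{pcm_bitrate_kbps(rate, 16, 2):.1f} kbps at 16-bit stereo")
```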

Screen Capture

Screen capture, also known as screen recording or screencasting, is the process of capturing and recording the contents of a computer or mobile device screen. It allows users to create videos or images of everything happening on their screen, including software demonstrations, tutorials, presentations, or gameplay.

To perform a screen capture, specialized software or built-in features in operating systems or video editing applications can be used. These tools enable users to select a specific region or the entire screen to be recorded. The captured content can be saved as a video file or an image, depending on the purpose.

SD (Standard Definition)

SD, or Standard Definition, refers to a video or display format that has a lower resolution and visual quality compared to high-definition (HD) or ultra-high-definition (UHD) formats. Standard Definition is typically characterized by a resolution of 480i or 576i, with an aspect ratio of 4:3, meaning that the image is four units wide for every three units tall.

In terms of video quality, SD offers a lower level of detail, sharpness, and color accuracy compared to HD or UHD formats. This lower resolution is suited for older television sets or devices with limited display capabilities. SD content may appear less crisp and clear, especially when viewed on larger screens or high-resolution displays where individual pixels may be more visible.

SIP (Session Initiation Protocol)

SIP (Session Initiation Protocol) is a network protocol designed for establishing, modifying, and terminating communication sessions in IP networks. It is widely used in Voice over IP (VoIP) systems, video conferencing, instant messaging, and other real-time communication applications. SIP allows devices and applications to locate, negotiate, and create sessions between participants for voice or video calls, chat sessions, and multimedia conferences.

SIP functions by sending messages between endpoints, such as SIP phones or softphones, to establish and control sessions. These messages include invitations (INVITE) to initiate a communication session, responses from the called party, and subsequent messages to modify or terminate the session. SIP also supports features like call routing, call transfer, presence information, and more, providing a foundation for advanced communication services.

SMS

SMS, which stands for Short Message Service, is a text messaging service that enables the exchange of short written messages between mobile devices. It has become a ubiquitous form of communication, allowing individuals to send and receive text messages quickly and conveniently. A single SMS message is limited to 160 characters when using the standard 7-bit GSM alphabet, making messages concise and suitable for short interactions.

The popularity of SMS lies in its simplicity and widespread accessibility. Unlike other communication platforms that require internet connectivity, SMS relies on cellular networks, making it available even in areas with limited internet connectivity or during network congestion. SMS is widely used for personal messaging, from staying in touch with friends and family to coordinating plans and sharing information. It is also utilized by businesses for various purposes, such as customer service notifications, appointment reminders, marketing campaigns, and authentication codes.

Despite the emergence of messaging apps and other forms of communication, SMS remains a widely used method of exchanging text messages due to its reliability, compatibility across different devices, and broad support from mobile network providers.
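The 160-character limit comes from the 140-octet message payload: with 7-bit characters, 140 x 8 / 7 = 160. Longer texts are split into linked parts, each of which gives up some space to a concatenation header. The sketch below estimates the number of parts, assuming every character fits in one 7-bit GSM character and a 6-octet header per part; texts using other alphabets have lower limits that are not modeled here.

```python
import math

PAYLOAD_OCTETS = 140
SINGLE_LIMIT = PAYLOAD_OCTETS * 8 // 7        # 160 characters
CONCAT_LIMIT = (PAYLOAD_OCTETS - 6) * 8 // 7  # 153 characters per linked part


def sms_parts(text):
    """Number of SMS parts needed, assuming one 7-bit character per symbol."""
    length = len(text)
    if length <= SINGLE_LIMIT:
        return 1
    return math.ceil(length / CONCAT_LIMIT)


for length in (50, 160, 161, 400):
    print(length, "characters ->", sms_parts("a" * length), "part(s)")
```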

Speaker

A speaker is an electroacoustic transducer that converts electrical signals into audible sound waves. It consists of various components, including a speaker driver, magnet, enclosure, and sometimes a crossover. The speaker driver, typically a diaphragm or cone connected to a voice coil, vibrates in response to the electrical signals passing through the voice coil. This vibration creates sound waves that are radiated into the surrounding air, producing the audible sound.

Speakers come in different sizes and types to suit various audio applications. For instance, smaller speakers, such as tweeters, are designed to reproduce high-frequency sounds, while larger speakers, like woofers, are specialized in generating low-frequency sounds. The enclosure plays a crucial role in optimizing the sound quality by providing proper acoustics and improving the efficiency of the speaker system.

Streaming

Streaming refers to the continuous transmission and playback of media over a network, allowing users to consume audio, video, or other multimedia content in real-time without the need to download the entire file before playing it. Streaming enables users to access and enjoy content immediately, as it is delivered and played in small pieces, or "chunks," while the rest of the media file continues to be downloaded in the background.
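
The chunked delivery described above can be sketched with a generator that hands out a media source piece by piece, so a consumer can start working on the first chunk before the last one has been read. The in-memory source and the tiny chunk size are assumptions chosen to keep the example short; real players fetch segments of several seconds over the network.

```python
import io
from typing import BinaryIO, Iterator


def iter_chunks(source: BinaryIO, chunk_size: int = 4) -> Iterator[bytes]:
    """Yield successive chunks from a binary source until it is exhausted."""
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        yield chunk


# Stand-in for a media file; each chunk could be decoded as soon as it arrives.
media = io.BytesIO(b"0123456789")
chunks = list(iter_chunks(media))
```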

Streaming utilizes various protocols and technologies to deliver content efficiently and maintain a continuous playback experience. These include HTTP-based protocols like HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP), as well as RTMP (Real-Time Messaging Protocol), which originated as a proprietary Macromedia (later Adobe) protocol, and RTSP (Real-Time Streaming Protocol), an IETF standard.

Transcoding

Transcoding is the conversion of digital media files from one format to another. It involves decoding the original file and re-encoding it into a different format. Transcoding is used to ensure compatibility between devices, optimize file size, improve playback quality, or meet specific requirements.

For example, a video file may be transcoded to a format supported by a particular device or platform. Transcoding can also be used to reduce file size without significant quality loss, making it easier to store or transmit large media files. Additionally, transcoding can adjust parameters like resolution or bit rate to suit the target screen or network. Because re-encoding with a lossy codec cannot restore detail that is missing from the source, such adjustments tailor a file to its destination rather than raise its quality above the original. Overall, transcoding is a process that enables media files to be more accessible, efficient, and compatible across different devices and platforms.
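
In practice the decode and re-encode step is often delegated to a command-line tool. The sketch below only builds an argument list for FFmpeg and does not run it; it assumes FFmpeg is installed with the libx264 encoder, and the file names, the default bit rate, and the helper name build_transcode_command are illustrative choices rather than recommendations.

```python
from typing import List


def build_transcode_command(
    src: str, dst: str, video_bitrate: str = "2M", height: int = 720
) -> List[str]:
    """Build an FFmpeg argument list that re-encodes src into dst."""
    return [
        "ffmpeg",
        "-i", src,                      # input file to decode
        "-c:v", "libx264",              # re-encode video as H.264
        "-b:v", video_bitrate,          # target video bit rate
        "-vf", f"scale=-2:{height}",    # resize, keeping the aspect ratio
        "-c:a", "aac",                  # re-encode audio as AAC
        dst,
    ]


command = build_transcode_command("input.mov", "output.mp4")
```

Passing the list to subprocess.run(command, check=True) would perform the conversion on a machine where FFmpeg is available.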

VBR (Variable Bit Rate)

Variable Bit Rate (VBR) is a compression technique used in audio and video encoding that adjusts the bit rate dynamically based on the complexity of the content being encoded. Instead of using a fixed bit rate throughout the entire encoding process, VBR allows for more efficient use of storage space or transmission bandwidth by allocating higher bit rates to more complex segments and lower bit rates to simpler segments.

In video encoding, VBR adjusts the bit rate on a frame-by-frame basis. This means that during scenes with high motion or complexity, more bits are allocated to preserve the quality and detail of the video. In contrast, during scenes with less motion or simpler content, fewer bits are allocated, resulting in smaller file sizes or lower bandwidth requirements.
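
The idea of spending more bits on complex content can be sketched as a proportional split of a bit budget. This is a toy model, not how production encoders perform rate control: the complexity scores are assumed to be given, and the function name allocate_bits is hypothetical.

```python
from typing import List, Sequence


def allocate_bits(complexities: Sequence[float], total_bits: int) -> List[int]:
    """Split a bit budget across frames in proportion to their complexity."""
    total_complexity = sum(complexities)
    if total_complexity <= 0:
        raise ValueError("at least one frame must have positive complexity")
    # Frames with higher complexity scores receive a larger share of the budget.
    return [round(total_bits * c / total_complexity) for c in complexities]


# A static frame, a moderately busy frame, and a high-motion frame.
budget = allocate_bits([1.0, 3.0, 4.0], total_bits=8000)
```

A constant bit rate encoder would instead give each of the three frames roughly the same share regardless of content.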

Video Bandwidth

Video bandwidth refers to the amount of data required to transmit a video stream over a network or internet connection. It specifies the rate at which data is transferred and is typically measured in bits per second (bps), kilobits per second (Kbps), or megabits per second (Mbps). For playback to proceed without buffering, the connection must sustain at least the bitrate of the stream plus any protocol overhead.

Video Bitrate

Video bitrate refers to the amount of data allocated to represent one second of video content. It is typically measured in bits per second (bps) or a variation such as kilobits per second (Kbps) or megabits per second (Mbps). The video bitrate directly impacts the quality and file size of a video.
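
The link between bitrate and file size is simple arithmetic: size equals bitrate multiplied by duration, divided by eight to convert bits to bytes. The sketch below ignores audio tracks and container overhead, and the function name is hypothetical.

```python
def estimate_size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Estimate the size of a video stream from its average bitrate."""
    # bits per second * seconds = total bits; 8 bits make one byte.
    return bitrate_bps * duration_s / 8


# One minute of video at 5 Mbps.
size = estimate_size_bytes(5_000_000, 60)
```

Under these assumptions, one minute at 5 Mbps comes to 37,500,000 bytes, or about 37.5 MB.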

Video Codec

A video codec, short for "coder-decoder," is a technology used to compress and decompress digital video files. It is responsible for encoding video data into a compact file size for storage, transmission, and playback, as well as decoding it for viewing or editing purposes.

Video codecs utilize algorithms and mathematical techniques to reduce the amount of data required to represent a video without significant loss in quality. These algorithms exploit redundancies and eliminate unnecessary information in the video frames to achieve compression. Different video codecs employ various compression methods, such as spatial compression (removing repeating patterns within a frame), temporal compression (encoding only the differences between frames), and transform coding (representing video data in the frequency domain).
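
Temporal compression can be illustrated with a toy delta encoder: the first frame is stored in full and every later frame is stored as its difference from the previous one. This is only a sketch of the principle, with frames modeled as short lists of pixel values; real codecs use motion estimation and entropy coding rather than plain subtraction.

```python
from typing import List

Frame = List[int]


def encode_deltas(frames: List[Frame]) -> List[Frame]:
    """Keep the first frame, then store only per-pixel changes."""
    if not frames:
        return []
    encoded = [list(frames[0])]
    for previous, current in zip(frames, frames[1:]):
        encoded.append([c - p for p, c in zip(previous, current)])
    return encoded


def decode_deltas(encoded: List[Frame]) -> List[Frame]:
    """Rebuild the original frames by accumulating the stored changes."""
    if not encoded:
        return []
    frames = [list(encoded[0])]
    for delta in encoded[1:]:
        frames.append([p + d for p, d in zip(frames[-1], delta)])
    return frames


original = [[10, 10, 10], [10, 12, 10], [10, 12, 11]]
encoded = encode_deltas(original)
```

When little changes between frames, most delta values are zero, which is what makes the encoded form cheap to compress further.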

Video Frame

A video frame is a single still image within a sequence of images that form a video. These frames are displayed rapidly in succession to create the illusion of motion. Each frame represents a specific point in time and contains visual information captured in that moment.

Video frames are the building blocks of video editing and playback. They can be manipulated, altered, or deleted to create desired effects or transitions. The smooth transition between frames gives the appearance of continuous movement in a video. Factors like resolution, aspect ratio, color depth, and compression determine the characteristics of each video frame.

Video Quality

Video quality refers to the perceived level of visual clarity, sharpness, detail, color accuracy, and overall fidelity of a video. It represents how well the video content reproduces the original image or intended visual experience.

Video Resolution

Video resolution refers to the number of pixels displayed on a screen to create an image or video. It is typically presented as the combination of horizontal and vertical pixel dimensions, such as 1920x1080 (Full HD) or 3840x2160 (4K Ultra HD).

The resolution of a video directly impacts the level of detail and clarity of the visual content. Higher resolutions offer greater sharpness, finer details, and more pixels to display the image. This is particularly noticeable when viewing videos on larger screens or when zooming in on the content.
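
To see why higher resolutions demand more storage and bandwidth, it helps to work out the size of uncompressed video. The sketch below assumes 24 bits per pixel (8 bits for each of three color channels) and a frame rate of 30 frames per second; both are illustrative assumptions, and the function names are hypothetical.

```python
def raw_frame_bytes(width: int, height: int, bits_per_pixel: int = 24) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8


def raw_bitrate_bps(
    width: int, height: int, fps: int, bits_per_pixel: int = 24
) -> int:
    """Bits per second needed to carry uncompressed video."""
    return width * height * bits_per_pixel * fps


full_hd_frame = raw_frame_bytes(1920, 1080)
full_hd_rate = raw_bitrate_bps(1920, 1080, fps=30)
```

Under these assumptions a single Full HD frame takes 6,220,800 bytes and the raw stream runs at roughly 1.49 Gbps, which is why video is almost always compressed before storage or transmission.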

Video Streaming

Video streaming is the process of delivering video content in real-time over the internet. It allows users to watch videos without the need for downloading the entire file. Instead, the video is transmitted in small, continuous data packets that can be played back immediately. This enables users to access and view a wide range of video content instantly, including movies, TV shows, and live events.

To stream a video, the content is first encoded and compressed into a streaming format. This compressed video data is then transmitted over the internet and received by the user's device, which decodes and plays back the video in real-time. Video streaming requires a stable and sufficient internet connection to ensure smooth playback, and the quality of the stream may vary based on factors like internet speed and device capabilities.

Video streaming has become increasingly popular due to its convenience and accessibility. It allows users to watch videos on-demand, eliminating the need for physical media or lengthy downloads. With the proliferation of streaming platforms and services, users have access to a vast library of content that can be streamed anytime, anywhere, on various devices.

VoIP (Voice Over IP)

VoIP, which stands for Voice over Internet Protocol, is a technology that enables voice communication and telephone services to be transmitted over the internet rather than traditional telephone networks. It allows users to make phone calls, send voice messages, participate in video conferences, and engage in other forms of real-time voice communication using IP-based networks.

VoIP works by converting analog voice signals into digital data packets that can be transmitted over the internet. These packets are then sent to the recipient and converted back into analog signals for playback. VoIP services can be accessed through various devices, including computers, smartphones, and dedicated VoIP phones, which need to be connected to the internet.
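
The packetization step can be sketched by cutting digitized audio into short fixed-length frames and numbering them. The figures below (8,000 samples per second, one byte per sample, 20 ms per packet) are assumptions that resemble common narrowband telephony settings; real systems also compress the audio with a codec and wrap each frame in transport headers, which this sketch omits.

```python
from typing import List, Tuple


def packetize(
    samples: bytes,
    sample_rate: int = 8000,
    frame_ms: int = 20,
    bytes_per_sample: int = 1,
) -> List[Tuple[int, bytes]]:
    """Split digitized audio into numbered packets of frame_ms each."""
    frame_bytes = sample_rate * frame_ms // 1000 * bytes_per_sample
    offsets = range(0, len(samples), frame_bytes)
    # The sequence number lets the receiver reorder packets and detect losses.
    return [(seq, samples[i:i + frame_bytes]) for seq, i in enumerate(offsets)]


one_second = bytes(8000)  # one second of silence as stand-in audio
packets = packetize(one_second)
```

With these settings, one second of audio becomes 50 packets of 160 bytes each.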

Webcast

A webcast refers to the broadcast or transmission of live or pre-recorded audio and video content over the internet. It allows users to view or listen to an event, presentation, or performance in real-time or on-demand using a web browser or a dedicated webcast platform.

Webcasts are commonly used for various purposes including corporate meetings, conferences, seminars, product launches, educational sessions, entertainment events, and more. They enable individuals or organizations to reach a wide audience, regardless of their geographic location, as long as they have an internet connection.

WebRTC

WebRTC, which stands for Web Real-Time Communication, is an open-source project that enables real-time audio, video, and data communication directly within web browsers and mobile applications. It provides a set of APIs (Application Programming Interfaces) and protocols that allow developers to build applications and services for peer-to-peer communication, as well as for integrating real-time communication capabilities into websites and applications.

WebRTC utilizes a combination of HTML5, JavaScript, and web browser APIs to enable direct communication between users, without the need for plugins, extensions, or third-party software. Media and data sent over WebRTC peer-to-peer connections are encrypted, using DTLS and SRTP, which protects privacy and data confidentiality.

WebSocket

WebSocket is a communication protocol that provides full-duplex, bidirectional communication between a client and a server over a single, long-lived connection. It is designed to enable real-time, low-latency communication between web browsers and servers, allowing for interactive and dynamic web applications.

The WebSocket protocol differs from HTTP, which is commonly used for request-response communication between clients and servers. In HTTP, each exchange starts with a client request that the server answers. WebSocket instead provides a persistent connection that remains open as long as needed, so either side can send a message at any time.
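
A WebSocket connection begins as an HTTP request that asks the server to upgrade the connection. As part of that opening handshake, defined in RFC 6455, the server derives a Sec-WebSocket-Accept value from the key the client sent. The sketch below shows only that derivation, not a working server; the function name accept_key is hypothetical, while the fixed GUID and the sample key come from the RFC.

```python
import base64
import hashlib

# Fixed GUID that RFC 6455 appends to the client's key.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Compute the Sec-WebSocket-Accept header value for a client key."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


# Sample key taken from the RFC 6455 handshake example.
accept = accept_key("dGhlIHNhbXBsZSBub25jZQ==")
```

Once the client has verified this value, both sides stop speaking HTTP and exchange WebSocket frames over the same connection.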